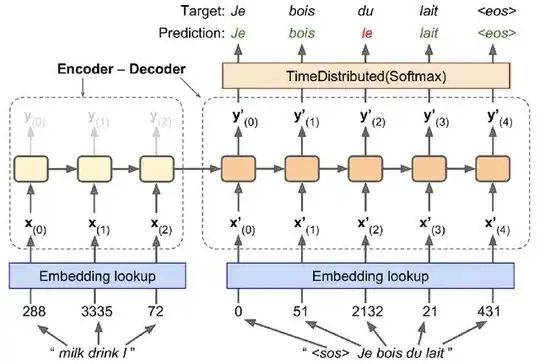

I am reading "Hands-On Machine Learning with Scikit-Learn and TensorFlow" by Aurelion Geron. I am currently reading the Encoder-Decoder section of the book and I stumbled upon some code that I don't fully understand, and I find the explanations from the book to be unsatisfactory (at least for someone like me, a beginner). The following picture presents the model we are trying to implement (or to be more precise, we will implement a model that is similar to the following picture, not exactly this model):

(picture from Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow, chapter 16, page 543, figure 16-3)

This is the code that was used (again, the above model is not the exact thing we are going to code. The author explicitly said that the model we will build is just similar to the above picture):

import tensorflow_addons as tfa

encoder_inputs = keras.layers.Input(shape=[None], dtype=np.int32)

decoder_inputs = keras.layers.Input(shape=[None], dtype=np.int32)

sequence_lengths = keras.layers.Input(shape=[], dtype=np.int32)

embeddings = keras.layers.Embedding(vocab_size, embed_size)

encoder_embeddings = embeddings(encoder_inputs)

decoder_embeddings = embeddings(decoder_inputs)

encoder = keras.layers.LSTM(512, return_state=True)

encoder_outputs, state_h, state_c = encoder(encoder_embeddings)

encoder_state = [state_h, state_c]

sampler = tfa.seq2seq.sampler.TrainingSampler()

decoder_cell = keras.layers.LSTMCell(512)

output_layer = keras.layers.Dense(vocab_size)

decoder = tfa.seq2seq.basic_decoder.BasicDecoder(decoder_cell, sampler,

output_layer=output_layer)

final_outputs, final_state, final_sequence_lengths = decoder(

decoder_embeddings, initial_state=encoder_state,

sequence_length=sequence_lengths)

Y_proba = tf.nn.softmax(final_outputs.rnn_output)

model = keras.Model(inputs=[encoder_inputs, decoder_inputs, sequence_lengths],

outputs=[Y_proba])

There are things in the above code that I don't know what they do, and there are things that I think I know what they do, so I'll try to explain exactly what I am confused about. If I am wrong in anything that I say from this point, please let me know.

We import tensorflow_addons.

In lines 2-4 we create the input layers for the encoder, for the decoder, and for the raw strings. We could see in the picture where these would go. A first confusion arises here: Why is the shape of encoder_inputs and decoder_inputs a list with the element None in in, while the shape of sequence_lengths is an empty list? What are the meaning of these shapes? Why are they different? Why must we initialize them like this?

In lines 5-7 we create the embedding layer and apply it on the encoder inputs and on the decoder imputs.

In lines 8-10 we create the LSTM layer for the encoder. We save the hidden state h and the memory cell state C of the LSTM, as this will be the input into the decoder.

Line 11 is another confusion for me. We apparently create a so called TrainingSampler, but I have no idea what this is or what it does. In the words of the author:

The TrainingSampler is one of several samplers available in TensorFlow Addons: their role is to tell the decoder at each step what it should pretend the previous output was. During inference, this should be the embedding of the token that was actually output. During training, it should be the embedding of the previous target token: this is why we used the TrainingSampler.

I don't really understand this explanation. What exactly does the TrainingSampler do? Does it tell the decoder that the correct previous output is the previous target? How does it do that? And even more imporantly, would we need to change this sampler during inference (since we wouldn't have the targets during inference)?

In lines 12 and 13, we define the decoder cell and the output layer. My question here is why do we define the decoder as LSTMCell, while we declared the encoder as LSTM, not a cell. I read on stackoverflow that LSTM is a recurrent layer, while LSTMCell contains the calculation logic for one step. But I don't understand why we had to use LSTM in the encoder and LSTMCell in the decoder. Why this difference? Is it because in the next line, the BasicDecoder actually expects a cell?

In the next few lines, we define the BasicDecoder and apply it on the decoder embeddings (again, I don't know what sequence_lengths does here). We get the final outputs, which we then pass through a softmax function.

There's a lot going on in that code and I am really confused about what happens. If someone could clear things up a bit, I would be extremely grateful.