I am learning Spark, I have below xml from which I want to read 2 values and create two different columns

<appRoot>

<applist>

<app type="test">

<code>8.52544</code>

</app>

<app type="dev">

<code>8.52537</code>

</app>

</applist>

</appRoot>

I want

if type="test" then it should set the value (i.e. 8.52544) in new column "app_test" and

if type="dev" then it should set the value (i.e. 8.52537) in new column "app_dev"

I tried below

df.select(

functions.when($"applist.app._type" === "test", $"applist.app.code").as("app_test"),

functions.when($"applist.app._type" === "dev", $"applist.app.code").as("app_dev"))

but it returns

app_test with value [8.52544, 8.52537]

app_dev with value [8.52544, 8.52537]

How can i differentiate while setting the value to the column?

Update:

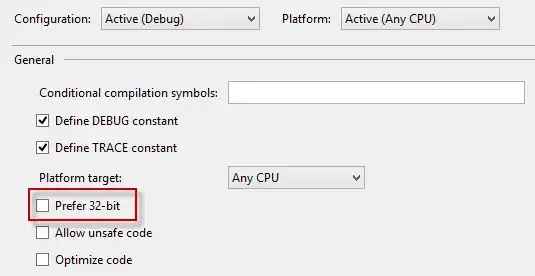

val df = spark.read

.format("com.databricks.spark.xml")

.option("rootTag", "appRoot")

.option("rowTag", "applist")

.load("test.xml")

df.printSchema()

root

|-- app: array (nullable = true)

| |-- element: struct (containsNull = true)

| | |-- _type: string (nullable = true)

| | |-- code: double (nullable = true)