Real Resilience with RSocket

RSocket, as a network protocol, treats resilience as a first-class citizen. In RSocket, resilience is exposed in two ways:

Resilience via flow-control (a.k.a Backpressure)

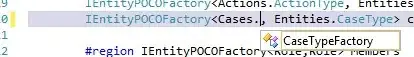

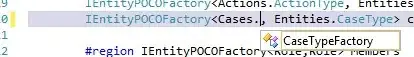

If you do streaming, your subscriber can control the number of elements delivered to it, so it will not be overwhelmed by a server. The animation below shows how the Reactive Streams specification is implemented at the RSocket protocol level:

As in Reactive Streams, the Subscriber (left-hand side) requests data via its Subscription. This request is transformed into a binary frame and sent over the network. Once the receiver gets that frame, it decodes it and delivers the request to the corresponding Subscription on the remote side, so that the remote Publisher produces no more than the requested number of messages.
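The demand-driven exchange described above can be sketched locally with the JDK's `java.util.concurrent.Flow` interfaces. This is only an in-process illustration, not the RSocket-Java API: `RequestNDemo`, `RangePublisher`, and `BatchSubscriber` are hypothetical names, and in RSocket the `request(n)` call would travel to the remote side as a binary frame rather than as a direct method call.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Flow;

final class RequestNDemo {

    /** Hypothetical publisher: emits 1..count, but never more than was requested. */
    static final class RangePublisher implements Flow.Publisher<Integer> {
        private final int count;

        RangePublisher(int count) {
            this.count = count;
        }

        @Override
        public void subscribe(Flow.Subscriber<? super Integer> subscriber) {
            subscriber.onSubscribe(new Flow.Subscription() {
                private int next = 1;          // next value to emit
                private long demand = 0;       // outstanding requested items
                private boolean emitting = false;
                private boolean done = false;

                @Override
                public void request(long n) {
                    if (done) {
                        return;
                    }
                    if (n <= 0) {
                        done = true;
                        subscriber.onError(new IllegalArgumentException("n must be positive"));
                        return;
                    }
                    // Accumulate demand, capping at Long.MAX_VALUE on overflow.
                    demand = (demand + n < 0) ? Long.MAX_VALUE : demand + n;
                    if (emitting) {
                        return; // re-entrant call from onNext; the running loop picks it up
                    }
                    emitting = true;
                    while (demand > 0 && next <= count && !done) {
                        demand--;
                        subscriber.onNext(next++);
                    }
                    emitting = false;
                    if (!done && next > count) {
                        done = true;
                        subscriber.onComplete();
                    }
                }

                @Override
                public void cancel() {
                    done = true;
                }
            });
        }
    }

    /** Hypothetical subscriber: requests an initial batch and records what arrives. */
    static final class BatchSubscriber implements Flow.Subscriber<Integer> {
        final List<Integer> received = new ArrayList<>();
        boolean completed = false;
        Throwable error;
        private final long firstBatch;
        private Flow.Subscription subscription;

        BatchSubscriber(long firstBatch) {
            this.firstBatch = firstBatch;
        }

        @Override
        public void onSubscribe(Flow.Subscription s) {
            this.subscription = s;
            s.request(firstBatch); // the subscriber, not the publisher, sets the pace
        }

        @Override
        public void onNext(Integer item) {
            received.add(item);
        }

        @Override
        public void onError(Throwable t) {
            error = t;
        }

        @Override
        public void onComplete() {
            completed = true;
        }

        /** Signals additional demand once the subscriber is ready for more. */
        void requestMore(long n) {
            subscription.request(n);
        }
    }
}
```

Subscribing a `BatchSubscriber` created with a first batch of 3 to a `RangePublisher` of 10 elements delivers only three items; the rest stay with the publisher until `requestMore` signals further demand.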

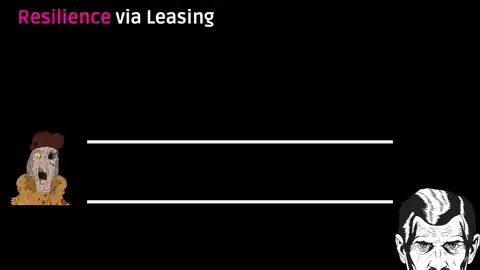

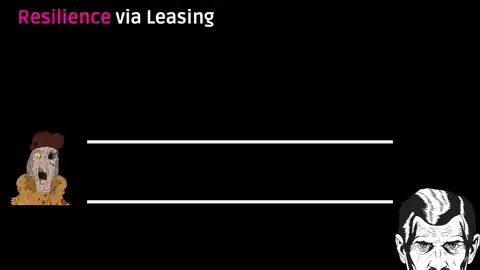

Resilience via Leasing

On the other hand, along with streaming, a server, which usually manages multiple connections, has to withstand the load, and in case of failure it should be able to prevent any further interactions. For that purpose, RSocket provides a built-in protocol feature called Leasing. In a nutshell, Leasing is rate limiting built into the protocol, where the request limit is dynamic and fully controlled by the Responder side.

A few phases can be distinguished in that process:

- Setup phase - this phase happens when a client connects to a server. Both sides have to provide particular flags to agree that they are ready to respect Leasing.

- Silence phase - in this phase, a Requester cannot do anything. There is a strict relationship: the Requester is not allowed to send anything unless the Responder allows it. If a Requester tries to send any requests, they fail immediately without any frame being sent to the remote.

- Lease provisioning phase - once a Responder has decided on its capacity and is ready to receive requests from the Requester, it sends a specific frame called Lease. That frame contains two crucial values: Number of Requests and Time to Live. The first value tells the Requester how many requests it can send to the Responder. The second value says how long that allowance is valid. If the Requester has not used all of its requests by that time, the allowance is treated as invalid, and any further requests are rejected on the Requester side.
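The Requester-side bookkeeping implied by these phases can be sketched as a small gate object. This is a simplified model under stated assumptions, not the RSocket-Java implementation: `LeaseGate`, `onLease`, and `tryAcquire` are hypothetical names, the Setup-phase flag negotiation is left out, and the clock is injected so the expiry behaviour can be exercised without waiting.

```java
import java.util.function.LongSupplier;

/** Hypothetical Requester-side gate that tracks the most recently received lease. */
final class LeaseGate {
    private final LongSupplier clockMillis; // injected clock, assumed to be monotonic
    private int remaining = 0;              // no lease yet: the silence phase
    private long expiresAtMillis = 0;

    LeaseGate(LongSupplier clockMillis) {
        this.clockMillis = clockMillis;
    }

    /** Called when a Lease frame arrives; a new lease replaces the previous one. */
    synchronized void onLease(int numberOfRequests, long timeToLiveMillis) {
        remaining = numberOfRequests;
        expiresAtMillis = clockMillis.getAsLong() + timeToLiveMillis;
    }

    /**
     * Returns true if a request may be sent now. When it returns false, the
     * caller fails the request locally and sends no frame to the remote.
     */
    synchronized boolean tryAcquire() {
        boolean expired = clockMillis.getAsLong() >= expiresAtMillis;
        if (remaining <= 0 || expired) {
            return false;
        }
        remaining--;
        return true;
    }
}
```

Before any lease arrives, `tryAcquire()` returns false, which matches the silence phase. After `onLease(2, 1000)`, two calls succeed and a third is rejected; once the time to live has passed, calls are rejected even if some of the allowance is unused.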

This interaction is depicted in the following animation:

Note

The Lease strategy works on a per-connection basis: when you issue a lease, you issue it for one particular remote Requester, not for all Requesters connected to your server. On the other hand, the whole server capacity can be shared among all connected Requesters with some math based on metrics, etc.
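As one possible illustration of such math, the sketch below splits a total request budget evenly across connections, handing any remainder to the first few. The even split is an assumption chosen for simplicity, and `LeaseBudget` and `splitEvenly` are hypothetical names; a real policy might weight each share by per-connection metrics instead.

```java
/** Hypothetical helper that divides a server-wide request budget among connections. */
final class LeaseBudget {

    /**
     * Returns one share per connection. Shares differ by at most one and
     * always sum to totalRequests.
     */
    static int[] splitEvenly(int totalRequests, int connections) {
        if (connections <= 0) {
            throw new IllegalArgumentException("connections must be positive");
        }
        if (totalRequests < 0) {
            throw new IllegalArgumentException("totalRequests must not be negative");
        }
        int[] shares = new int[connections];
        int base = totalRequests / connections;
        int extra = totalRequests % connections; // leftover requests to hand out one by one
        for (int i = 0; i < connections; i++) {
            shares[i] = base + (i < extra ? 1 : 0);
        }
        return shares;
    }
}
```

Each share would then become the Number of Requests value in the lease issued to the corresponding connection.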

Where to find an example of both

There are a couple of good samples that demonstrate how flow control and leasing can be used with RSocket. All of them can be found in the official Git repository of the RSocket-Java project.