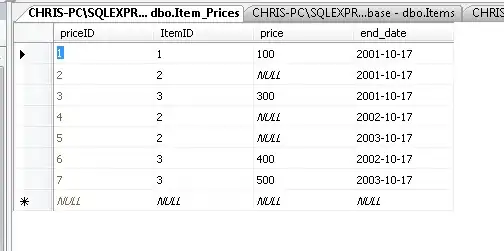

I am using Scrapy with Selenium in order to scrape urls from a particular search engine (ekoru). Here is a screenshot of the response I get back from the search engine with just ONE request:

Since I am using Selenium, I'd assume my user agent is fine, so what else could be causing the search engine to detect the bot immediately?

Here is my code:

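```python
import scrapy
from scrapy.selector import Selector
from scrapy_selenium import SeleniumRequest
from selenium.webdriver.common.by import By
from selenium.webdriver.common.keys import Keys
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait


class CompanyUrlSpider(scrapy.Spider):
    name = 'company_url'

    def start_requests(self):
        # Load the ekoru home page in the Selenium-driven browser
        # and save a screenshot of what the browser received
        yield SeleniumRequest(
            url='https://ekoru.org',
            wait_time=3,
            screenshot=True,
            callback=self.parseEkoru,
        )

    def parseEkoru(self, response):
        driver = response.meta['driver']

        # Type the query into the search box and submit it
        # (find_element(By.XPATH, ...) replaces the deprecated find_element_by_xpath)
        search_input = driver.find_element(By.XPATH, "//input[@id='fld_q']")
        search_input.send_keys('Hello World')
        search_input.send_keys(Keys.ENTER)

        # Wait for the results to render; reading page_source right after
        # pressing ENTER can return the page before the results exist
        WebDriverWait(driver, 10).until(
            EC.presence_of_element_located(
                (By.XPATH, "//div[@class='serp-result-web-title']/a")
            )
        )

        # Parse the rendered HTML with Scrapy's Selector
        response_obj = Selector(text=driver.page_source)
        links = response_obj.xpath("//div[@class='serp-result-web-title']/a")

        for link in links:
            yield {
                'ekoru_URL': link.xpath(".//@href").get()
            }
```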
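The assumption that Selenium gives a "fine" user agent can be checked by asking the browser what it actually reports. The sketch below is hypothetical: `looks_automated` is an illustrative helper, not part of Scrapy or Selenium, and it assumes you pass it the values returned by `driver.execute_script("return navigator.userAgent")` and `driver.execute_script("return navigator.webdriver")`. Chrome in its classic headless mode puts `HeadlessChrome` in its user agent, and a browser under WebDriver control exposes `navigator.webdriver` as `true`, so either signal means the browser is advertising itself as automated regardless of the user-agent string alone.

```python
from typing import List, Optional


def looks_automated(user_agent: str, webdriver_flag: Optional[bool]) -> List[str]:
    """Hypothetical helper: list the automation signals visible to a site."""
    reasons = []
    # Classic headless Chrome advertises itself in the user-agent string
    if "HeadlessChrome" in user_agent:
        reasons.append("user agent contains 'HeadlessChrome'")
    # Browsers under WebDriver control expose navigator.webdriver === true
    if webdriver_flag is True:
        reasons.append("navigator.webdriver is true")
    return reasons


if __name__ == "__main__":
    # Inside parseEkoru you would read these from the live driver instead:
    #   ua = driver.execute_script("return navigator.userAgent")
    #   flag = driver.execute_script("return navigator.webdriver")
    ua = ("Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
          "(KHTML, like Gecko) HeadlessChrome/90.0.4430.93 Safari/537.36")
    print(looks_automated(ua, True))
```

An empty list only means these two particular signals are absent; a site can still use other checks.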