For example, I have a list (it can also be a numpy.array or anything else, I just wonder a faster way and I don't care the data type) ['a','b','c','d'], and I want to get ['ab','bc','cd'].

Of course there's a simple solution such as:

letters = list('abcdefghijk')

my_list = [letters[i:i+2] for i in range(len(letters)-1)]

And the my_list is :

[['a', 'b'],

['b', 'c'],

['c', 'd'],

['d', 'e'],

['e', 'f'],

['f', 'g'],

['g', 'h'],

['h', 'i'],

['i', 'j'],

['j', 'k']]

But I wonder is there any faster way to do this through numpy or something else, since I want to do this with a relatively big data, and therefore a simple for loop may be costly.

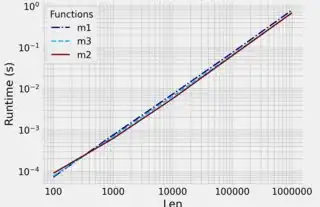

UPDATE Let me sumarrize the answers below now. Very much thanks to the answers so far from @Ehsan and @Andy L. I've done a very simple test of all their solutions with a relatively bigger data through the codes below:

import time

import numpy as np

from numba import njit

import matplotlib.pyplot as plt

from numpy.lib.stride_tricks import as_strided

def m1(letters):

return [letters[i]+letters[i+1] for i in range(len(letters)-1)]

@njit

def m2(letters):

return [letters[i]+letters[i+1] for i in range(len(letters)-1)]

def m3(letters):

return np.char.add(letters[:-1], letters[1:])

def m4(letters):

n = len(letters) - 1

m = np.array(letters[0]).itemsize

arr = as_strided(letters, shape=(n, 2), strides=(m, m))

return arr

def test_time(testfunc,args):

start = time.time()

testfunc(*args)

return time.time() - start

# test

ns = [10000, 100000, 1000000, 10000000]

timecost = []

for n in ns:

input_=np.random.choice(list('abcdefghijklmnopqrstuvwxyz'), size=n)

cost = [test_time(testfunc, [input_]) for testfunc in [m1, m2, m3,m4]]

timecost.append(cost)

# result plot

timecost = np.array(timecost)

labels = ['normal','numba.njit','numpy.char.add','numpy.lib.stride_tricks.as_strided']

for i in range(timecost.shape[-1]):

plt.plot(ns, timecost[:, i], label=labels[i])

plt.legend()

plt.savefig('result.png')

The result shows that with np.char.add and numba, the performance does work better than the normal method on bigger data, but the performance of stride_tricks is even better: the time cost decreases more than two orders of magnitudes.