I have a big data frame which contains 37,000,000 rows and a dictionary. For each key in the dictionary, the first column of the data frame is searched and if any row is equal to the key, the value of the key will be added to the second column of the data frame. Here is a small example:

dictionary = {'a':'asfg', 'b': 'jkh', 'c': 'jhjf' }

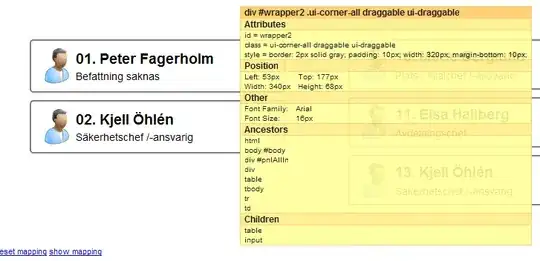

The data frame:

The resulted data frame:

The following for-loop takes a very long time to fill the second column of the data frame.

for key in dictionary:

df.loc[df[1] == key, ‘column2’] = dictionary[key]

Next, I tried setting an index to the first column of the data frame to speed up the searching, but it is not fast enough yet:

df1 = df.set_index([1])

for key in dictionary:

df1.loc[key, ‘column2’] = dictionary[key]

Finally, I used multiprocessing to speed up this process, but it is not optimal yet. Is there any faster way to do this?