While setting up a collaborative filtering model on the MovieLens 100k dataset in a Jupyter notebook, I'd like to show a dense crosstab of users vs. movies. I figure the best way to do this is to show the n most frequent users against the m most-rated movies.

If you'd like to run it in a notebook, you should be able to copy/paste this after installing the fastai2 dependencies (its star imports expose pandas, among other libraries):

    from fastai2.collab import *
    from fastai2.tabular.all import *

    path = untar_data(URLs.ML_100k)

    # load the ratings from csv
    ratings = pd.read_csv(path/'u.data', delimiter='\t', header=None,
                          names=['user','movie','rating','timestamp'])

    # show a sample of the format
    ratings.head(10)

    # slice the most frequent n=20 users and movies
    most_frequent_users = list(ratings.user.value_counts()[:20])
    most_rated_movies = list(ratings.movie.value_counts()[:20])
    denser_ratings = ratings[ratings.user.isin(most_frequent_users)]
    denser_movies = ratings[ratings.movie.isin(most_rated_movies)]

    # crosstab the most frequent users and movies, showing the ratings
    pd.crosstab(denser_ratings.user, denser_movies.movie, values=ratings.rating, aggfunc='mean').fillna('-')

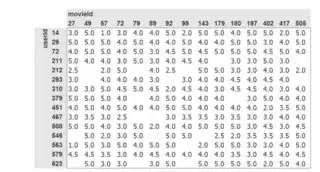

The desired output is much denser than what I've produced. My example seems to be a little better than random, but not by much. I have two hypotheses for why it's not as dense as I want:

- The most frequent users might not always rate the most rated movies.

- My code has a bug that makes it index into the dataframe differently from what I think I'm doing.

Please let me know if you see an error in how I'm selecting the most frequent users and movies, or grabbing the matches with isin.
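One thing that may be worth checking for the second hypothesis: `value_counts()` returns a Series whose index holds the ids and whose values hold the counts, so wrapping a slice of it in `list(...)` yields counts rather than user or movie ids. The sketch below illustrates this on a small made-up frame (the toy data and the variable names `as_in_question` and `top_user_ids` are assumptions for illustration, not part of the MovieLens data).

```python
import pandas as pd

# Toy ratings; user ids are deliberately far from their rating counts.
ratings = pd.DataFrame({
    "user":   [101, 101, 101, 202, 202, 303],
    "movie":  [1, 2, 3, 1, 2, 1],
    "rating": [5, 4, 3, 4, 2, 1],
})

counts = ratings.user.value_counts()  # index = user id, values = number of ratings

# list() over a Series iterates its values, which here are the counts.
as_in_question = list(counts.head(2))

# The ids themselves live in the index of the value_counts() result.
top_user_ids = list(counts.index[:2])

print(as_in_question)  # counts, not ids
print(top_user_ids)    # the two most active user ids
```

If this is what is happening, `isin` would be matching ids against counts, which could explain a result that looks only slightly better than random.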

If that is correct (or really, regardless), I'd like to know how to build a denser set of users and movies to crosstab. The next approach I've thought of is to take the most-rated movies and then select the most frequent users from that filtered dataframe instead of from the whole dataset. But I'm unsure how to do that: should I search for the most frequent users across the top m movies, or is there a more general way to find the n×m set of most-linked users and movies?
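A minimal sketch of the nested approach described above, assuming ids are taken from the `value_counts()` index and that both filters are applied to the same frame before pivoting. The helper name `dense_crosstab` and the toy data are hypothetical; this is a greedy heuristic and is not guaranteed to find the densest possible n×m block.

```python
import pandas as pd

def dense_crosstab(ratings, n_users=20, n_movies=20):
    """Hypothetical helper: top movies first, then top users within them."""
    # Ids of the most-rated movies (the ids are in the index).
    top_movies = ratings.movie.value_counts().index[:n_movies]
    subset = ratings[ratings.movie.isin(top_movies)]

    # Most active users *within* the top-movie subset, not globally.
    top_users = subset.user.value_counts().index[:n_users]
    dense = subset[subset.user.isin(top_users)]

    # One frame, one filter chain, then pivot to users x movies.
    return dense.pivot_table(index="user", columns="movie",
                             values="rating", aggfunc="mean")

# Toy data: user 3 has the most ratings overall but mostly on rarely rated movies.
ratings = pd.DataFrame(
    [(1, 10, 5), (1, 20, 4), (2, 10, 3), (2, 20, 5),
     (3, 10, 2), (3, 30, 4), (3, 40, 1), (4, 20, 3)],
    columns=["user", "movie", "rating"],
)

table = dense_crosstab(ratings, n_users=2, n_movies=2)
print(table)
```

In the toy data, user 3 is the most active user globally but is dropped because most of those ratings fall outside the top movies, which is the effect the first hypothesis describes. On the real frame, something like `dense_crosstab(ratings).fillna('-')` should give a display comparable to the original crosstab.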

I will post my code if I solve it before better answers arrive.