I'm testing C code on Linux with large arrays to measure thread performance. The application scales very well as the number of threads increases up to the maximum number of hardware threads (8 on the Intel 4770, a 4-core CPU with Hyper-Threading), but only for the pure math part of my code.

If I add the printf loop for the resulting arrays, the run time grows from a few seconds to several minutes, even when the output is redirected to a file, although printing those arrays should add only a few seconds.

The code:

(gcc 7.5.0-Ubuntu 18.04)

without printf loop:

gcc -O3 -m64 exp_multi.c -pthread -lm

with printf loop:

gcc -DPRINT_ARRAY -O3 -m64 exp_multi.c -pthread -lm

#include <stdio.h>

#include <stdlib.h>

#include <math.h>

#include <pthread.h>

#define MAXSIZE 1000000

#define REIT 100000

#define XXX -5

#define num_threads 8

static double xv[MAXSIZE];

static double yv[MAXSIZE];

/* gcc -O3 -m64 exp_multi.c -pthread -lm */

void* run(void *received_Val){

int single_val = *((int *) received_Val);

int r;

int i;

double p;

for (r = 0; r < REIT; r++) {

p = XXX + 0.00001*single_val*MAXSIZE/num_threads;

for (i = single_val*MAXSIZE/num_threads; i < (single_val+1)*MAXSIZE/num_threads; i++) {

xv[i]=p;

yv[i]=exp(p);

p += 0.00001;

}

}

return 0;

}

int main(){

int i;

pthread_t tid[num_threads];

for (i=0;i<num_threads;i++){

int *arg = malloc(sizeof(*arg));

if ( arg == NULL ) {

fprintf(stderr, "Couldn't allocate memory for thread arg.\n");

exit(1);

}

*arg = i;

pthread_create(&(tid[i]), NULL, run, arg);

}

for(i=0; i<num_threads; i++)

{

pthread_join(tid[i], NULL);

}

#ifdef PRINT_ARRAY

for (i=0;i<MAXSIZE;i++){

printf("x=%.20lf, e^x=%.20lf\n",xv[i],yv[i]);

}

#endif

return 0;

}

The malloc before pthread_create is used to pass an integer as the last argument, as suggested in this post.

Without success, I tried clang, adding a free(tid) call, avoiding malloc, reversing the loops, using only one one-dimensional array, and a single-threaded version without pthread.

EDIT2: I think the exp function is CPU-intensive and is probably limited by the per-core cache or the SIMD resources of the processor generation. The following sample code is based on licensed code posted on Stack Overflow.

This code runs fast with or without the printf loop. The exp function from math.h was improved a few years ago and can be around 40x faster, at least on the Intel 4770 (Haswell); this link is a well-known test program comparing the math library with SSE2. The speed of exp from the math library should now be close to that of an AVX2 algorithm optimized for float with 8 parallel calculations.

Test results, expf vs. other SSE2 algorithms (exp_ps):

| Function | Million vector evaluations/s | Cycles/value |
|---|---|---|
| sinf | 55.5 | 12 |
| cosf | 57.3 | 11 |
| sincos (x87) | 9.1 | 71 |
| expf | 61.4 | 11 |
| logf | 55.6 | 12 |
| cephes_sinf | 52.5 | 12 |
| cephes_cosf | 41.9 | 15 |
| cephes_expf | 18.3 | 35 |
| cephes_logf | 20.2 | 32 |
| sin_ps | 54.1 | 12 |
| cos_ps | 54.8 | 12 |
| sincos_ps | 54.6 | 12 |
| exp_ps | 42.6 | 15 |
| log_ps | 41.0 | 16 |

/* Performance test exp(x) algorithm

based on AVX implementation of Giovanni Garberoglio

Copyright (C) 2020 Antonio R.

AVX implementation of exp:

Modified code from this source: https://github.com/reyoung/avx_mathfun

Based on "sse_mathfun.h", by Julien Pommier

http://gruntthepeon.free.fr/ssemath/

Copyright (C) 2012 Giovanni Garberoglio

Interdisciplinary Laboratory for Computational Science (LISC)

Fondazione Bruno Kessler and University of Trento

via Sommarive, 18

I-38123 Trento (Italy)

This software is provided 'as-is', without any express or implied

warranty. In no event will the authors be held liable for any damages

arising from the use of this software.

Permission is granted to anyone to use this software for any purpose,

including commercial applications, and to alter it and redistribute it

freely, subject to the following restrictions:

1. The origin of this software must not be misrepresented; you must not

claim that you wrote the original software. If you use this software

in a product, an acknowledgment in the product documentation would be

appreciated but is not required.

2. Altered source versions must be plainly marked as such, and must not be

misrepresented as being the original software.

3. This notice may not be removed or altered from any source distribution.

(this is the zlib license)

*/

/* gcc -O3 -m64 -Wall -mavx2 -march=haswell expc.c -lm */

#include <stdio.h>

#include <immintrin.h>

#include <math.h>

#define MAXSIZE 1000000

#define REIT 100000

#define XXX -5

__m256 exp256_ps(__m256 x) {

/*

To increase the compatibility across different compilers the original code is

converted to plain AVX2 intrinsics code without ingenious macro's,

gcc style alignment attributes etc.

Moreover, the part "express exp(x) as exp(g + n*log(2))" has been significantly simplified.

This modified code is not thoroughly tested!

*/

__m256 exp_hi = _mm256_set1_ps(88.3762626647949f);

__m256 exp_lo = _mm256_set1_ps(-88.3762626647949f);

__m256 cephes_LOG2EF = _mm256_set1_ps(1.44269504088896341f);

__m256 inv_LOG2EF = _mm256_set1_ps(0.693147180559945f);

__m256 cephes_exp_p0 = _mm256_set1_ps(1.9875691500E-4);

__m256 cephes_exp_p1 = _mm256_set1_ps(1.3981999507E-3);

__m256 cephes_exp_p2 = _mm256_set1_ps(8.3334519073E-3);

__m256 cephes_exp_p3 = _mm256_set1_ps(4.1665795894E-2);

__m256 cephes_exp_p4 = _mm256_set1_ps(1.6666665459E-1);

__m256 cephes_exp_p5 = _mm256_set1_ps(5.0000001201E-1);

__m256 fx;

__m256i imm0;

__m256 one = _mm256_set1_ps(1.0f);

x = _mm256_min_ps(x, exp_hi);

x = _mm256_max_ps(x, exp_lo);

/* express exp(x) as exp(g + n*log(2)) */

fx = _mm256_mul_ps(x, cephes_LOG2EF);

fx = _mm256_round_ps(fx, _MM_FROUND_TO_NEAREST_INT |_MM_FROUND_NO_EXC);

__m256 z = _mm256_mul_ps(fx, inv_LOG2EF);

x = _mm256_sub_ps(x, z);

z = _mm256_mul_ps(x,x);

__m256 y = cephes_exp_p0;

y = _mm256_mul_ps(y, x);

y = _mm256_add_ps(y, cephes_exp_p1);

y = _mm256_mul_ps(y, x);

y = _mm256_add_ps(y, cephes_exp_p2);

y = _mm256_mul_ps(y, x);

y = _mm256_add_ps(y, cephes_exp_p3);

y = _mm256_mul_ps(y, x);

y = _mm256_add_ps(y, cephes_exp_p4);

y = _mm256_mul_ps(y, x);

y = _mm256_add_ps(y, cephes_exp_p5);

y = _mm256_mul_ps(y, z);

y = _mm256_add_ps(y, x);

y = _mm256_add_ps(y, one);

/* build 2^n */

imm0 = _mm256_cvttps_epi32(fx);

imm0 = _mm256_add_epi32(imm0, _mm256_set1_epi32(0x7f));

imm0 = _mm256_slli_epi32(imm0, 23);

__m256 pow2n = _mm256_castsi256_ps(imm0);

y = _mm256_mul_ps(y, pow2n);

return y;

}

int main(){

int r;

int i;

float p;

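static float xv[MAXSIZE] __attribute__((aligned(32))); /* _mm256_store_ps requires 32-byte aligned addresses */

static float yv[MAXSIZE] __attribute__((aligned(32)));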

float *xp;

float *yp;

for (r = 0; r < REIT; r++) {

p = XXX;

xp = xv;

yp = yv;

for (i = 0; i < MAXSIZE; i += 8) {

__m256 x = _mm256_setr_ps(p, p + 0.00001, p + 0.00002, p + 0.00003, p + 0.00004, p + 0.00005, p + 0.00006, p + 0.00007);

__m256 y = exp256_ps(x);

_mm256_store_ps(xp,x);

_mm256_store_ps(yp,y);

xp += 8;

yp += 8;

p += 0.00008;

}

}

for (i=0;i<MAXSIZE;i++){

printf("x=%.20f, e^x=%.20f\n",xv[i],yv[i]);

}

return 0;

}

For comparison, here is the same example using expf(x) from the math library (single thread, float).

#include <stdio.h>

#include <math.h>

#define MAXSIZE 1000000

#define REIT 100000

#define XXX -5

/* gcc -O3 -m64 exp_st.c -lm */

int main(){

int r;

int i;

float p;

static float xv[MAXSIZE];

static float yv[MAXSIZE];

for (r = 0; r < REIT; r++) {

p = XXX;

for (i = 0; i < MAXSIZE; i++) {

xv[i]=p;

yv[i]=expf(p);

p += 0.00001;

}

}

for (i=0;i<MAXSIZE;i++){

printf("x=%.20f, e^x=%.20f\n",xv[i],yv[i]);

}

return 0;

}

SOLUTION: As Andreas Wenzel pointed out, gcc is smart enough to see that the arrays are never read when the printf loop is absent, so it decides that the results do not actually need to be written and optimizes those writes (and the exp calls that feed them) away. I repeated the performance tests with this in mind (in the earlier tests I made several mistakes and wrong assumptions), and the results are now clearer: exp (double argument) and expf (float argument, more than 2x faster than exp) have been improved, but they are not as fast as the AVX2 algorithm (8 floats in parallel), which is around 6x faster than the SSE2 algorithm (4 floats in parallel). Here are some results; the scaling is as expected for an Intel Hyper-Threading CPU, except for the SSE2 algorithm:

exp (double arg) single thread: 18 min 46 sec

exp (double arg) 4 threads: 5 min 4 sec

exp (double arg) 8 threads: 4 min 28 sec

expf (float arg) single thread: 7 min 32 sec

expf (float arg) 4 threads: 1 min 58 sec

expf (float arg) 8 threads: 1 min 41 sec

Relative error**:

| i | x | y = expf(x) | exp_dbl (double-precision exp) | rel_err (relative error) |
|---|---|---|---|---|
| 0 | -5.000000000e+00 | 6.737946998e-03 | 6.737946999e-03 | -1.124224480e-10 |
| 124000 | -3.758316040e+00 | 2.332298271e-02 | 2.332298229e-02 | 1.803005727e-08 |
| 248000 | -2.518329620e+00 | 8.059411496e-02 | 8.059411715e-02 | -2.716802480e-08 |
| 372000 | -1.278343201e+00 | 2.784983218e-01 | 2.784983343e-01 | -4.490403948e-08 |
| 496000 | -3.867173195e-02 | 9.620664716e-01 | 9.620664730e-01 | -1.481617428e-09 |
| 620000 | 1.201261759e+00 | 3.324308872e+00 | 3.324308753e+00 | 3.571995830e-08 |
| 744000 | 2.441616058e+00 | 1.149159718e+01 | 1.149159684e+01 | 2.955980805e-08 |
| 868000 | 3.681602478e+00 | 3.970997620e+01 | 3.970997748e+01 | -3.232306688e-08 |
| 992000 | 4.921588898e+00 | 1.372204742e+02 | 1.372204694e+02 | 3.563072184e-08 |

*SSE2 algorithm by Julien Pommier: a 6.8x speed-up from one thread to 8 threads. My performance test code applies aligned(16) to a typedef'd union of a vector and a 4-float array that is passed to the library, instead of aligning the whole float array. This may cause lower performance. At least for other AVX2 code, the multithreaded speed-up also looks good for Intel Hyper-Threading, but the absolute speed is lower, with times 1.5x to 2.5x longer. The SSE2 code could perhaps be sped up with better array alignment, which I was not able to achieve:

exp_ps (x4 parallel float arg) single thread: 12 min 7 sec

exp_ps (x4 parallel float arg) 4 threads: 3 min 10 sec

exp_ps (x4 parallel float arg) 8 threads: 1 min 46 sec

Relative error**:

| i | x | y = exp_ps(x) | exp_dbl (double-precision exp) | rel_err (relative error) |
|---|---|---|---|---|
| 0 | -5.000000000e+00 | 6.737946998e-03 | 6.737946999e-03 | -1.124224480e-10 |
| 124000 | -3.758316040e+00 | 2.332298271e-02 | 2.332298229e-02 | 1.803005727e-08 |
| 248000 | -2.518329620e+00 | 8.059412241e-02 | 8.059411715e-02 | 6.527768787e-08 |
| 372000 | -1.278343201e+00 | 2.784983218e-01 | 2.784983343e-01 | -4.490403948e-08 |
| 496000 | -3.977407143e-02 | 9.610065222e-01 | 9.610065335e-01 | -1.174323454e-08 |
| 620000 | 1.200158238e+00 | 3.320642233e+00 | 3.320642334e+00 | -3.054731957e-08 |
| 744000 | 2.441616058e+00 | 1.149159622e+01 | 1.149159684e+01 | -5.342903415e-08 |
| 868000 | 3.681602478e+00 | 3.970997620e+01 | 3.970997748e+01 | -3.232306688e-08 |
| 992000 | 4.921588898e+00 | 1.372204742e+02 | 1.372204694e+02 | 3.563072184e-08 |

AVX2 algorithm (x8 parallel float arg) single thread: 1 min 45 sec

AVX2 algorithm (x8 parallel float arg) 4 threads: 28 sec

AVX2 algorithm (x8 parallel float arg) 8 threads: 27 sec

Relative error**:

| i | x | y = exp256_ps(x) | exp_dbl (double-precision exp) | rel_err (relative error) |
|---|---|---|---|---|
| 0 | -5.000000000e+00 | 6.737946998e-03 | 6.737946999e-03 | -1.124224480e-10 |
| 124000 | -3.758316040e+00 | 2.332298271e-02 | 2.332298229e-02 | 1.803005727e-08 |
| 248000 | -2.516632080e+00 | 8.073104918e-02 | 8.073104510e-02 | 5.057888540e-08 |
| 372000 | -1.279417157e+00 | 2.781994045e-01 | 2.781993997e-01 | 1.705288467e-08 |
| 496000 | -3.954863176e-02 | 9.612231851e-01 | 9.612232069e-01 | -2.269774967e-08 |
| 620000 | 1.199879169e+00 | 3.319715738e+00 | 3.319715775e+00 | -1.119642824e-08 |
| 744000 | 2.440370798e+00 | 1.147729492e+01 | 1.147729571e+01 | -6.896860199e-08 |
| 868000 | 3.681602478e+00 | 3.970997620e+01 | 3.970997748e+01 | -3.232306688e-08 |
| 992000 | 4.923286438e+00 | 1.374535980e+02 | 1.374536045e+02 | -4.676466368e-08 |

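**All three have relative errors of the same order (below about 7e-8 at the sampled points). The SSE2 and AVX2 codes use essentially the same algorithm, and where their inputs coincide (i = 0, 124000, 868000) the errors are identical; the library expf gives slightly different results at some identical inputs (i = 248000, 744000), so it does not appear to use exactly the same algorithm.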

Source code of the multithreaded AVX2 algorithm:

/* Performance test of a multithreaded exp(x) algorithm

based on AVX implementation of Giovanni Garberoglio

Copyright (C) 2020 Antonio R.

AVX implementation of exp:

Modified code from this source: https://github.com/reyoung/avx_mathfun

Based on "sse_mathfun.h", by Julien Pommier

http://gruntthepeon.free.fr/ssemath/

Copyright (C) 2012 Giovanni Garberoglio

Interdisciplinary Laboratory for Computational Science (LISC)

Fondazione Bruno Kessler and University of Trento

via Sommarive, 18

I-38123 Trento (Italy)

This software is provided 'as-is', without any express or implied

warranty. In no event will the authors be held liable for any damages

arising from the use of this software.

Permission is granted to anyone to use this software for any purpose,

including commercial applications, and to alter it and redistribute it

freely, subject to the following restrictions:

1. The origin of this software must not be misrepresented; you must not

claim that you wrote the original software. If you use this software

in a product, an acknowledgment in the product documentation would be

appreciated but is not required.

2. Altered source versions must be plainly marked as such, and must not be

misrepresented as being the original software.

3. This notice may not be removed or altered from any source distribution.

(this is the zlib license)

*/

/* gcc -O3 -m64 -mavx2 -march=haswell expc_multi.c -pthread -lm */

#include <stdio.h>

#include <stdlib.h>

#include <immintrin.h>

#include <math.h>

#include <pthread.h>

#define MAXSIZE 1000000

#define REIT 100000

#define XXX -5

#define num_threads 4

typedef float FLOAT32[MAXSIZE] __attribute__((aligned(32))); /* _mm256_store_ps requires 32-byte aligned addresses */

static FLOAT32 xv;

static FLOAT32 yv;

__m256 exp256_ps(__m256 x) {

/*

To increase the compatibility across different compilers the original code is

converted to plain AVX2 intrinsics code without ingenious macro's,

gcc style alignment attributes etc.

Moreover, the part "express exp(x) as exp(g + n*log(2))" has been significantly simplified.

This modified code is not thoroughly tested!

*/

__m256 exp_hi = _mm256_set1_ps(88.3762626647949f);

__m256 exp_lo = _mm256_set1_ps(-88.3762626647949f);

__m256 cephes_LOG2EF = _mm256_set1_ps(1.44269504088896341f);

__m256 inv_LOG2EF = _mm256_set1_ps(0.693147180559945f);

__m256 cephes_exp_p0 = _mm256_set1_ps(1.9875691500E-4);

__m256 cephes_exp_p1 = _mm256_set1_ps(1.3981999507E-3);

__m256 cephes_exp_p2 = _mm256_set1_ps(8.3334519073E-3);

__m256 cephes_exp_p3 = _mm256_set1_ps(4.1665795894E-2);

__m256 cephes_exp_p4 = _mm256_set1_ps(1.6666665459E-1);

__m256 cephes_exp_p5 = _mm256_set1_ps(5.0000001201E-1);

__m256 fx;

__m256i imm0;

__m256 one = _mm256_set1_ps(1.0f);

x = _mm256_min_ps(x, exp_hi);

x = _mm256_max_ps(x, exp_lo);

/* express exp(x) as exp(g + n*log(2)) */

fx = _mm256_mul_ps(x, cephes_LOG2EF);

fx = _mm256_round_ps(fx, _MM_FROUND_TO_NEAREST_INT |_MM_FROUND_NO_EXC);

__m256 z = _mm256_mul_ps(fx, inv_LOG2EF);

x = _mm256_sub_ps(x, z);

z = _mm256_mul_ps(x,x);

__m256 y = cephes_exp_p0;

y = _mm256_mul_ps(y, x);

y = _mm256_add_ps(y, cephes_exp_p1);

y = _mm256_mul_ps(y, x);

y = _mm256_add_ps(y, cephes_exp_p2);

y = _mm256_mul_ps(y, x);

y = _mm256_add_ps(y, cephes_exp_p3);

y = _mm256_mul_ps(y, x);

y = _mm256_add_ps(y, cephes_exp_p4);

y = _mm256_mul_ps(y, x);

y = _mm256_add_ps(y, cephes_exp_p5);

y = _mm256_mul_ps(y, z);

y = _mm256_add_ps(y, x);

y = _mm256_add_ps(y, one);

/* build 2^n */

imm0 = _mm256_cvttps_epi32(fx);

imm0 = _mm256_add_epi32(imm0, _mm256_set1_epi32(0x7f));

imm0 = _mm256_slli_epi32(imm0, 23);

__m256 pow2n = _mm256_castsi256_ps(imm0);

y = _mm256_mul_ps(y, pow2n);

return y;

}

void* run(void *received_Val){

int single_val = *((int *) received_Val);

int r;

int i;

float p;

float *xp;

float *yp;

for (r = 0; r < REIT; r++) {

p = XXX + 0.00001*single_val*MAXSIZE/num_threads;

xp = xv + single_val*MAXSIZE/num_threads;

yp = yv + single_val*MAXSIZE/num_threads;

for (i = single_val*MAXSIZE/num_threads; i < (single_val+1)*MAXSIZE/num_threads; i += 8) {

__m256 x = _mm256_setr_ps(p, p + 0.00001, p + 0.00002, p + 0.00003, p + 0.00004, p + 0.00005, p + 0.00006, p + 0.00007);

__m256 y = exp256_ps(x);

_mm256_store_ps(xp,x);

_mm256_store_ps(yp,y);

xp += 8;

yp += 8;

p += 0.00008;

}

}

return 0;

}

int main(){

int i;

pthread_t tid[num_threads];

for (i=0;i<num_threads;i++){

int *arg = malloc(sizeof(*arg));

if ( arg == NULL ) {

fprintf(stderr, "Couldn't allocate memory for thread arg.\n");

exit(1);

}

*arg = i;

pthread_create(&(tid[i]), NULL, run, arg);

}

for(i=0; i<num_threads; i++)

{

pthread_join(tid[i], NULL);

}

for (i=0;i<MAXSIZE;i++){

printf("x=%.20f, e^x=%.20f\n",xv[i],yv[i]);

}

return 0;

}

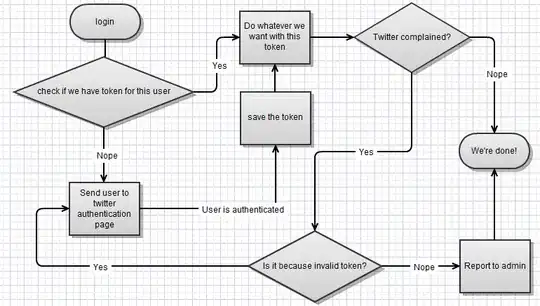

Charts overview:

exp (double arg) without the printf loop: not real performance. As Andreas Wenzel found, gcc doesn't calculate exp(x) when the results are never printed; even the float version is much slower because it compiles to a few different assembly instructions. Still, the graph may be useful for an assembly algorithm that only uses the low-level CPU caches/registers.


expf (float arg): real performance, i.e. with the printf loop.


AVX2 algorithm, the best performance.
