I am learning C++ and I was toying with linked lists.

Consider the following extremely simple implementation:

static std::size_t n_constructors {0};

template <typename T>

class list {

public:

list(T n) : item{n} { n_constructors++; }

list(T n, list* l) : item{n}, next {l} { n_constructors++; }

list(const list&);

~list() { delete next; }

void insert(T n);

void print() const;

list reverse() const;

list reverse_iter() const;

private:

T item;

list* next {nullptr};

};

template <typename T>

list<T>::list(const list& src)

:

item {src.item}

{

n_constructors++;

if (src.next) {

next = new list {*src.next};

}

}

template <typename T>

void list<T>::insert(T n) {

if (next == nullptr)

next = new list {n};

else

next->insert(n);

}

template <typename T>

void list<T>::print() const {

std::cout << item << " ";

if (next)

next->print();

else

std::cout << std::endl;

}

template <typename T>

list<T> list<T>::reverse() const {

if (next) {

auto s = next->reverse();

s.insert(item);

return s;

} else {

return list {item};

}

}

template <typename T>

list<T> list<T>::reverse_iter() const {

auto sptr = new list<T> {item};

auto prev = next;

auto link = next;

while (link) {

auto tmp = new list<T>(link->item, sptr);

link = link->next;

prev = link;

sptr = tmp;

}

return *sptr;

}

As you can see, I wrote two reverse functions: an iterative one and a recursive one. To test them out, I tried this:

int main() {

list<int> s{1};

for (int i = 2; i < 10000; ++i)

s.insert(i);

std::cout << "initial constructor\n";

std::cout << "called " << n_constructors << " constructors.\n";

auto t0 = std::chrono::high_resolution_clock::now();

auto s2 = s.reverse_iter();

auto t = std::chrono::high_resolution_clock::now();

std::cout << "iterative reverse\n";

std::cout << std::chrono::duration_cast<std::chrono::milliseconds>(t - t0).count() <<" ms\n";

std::cout << "called " << n_constructors << " constructors.\n";

t0 = std::chrono::high_resolution_clock::now();

auto s3 = s.reverse();

t = std::chrono::high_resolution_clock::now();

std::cout << "recursive reverse\n";

std::cout << std::chrono::duration_cast<std::chrono::milliseconds>(t - t0).count() <<" ms\n";

std::cout << "called " << n_constructors << " constructors.\n";

}

here's a typical result of my benchmark (compiled with g++ 9.3.0, optimiser turned on with -O2):

initial constructor

called 9999 constructors.

iterative reverse

0 ms

called 29997 constructors.

recursive reverse

1692 ms

called 50034995 constructors.

The difference in performance is staggering. I guess that the problem with the recursive version is the much larger number of allocations, so I implement a move constructor:

template <typename T>

list<T>::list(list&& src)

:

item {src.item},

next {src.next}

{

n_constructors++;

src.next = nullptr;

src.item = 0;

}

This is the result:

initial constructor

called 9999 constructors.

iterative reverse

0 ms

called 29997 constructors.

recursive reverse

90 ms

called 49994 constructors.

Very good, now at least the recursive function does just as many allocations as the iterative function. The performance difference is however still large. I try again with 100 000 elements:

initial constructor

called 99999 constructors.

iterative reverse

7 ms

called 299997 constructors.

recursive reverse

9458 ms

called 499994 constructors.

In my opinion the recursive reverse is much more readable and elegant than the iterative one.

Why is the recursive version so much slower? Is there something I can do to make it faster?

Edit

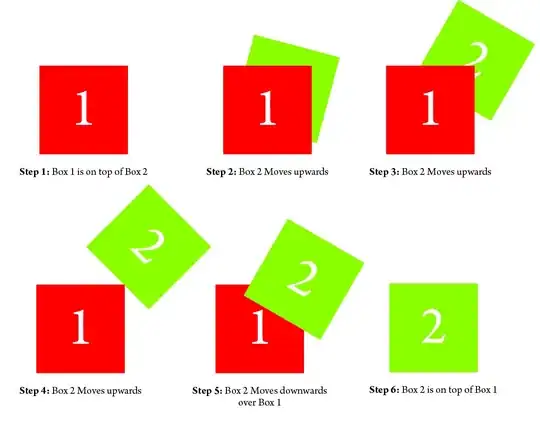

I add the plots with the empirical asymptotic order of growth for the two algorithms, as recommended by Will Ness in the comments.