I want to plot loss curves for my training and validation sets the same way Keras does, but using scikit-learn. I have chosen the Concrete Compressive Strength dataset, which is a regression problem; it is available at:

http://archive.ics.uci.edu/ml/machine-learning-databases/concrete/compressive/

So, I have converted the data to CSV and the first version of my program is the following:

Model 1

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.neural_network import MLPRegressor

df = pd.read_csv("Concrete_Data.csv")

# Shuffle, then split 80% / 10% / 10% into train, validation and test sets
train, validate, test = np.split(df.sample(frac=1), [int(.8 * len(df)), int(.9 * len(df))])

Xtrain = train.drop(["ConcreteCompStrength"], axis="columns")
ytrain = train["ConcreteCompStrength"]
Xval = validate.drop(["ConcreteCompStrength"], axis="columns")
yval = validate["ConcreteCompStrength"]

mlp = MLPRegressor(activation="relu", max_iter=5000, solver="adam", random_state=2)

mlp.fit(Xtrain, ytrain)
plt.plot(mlp.loss_curve_, label="train")

mlp.fit(Xval, yval)  # doubt
plt.plot(mlp.loss_curve_, label="validation")  # doubt

plt.legend()
```

The resulting graph is the following:

In this model, I doubt whether the marked lines (`# doubt`) are correct: as far as I know, the validation and test sets should be kept apart from training, so calling `fit` on the validation set may not be correct. The score I got is 0.95.
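For context on the marked lines: with the default `warm_start=False`, `MLPRegressor.fit` discards any previous solution and trains from a fresh initialization, so after the second call `loss_curve_` describes a new training run on the validation data rather than a validation curve for the first model. A minimal sketch of the alternative, which only evaluates on the validation set, is below. It assumes synthetic data from `make_regression` as a stand-in for `Concrete_Data.csv` and uses `train_test_split` instead of `np.split` purely for brevity.

```python
from sklearn.datasets import make_regression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPRegressor

# Synthetic stand-in for Concrete_Data.csv (assumption: 8 numeric features, 1 target)
X, y = make_regression(n_samples=500, n_features=8, noise=10.0, random_state=0)
Xtrain, Xval, ytrain, yval = train_test_split(X, y, test_size=0.2, random_state=0)

mlp = MLPRegressor(activation="relu", max_iter=5000, solver="adam", random_state=2)
mlp.fit(Xtrain, ytrain)              # the only fit: weights are learned from training data
train_curve = list(mlp.loss_curve_)  # training loss, one value per epoch

# Evaluate on the validation set without refitting
val_mse = mean_squared_error(yval, mlp.predict(Xval))
val_r2 = mlp.score(Xval, yval)       # MLPRegressor.score returns R^2
print(f"epochs: {len(train_curve)}, validation MSE: {val_mse:.2f}, validation R^2: {val_r2:.3f}")
```

This gives a single validation number per trained model, which is enough to compare hyperparameter settings, but not a per-epoch curve.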

Model 2

In this model I try to use the validation scores instead, as follows:

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.neural_network import MLPRegressor

df = pd.read_csv("Concrete_Data.csv")

# Shuffle, then split 80% / 10% / 10% into train, validation and test sets
train, validate, test = np.split(df.sample(frac=1), [int(.8 * len(df)), int(.9 * len(df))])

Xtrain = train.drop(["ConcreteCompStrength"], axis="columns")
ytrain = train["ConcreteCompStrength"]
Xval = validate.drop(["ConcreteCompStrength"], axis="columns")
yval = validate["ConcreteCompStrength"]

mlp = MLPRegressor(activation="relu", max_iter=5000, solver="adam", random_state=2, early_stopping=True)
mlp.fit(Xtrain, ytrain)

plt.plot(mlp.loss_curve_, label="train")
plt.plot(mlp.validation_scores_, label="validation")  # line changed

plt.legend()
```

For this model I had to set `early_stopping=True` so that `validation_scores_` is available to plot, but the resulting graph looks a little weird:

The score I get is 0.82. I read that this kind of graph occurs when the model finds it easier to predict the data in the validation set than in the training set. I believe it is because I am using `validation_scores_`, but I was not able to find any online reference about this particular attribute.
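Two details from the scikit-learn documentation may explain the odd Model 2 plot. `validation_scores_` holds the R² score (higher is better, at most 1) computed each epoch on an internal hold-out split taken from the data passed to `fit` (`validation_fraction`, 10% by default), not on `Xval`; `loss_curve_` holds the training loss (squared error, lower is better). Because the two have different scales and opposite directions, a sketch that plots them on separate y-axes with `twinx` is below, assuming synthetic data in place of the concrete CSV.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch also runs headless
import matplotlib.pyplot as plt
import numpy as np
from sklearn.datasets import make_regression
from sklearn.neural_network import MLPRegressor

# Synthetic stand-in for the concrete data (assumption)
X, y = make_regression(n_samples=500, n_features=8, noise=10.0, random_state=0)

mlp = MLPRegressor(activation="relu", max_iter=5000, solver="adam",
                   random_state=2, early_stopping=True)
mlp.fit(X, y)  # early stopping carves its own validation split out of X, y

fig, ax_loss = plt.subplots()
ax_loss.plot(mlp.loss_curve_, color="tab:blue", label="training loss")
ax_loss.set_xlabel("epoch")
ax_loss.set_ylabel("training loss (squared error)")

# Second y-axis for R^2, which is a score (higher is better), not a loss
ax_r2 = ax_loss.twinx()
ax_r2.plot(mlp.validation_scores_, color="tab:orange", label="validation R^2")
ax_r2.set_ylabel("validation R^2")

fig.legend(loc="upper center")
# Call plt.show() (with an interactive backend) or fig.savefig(...) to view the figure
```

With this layout the training loss going down while the validation R² goes up is the expected picture, not a sign that the validation data is easier to predict.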

What is the correct way to plot these loss curves in scikit-learn so that I can tune my hyperparameters?
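One way to get Keras-style per-epoch curves, sketched under assumptions: train with `partial_fit`, which for the `adam` and `sgd` solvers performs a single iteration over the given data per call and keeps the learned weights between calls, and after each epoch compute the same metric (MSE here) on the training and validation sets. The synthetic data, the `StandardScaler` preprocessing and the 200-epoch budget are illustrative choices, not taken from the question.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend; drop this line to plot interactively
import matplotlib.pyplot as plt
from sklearn.datasets import make_regression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPRegressor
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the concrete data (assumption)
X, y = make_regression(n_samples=1000, n_features=8, noise=10.0, random_state=0)
X_train, X_valid, y_train, y_valid = train_test_split(X, y, test_size=0.2, random_state=0)

# Scale features using statistics from the training split only
scaler = StandardScaler().fit(X_train)
X_train, X_valid = scaler.transform(X_train), scaler.transform(X_valid)

mlp = MLPRegressor(activation="relu", solver="adam", random_state=2)

n_epochs = 200
training_mse, validation_mse = [], []
for epoch in range(n_epochs):
    mlp.partial_fit(X_train, y_train)  # one epoch; weights carry over between calls
    training_mse.append(mean_squared_error(y_train, mlp.predict(X_train)))
    validation_mse.append(mean_squared_error(y_valid, mlp.predict(X_valid)))

plt.plot(training_mse, label="train")
plt.plot(validation_mse, label="validation")
plt.xlabel("epoch")
plt.ylabel("MSE")
plt.legend()
```

Both curves use the same metric on the same scale, so a widening gap between them can be read as overfitting. Note that `partial_fit` does not apply `early_stopping`; any stopping rule would have to be written into the loop.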

Update: I have rewritten the model as advised, like this:

```python
import matplotlib.pyplot as plt
from sklearn.metrics import mean_squared_error
from sklearn.neural_network import MLPRegressor

# X_train, Y_train, X_valid, Y_valid: training and validation features/targets
# (presumably Xtrain, ytrain, Xval, yval from the code above)
mlp = MLPRegressor(activation="relu", max_iter=1, solver="adam", random_state=2, early_stopping=True)

training_mse = []
validation_mse = []
epochs = 5000

for epoch in range(1, epochs):
    mlp.fit(X_train, Y_train)
    Y_pred = mlp.predict(X_train)
    curr_train_score = mean_squared_error(Y_train, Y_pred)  # training performance
    Y_pred = mlp.predict(X_valid)
    curr_valid_score = mean_squared_error(Y_valid, Y_pred)  # validation performance
    training_mse.append(curr_train_score)  # list of training perf to plot
    validation_mse.append(curr_valid_score)  # list of valid perf to plot

plt.plot(training_mse, label="train")
plt.plot(validation_mse, label="validation")
plt.legend()
```

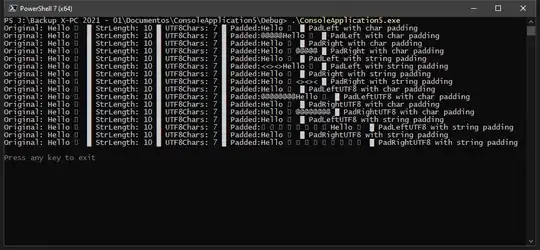

but the plot I obtained shows two flat lines:

It seems I am missing something here.
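The flat lines are consistent with how `fit` behaves: with the default `warm_start=False`, every call erases the previous solution and restarts from a fresh initialization, and because `random_state=2` is fixed, each loop iteration trains exactly the same one-epoch model. Setting `warm_start=True` makes `fit` reuse the previous weights as the starting point. The sketch below compares both settings on synthetic data (an assumption); `one_epoch_fits` is a hypothetical helper, `early_stopping` is left off for simplicity, and `ConvergenceWarning` is silenced because `max_iter=1` triggers it on purpose.

```python
import warnings

import numpy as np
from sklearn.datasets import make_regression
from sklearn.exceptions import ConvergenceWarning
from sklearn.metrics import mean_squared_error
from sklearn.neural_network import MLPRegressor
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the concrete data (assumption)
X, y = make_regression(n_samples=500, n_features=8, noise=10.0, random_state=0)
X = StandardScaler().fit_transform(X)


def one_epoch_fits(warm_start, n_epochs=100):
    """Hypothetical helper: call fit() with max_iter=1 repeatedly, recording training MSE."""
    mlp = MLPRegressor(activation="relu", max_iter=1, solver="adam",
                       random_state=2, warm_start=warm_start)
    mse = []
    with warnings.catch_warnings():
        warnings.simplefilter("ignore", category=ConvergenceWarning)
        for _ in range(n_epochs):
            mlp.fit(X, y)  # without warm_start, this restarts from the same initial weights
            mse.append(mean_squared_error(y, mlp.predict(X)))
    return mse


flat = one_epoch_fits(warm_start=False)  # every value is the same: a flat line
warm = one_epoch_fits(warm_start=True)   # weights carry over, so the MSE decreases
print(flat[0], flat[-1], warm[0], warm[-1])
```

Switching between the two settings is the only change, which isolates the restart behaviour as the cause of the flat curves.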