I am using scikit-learn to compute the average precision and ROC AUC of a classifier, and Yellowbrick to plot the ROC and precision-recall curves. The problem is that the two packages report different values for both metrics, and I do not know which one is correct.

The code used:

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from yellowbrick.classifier import ROCAUC
from yellowbrick.classifier import PrecisionRecallCurve
from sklearn.datasets import make_classification
from sklearn.metrics import roc_auc_score
from sklearn.metrics import average_precision_score

seed = 42

# generate the data
X, y = make_classification(n_samples=1000, n_features=2, n_redundant=0,
                           n_informative=2, random_state=seed)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# fit the classifier and compute the metrics with scikit-learn
clf_lr = LogisticRegression(random_state=seed)
clf_lr.fit(X_train, y_train)
y_pred = clf_lr.predict(X_test)
roc_auc = roc_auc_score(y_test, y_pred)
avg_precision = average_precision_score(y_test, y_pred)
print(f"ROC_AUC: {roc_auc}")
print(f"Average_precision: {avg_precision}")
print('='*20)

# visualizations with Yellowbrick
viz3 = ROCAUC(LogisticRegression(random_state=seed))
viz3.fit(X_train, y_train)
viz3.score(X_test, y_test)
viz3.show()

viz4 = PrecisionRecallCurve(LogisticRegression(random_state=seed))
viz4.fit(X_train, y_train)
viz4.score(X_test, y_test)
viz4.show()
```

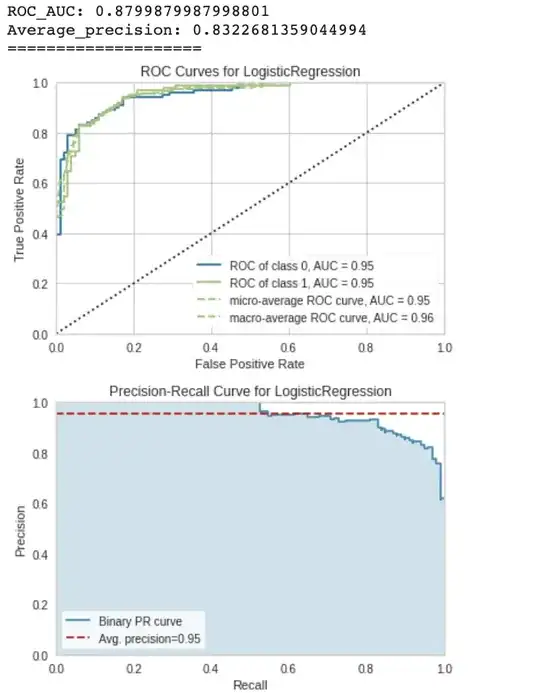

The code produces the following output:

As can be seen above, the metrics take different values depending on the package: the printed values are computed by scikit-learn, whereas the values annotated in the plots are computed by Yellowbrick.
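One possible source of the discrepancy, offered as a hypothesis to check rather than a confirmed answer: the scikit-learn calls above receive `y_pred` from `predict`, i.e. hard 0/1 labels, whereas ROC and precision-recall curves are normally built from continuous scores such as `predict_proba` or `decision_function`. The sketch below reuses the same data setup and computes both metrics from hard labels and from predicted probabilities. With 0/1 predictions the ROC curve has only one interior point (FPR, TPR), so its area reduces to (TPR + 1 - FPR) / 2, which is balanced accuracy. The sketch does not inspect Yellowbrick's internals, so whether its annotations match the score-based values is an assumption to verify against the plots.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import (
    average_precision_score,
    balanced_accuracy_score,
    roc_auc_score,
)
from sklearn.model_selection import train_test_split

seed = 42

# Same data and split as in the question
X, y = make_classification(n_samples=1000, n_features=2, n_redundant=0,
                           n_informative=2, random_state=seed)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=seed)

clf = LogisticRegression(random_state=seed).fit(X_train, y_train)

# Hard 0/1 labels: only a single threshold is available
y_pred = clf.predict(X_test)
# Continuous scores: probability of the positive class (column 1)
y_score = clf.predict_proba(X_test)[:, 1]
# decision_function is the logit of y_score, a monotone transform,
# so it ranks the samples identically
y_decision = clf.decision_function(X_test)

results = {
    "roc_auc_labels": roc_auc_score(y_test, y_pred),
    "roc_auc_scores": roc_auc_score(y_test, y_score),
    "roc_auc_decision": roc_auc_score(y_test, y_decision),
    "ap_labels": average_precision_score(y_test, y_pred),
    "ap_scores": average_precision_score(y_test, y_score),
}
for name, value in results.items():
    print(f"{name}: {value:.4f}")

# With 0/1 predictions, ROC AUC = (TPR + 1 - FPR) / 2, i.e. balanced accuracy
print(f"balanced_accuracy: {balanced_accuracy_score(y_test, y_pred):.4f}")
```

If the `*_scores` values printed here match the numbers in the Yellowbrick legends, the difference would come from passing labels instead of scores to the scikit-learn functions; if they do not match, some other setting differs between the two computations.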