I'm trying to implement normal mapping on a simple cube that I created. I followed this tutorial, https://learnopengl.com/Advanced-Lighting/Normal-Mapping, but I can't work out how normal mapping should be done when drawing 3D objects, since the tutorial uses a 2D object.

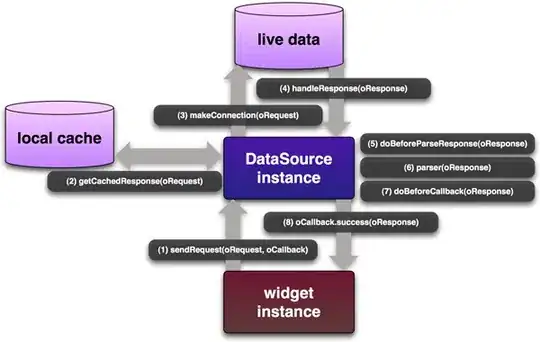

In particular, my cube seems almost correctly lit, but I think something is not working as it should. To help me debug, I'm using a geometry shader that draws the vertex normals in green and the tangents in red. Here are three screenshots of my work.

Directly lit

Side lit

Here I tried calculating my normals and tangents in a different way (quite wrong).

In the first image I calculate my cube's normals and tangents one face at a time. This seems to work for that face, but if I rotate the cube I think the lighting on the adjacent face is wrong. As you can see in the second image, the lighting there is not totally absent.
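To make the per-face approach concrete, here is a minimal C++ sketch of the kind of calculation I mean, using the edge and UV-delta formula from the tutorial. The names `Vec2`, `Vec3`, `FaceBasis` and `faceBasis` are made up for this illustration and are not my actual code; the sketch assumes counter-clockwise winding and non-degenerate UVs.

```cpp
#include <cmath>

// Hypothetical minimal vector types, for illustration only.
struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };

static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }

static Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y,
            a.z * b.x - a.x * b.z,
            a.x * b.y - a.y * b.x};
}

static Vec3 normalize(Vec3 v) {
    float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return {v.x / len, v.y / len, v.z / len};
}

struct FaceBasis { Vec3 normal; Vec3 tangent; };

// Normal and tangent of one triangle (p0, p1, p2) with texture
// coordinates (uv0, uv1, uv2). Every vertex of the face would get
// the same pair of vectors.
FaceBasis faceBasis(Vec3 p0, Vec3 p1, Vec3 p2, Vec2 uv0, Vec2 uv1, Vec2 uv2) {
    Vec3 e1 = sub(p1, p0);
    Vec3 e2 = sub(p2, p0);
    float du1 = uv1.x - uv0.x, dv1 = uv1.y - uv0.y;
    float du2 = uv2.x - uv0.x, dv2 = uv2.y - uv0.y;

    // Assumption: the UV triangle is not degenerate, so this is non-zero.
    float f = 1.0f / (du1 * dv2 - du2 * dv1);

    Vec3 t = {f * (dv2 * e1.x - dv1 * e2.x),
              f * (dv2 * e1.y - dv1 * e2.y),
              f * (dv2 * e1.z - dv1 * e2.z)};

    // Counter-clockwise winding gives an outward-facing normal.
    return {normalize(cross(e1, e2)), normalize(t)};
}
```

For a triangle of the cube's front face (z = +1) with U running along +x, this should give a normal along +z and a tangent along +x.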

In the third image I tried summing all normals and tangents per vertex, as I thought it should be done, but the result seems quite wrong, since there is too little lighting.
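As a rough illustration of the per-vertex summing, the C++ sketch below averages the normals of the three faces that meet at one cube corner. `Vec3`, `averagedNormal` and `dot` are hypothetical names for this example only, not my actual code. The averaged normal points along the cube's diagonal, so its dot product with any of the three face normals is only about 0.577, which may be related to the dim lighting I see.

```cpp
#include <cmath>
#include <vector>

// Hypothetical minimal vector type, for illustration only.
struct Vec3 { float x, y, z; };

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Sums the normals of all faces sharing a vertex and normalizes the result.
Vec3 averagedNormal(const std::vector<Vec3>& faceNormals) {
    Vec3 sum{0.0f, 0.0f, 0.0f};
    for (const Vec3& n : faceNormals) {
        sum.x += n.x;
        sum.y += n.y;
        sum.z += n.z;
    }
    float len = std::sqrt(dot(sum, sum));
    if (len == 0.0f) {
        return sum;  // opposing normals cancelled out; nothing to normalize
    }
    return {sum.x / len, sum.y / len, sum.z / len};
}
```

Calling `averagedNormal({{1, 0, 0}, {0, 1, 0}, {0, 0, 1}})` models the corner at (+x, +y, +z); each component of the result is 1/sqrt(3).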

In the end, my question is how I should calculate normals and tangents. Should I calculate them per face, sum the vectors per vertex across all the faces that share that vertex, or do something else?

EDIT --

I'm passing the normal and tangent to the vertex shader and setting up my TBN matrix there. But as you can see in the first image, where I draw my cube face by face, the faces adjacent to the one I'm looking at directly (which is well lit) do not seem to be lit correctly, and I don't know why. I thought I wasn't calculating my per-face normals and tangents correctly, and that calculating normals and tangents that take the whole object into account might be the right way. If it is correct to set up the TBN matrix from normals and tangents calculated as in the second image (green normals, red tangents), why does the right face not seem well lit?

EDIT 2 --

Vertex shader:

    void main(){
        texture_coordinates = textcoord;
        fragment_position = vec3(model * vec4(position,1.0));

        mat3 normalMatrix = transpose(inverse(mat3(model)));
        vec3 T = normalize(normalMatrix * tangent);
        vec3 N = normalize(normalMatrix * normal);
        T = normalize(T - dot(T, N) * N);
        vec3 B = cross(N, T);
        mat3 TBN = transpose(mat3(T,B,N));

        view_position = TBN * viewPos; // camera position
        light_position = TBN * lightPos; // light position
        fragment_position = TBN * fragment_position;

        gl_Position = projection * view * model * vec4(position,1.0);
    }

In the vertex shader I set up my TBN matrix and transform the light, fragment, and view positions to tangent space, so that I don't have to do any further transformation in the fragment shader.

Fragment shader:

    void main() {
        vec3 Normal = texture(TextSamplerNormals,texture_coordinates).rgb; // extract normal
        Normal = normalize(Normal * 2.0 - 1.0); // correct range
        material_color = texture2D(TextSampler,texture_coordinates.st); // diffuse map

        vec3 I_amb = AmbientLight.color * AmbientLight.intensity;

        vec3 lightDir = normalize(light_position - fragment_position);

        vec3 I_dif = vec3(0,0,0);
        float DiffusiveFactor = max(dot(lightDir,Normal),0.0);
        vec3 I_spe = vec3(0,0,0);
        float SpecularFactor = 0.0;

        if (DiffusiveFactor>0.0) {
            I_dif = DiffusiveLight.color * DiffusiveLight.intensity * DiffusiveFactor;

            vec3 vertex_to_eye = normalize(view_position - fragment_position);
            vec3 light_reflect = reflect(-lightDir,Normal);
            light_reflect = normalize(light_reflect);

            SpecularFactor = pow(max(dot(vertex_to_eye,light_reflect),0.0),SpecularLight.power);
            if (SpecularFactor>0.0) {
                I_spe = DiffusiveLight.color * SpecularLight.intensity * SpecularFactor;
            }
        }

        color = vec4(material_color.rgb * (I_amb + I_dif + I_spe),material_color.a);
    }