I've run into a problem while implementing stochastic gradient descent: the cost grows without bound instead of decreasing, and I can't figure out why.

MSE implementation:

    import numpy as np

    def mse(x, y, w, b):
        predictions = x @ w
        summed = (np.square(y - predictions - b)).mean(0)
        cost = summed / 2
        return cost

Gradients:

    def grad_w(y, x, w, b, n_samples):
        return -y @ x / n_samples + x.T @ x @ w / n_samples + b * x.mean(0)

    def grad_b(y, x, w, b, n_samples):
        return -y.mean(0) + x.mean(0) @ w + b
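One way to sanity-check these gradients is to compare `grad_w` against a finite-difference approximation of `mse` on a single mini-batch. The sketch below is only an illustration: `numeric_grad_w` is a hypothetical helper, the batch is random test data rather than the data from this question, and it assumes that `n_samples` is meant to be the number of rows in the batch that is passed in.

```python
import numpy as np

def mse(x, y, w, b):
    # Half mean squared error, same definition as in the question.
    return np.square(y - x @ w - b).mean(0) / 2

def grad_w(y, x, w, b, n_samples):
    return -y @ x / n_samples + x.T @ x @ w / n_samples + b * x.mean(0)

def numeric_grad_w(x, y, w, b, eps=1e-6):
    # Hypothetical helper: central differences of mse with respect to each w[j].
    grad = np.zeros_like(w)
    for j in range(len(w)):
        step = np.zeros_like(w)
        step[j] = eps
        grad[j] = (mse(x, y, w + step, b) - mse(x, y, w - step, b)) / (2 * eps)
    return grad

rng = np.random.default_rng(0)
x_batch = rng.normal(size=(100, 3))  # one mini-batch of 100 rows (random test data)
y_batch = rng.normal(size=100)
w0 = np.array([0.3, -0.1, 0.2])
b0 = 1.0

numeric = numeric_grad_w(x_batch, y_batch, w0, b0)
# n_samples equal to the number of rows in the batch (assumed intent).
with_batch_rows = grad_w(y_batch, x_batch, w0, b0, len(y_batch))
# n_samples equal to the full data set size, as in the training loop's call.
with_full_length = grad_w(y_batch, x_batch, w0, b0, 1000)

print("matches with n_samples = batch rows:", np.allclose(numeric, with_batch_rows, atol=1e-5))
print("matches with n_samples = 1000:", np.allclose(numeric, with_full_length, atol=1e-5))
```

If the two comparisons disagree, the value passed as `n_samples` in the training loop may be worth a closer look.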

SGD Implementation:

    def stochastic_gradient_descent(X, y, w, b, learning_rate=0.01, iterations=500, batch_size=100):
        length = len(y)
        cost_history = np.zeros(iterations)
        n_batches = int(length / batch_size)
        for it in range(iterations):
            cost = 0
            indices = np.random.permutation(length)
            X = X[indices]
            y = y[indices]
            for i in range(0, length, batch_size):
                X_i = X[i:i+batch_size]
                y_i = y[i:i+batch_size]
                w -= learning_rate * grad_w(y_i, X_i, w, b, length)
                b -= learning_rate * grad_b(y_i, X_i, w, b, length)
                cost = mse(X_i, y_i, w, b)
            cost_history[it] = cost
            if cost_history[it] <= 0.0052:
                break
        return w, cost_history[:it]

Random Variables:

    w_true = np.array([0.2, 0.5, -0.2])
    b_true = -1

    first_feature = np.random.normal(0, 1, 1000)
    second_feature = np.random.uniform(size=1000)
    third_feature = np.random.normal(1, 2, 1000)
    arrays = [first_feature, second_feature, third_feature]
    x = np.stack(arrays, axis=1)
    y = x @ w_true + b_true + np.random.normal(0, 0.1, 1000)

    w = np.asarray([0.0, 0.0, 0.0], dtype='float64')
    b = 1.0

After running this:

    theta, cost_history = stochastic_gradient_descent(x, y, w, b)
    print('Final cost/MSE: {:0.3f}'.format(cost_history[-1]))

I get this:

    Final cost/MSE: 3005958172614261248.000

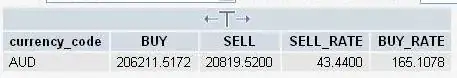

And here is the plot
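One thing that might be worth testing is whether the cost still blows up when each gradient is normalized by the number of rows in the current mini-batch instead of the full data set length. The sketch below is a hypothetical variant, not a confirmed fix: `sgd_batch_normalized` and its `seed` parameter are made-up names, it uses a seeded generator so the run is repeatable, it drops the early-stopping check, and it records the cost on the full data set once per epoch rather than on the last batch.

```python
import numpy as np

def mse(x, y, w, b):
    return np.square(y - x @ w - b).mean(0) / 2

def grad_w(y, x, w, b, n_samples):
    return -y @ x / n_samples + x.T @ x @ w / n_samples + b * x.mean(0)

def grad_b(y, x, w, b, n_samples):
    return -y.mean(0) + x.mean(0) @ w + b

def sgd_batch_normalized(X, y, w, b, learning_rate=0.01, iterations=500,
                         batch_size=100, seed=0):
    # Hypothetical variant of stochastic_gradient_descent from the question.
    rng = np.random.default_rng(seed)
    w = w.copy()  # avoid modifying the caller's array in place
    length = len(y)
    cost_history = []
    for _ in range(iterations):
        indices = rng.permutation(length)
        X_shuffled, y_shuffled = X[indices], y[indices]
        for i in range(0, length, batch_size):
            X_i = X_shuffled[i:i + batch_size]
            y_i = y_shuffled[i:i + batch_size]
            n_i = len(y_i)  # assumption: normalize by the rows in this batch
            w -= learning_rate * grad_w(y_i, X_i, w, b, n_i)
            b -= learning_rate * grad_b(y_i, X_i, w, b, n_i)
        # Cost on the full data set after each epoch (a change from the question).
        cost_history.append(mse(X, y, w, b))
    return w, b, np.array(cost_history)

# Same data-generating setup as in the question, but with a seeded generator.
rng = np.random.default_rng(0)
w_true = np.array([0.2, 0.5, -0.2])
b_true = -1
x = np.stack([rng.normal(0, 1, 1000),
              rng.uniform(size=1000),
              rng.normal(1, 2, 1000)], axis=1)
y = x @ w_true + b_true + rng.normal(0, 0.1, 1000)

w_fit, b_fit, history = sgd_batch_normalized(x, y, np.zeros(3), 1.0)
print('Final cost/MSE: {:0.3f}'.format(history[-1]))
```

Comparing this run's cost history with the original one should show whether the normalization argument is related to the divergence.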