Say I have a table where one column contains repeated values. How can I add another column that shows how many times each value has appeared so far (top-down)?

For example, the column "ccode" contains the value "R52" twice. Rather than joining the final count (2) onto every row, I want the first appearance of R52 to have count = 1, the second to have count = 2, and so on.

    CREATE TABLE Temp
    (
        ccode varchar(50),
        name varchar(50),
        Val1 varchar(50),
        g_Name varchar(50),
        ce_hybrid varchar(50)
    )

    INSERT INTO Temp VALUES
    ( 'R52' , 'adam@email.ca' , 1, 'WALT', '3P'),
    ( 'R52' , 'adam@email.ca' , 2 , 'KEN', '3P'),
    ( 'R00' , 'alison@email.ca' , 1 , 'QUIN', '3P')

    SELECT ccode, name, [1_G_Name], [2_G_Name], [1_Hybrids], [2_Hybrids] FROM
    (
        SELECT ccode, name, col, val FROM (
            SELECT *, Val1+'_G_Name' as Col, g_Name as Val FROM Temp
            UNION
            SELECT *, Val1+'_Hybrids' as Col, ce_hybrid as Val FROM Temp
        ) t
    ) tt
    PIVOT ( max(val) for Col in ([1_G_Name], [2_G_Name], [1_Hybrids], [2_Hybrids]) ) AS pvt

For a better idea: http://sqlfiddle.com/#!18/6160d/2

I want a table like the one above, but with the Val1 column added afterwards (dynamically), based on how many times each ccode has repeated so far in the table (top-down).

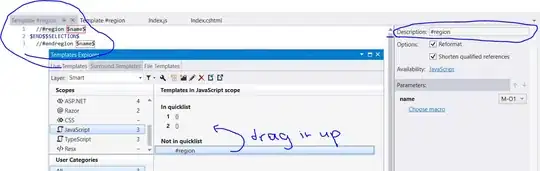

This output (image above) is correct. But say my table didn't have the Val1 column:

    INSERT INTO Temp VALUES
    ( 'R52', 'adam@email.ca', 'WALT', '3P'),
    ( 'R52', 'adam@email.ca', 'KEN', '3P'),
    ( 'R00', 'alison@email.ca', 'QUIN', '3P')

How would I add a Val1 column with the values (1, 2, 1), based on the repeat count described above?
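
To make the goal concrete, here is a rough sketch of the kind of running count I mean, using `ROW_NUMBER()` partitioned by ccode. It rests on assumptions that are not in my real table: the table name `Temp2` and the `id` identity column are made up for illustration. A table has no inherent row order, so "top-down" only means something if there is a column like this to order by. The cast to varchar is there so that `Val1+'_G_Name'` in the pivot query would still concatenate instead of failing on a type conversion.

```sql
-- Hypothetical variant of Temp without Val1.
-- The id column is an assumption: it stands in for the "top-down" order.
CREATE TABLE Temp2
(
    id        int IDENTITY(1,1),
    ccode     varchar(50),
    name      varchar(50),
    g_Name    varchar(50),
    ce_hybrid varchar(50)
);

INSERT INTO Temp2 (ccode, name, g_Name, ce_hybrid) VALUES
('R52', 'adam@email.ca',   'WALT', '3P'),
('R52', 'adam@email.ca',   'KEN',  '3P'),
('R00', 'alison@email.ca', 'QUIN', '3P');

-- ROW_NUMBER() restarts at 1 for each distinct ccode and counts up in id order,
-- so each row gets the number of times its ccode has appeared so far.
SELECT ccode,
       name,
       CAST(ROW_NUMBER() OVER (PARTITION BY ccode ORDER BY id) AS varchar(50)) AS Val1,
       g_Name,
       ce_hybrid
FROM Temp2
ORDER BY id;
```

If the id values follow the insertion order of the sample rows, this should number the two R52 rows 1 and 2 and the R00 row 1. The result could then take the place of `Temp` inside the pivot query, for example as a derived table or CTE.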