I can see how to define a custom continuous random variable class in SciPy using the `rv_continuous` class, or a discrete one using `rv_discrete`.
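For context, here is a minimal sketch of the two routes mentioned above. The names `MyNormalGen`, `my_normal` and `always_zero` are hypothetical. A `rv_continuous` subclass only needs `_pdf`, and SciPy derives `cdf`, `ppf` and `rvs` from it numerically. `rv_discrete` can be built directly from a table of values and probabilities, which is enough to express "always outputs 0" on its own:

```python
import numpy as np
from scipy import stats


class MyNormalGen(stats.rv_continuous):
    # Hypothetical continuous example: defining _pdf is enough; SciPy then
    # integrates it numerically to get cdf, ppf and rvs (correct but slow).
    def _pdf(self, x):
        return np.exp(-x**2 / 2) / np.sqrt(2 * np.pi)


my_normal = MyNormalGen(name="my_normal")

# Hypothetical discrete example: a distribution that always outputs 0,
# built from an explicit table of values and probabilities.
always_zero = stats.rv_discrete(name="always_zero", values=([0], [1.0]))
```

Neither class on its own covers a variable that mixes a density with a point mass, which is what the rest of this question is about.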

But is there any way to create a random variable that is neither purely continuous nor purely discrete? I want to model the following random variable, which is a mixture of a normal distribution and a degenerate distribution that always outputs 0:

    import numpy as np
    import matplotlib.pyplot as plt


    def my_rv(a, loc, scale):
        # With probability 1 - a return exactly 0,
        # otherwise draw from N(loc, scale**2).
        if np.random.random() > a:
            return 0
        else:
            return np.random.normal(loc=loc, scale=scale)


    # Example
    samples = np.array([my_rv(0.5, 0, 1) for i in range(1000)])
    plt.hist(samples, bins=21, density=True)
    plt.grid()
    plt.show()
    print(samples[:10].round(2))
    # [0. 0. 0. 0. 0. 0. 2.2 0.07 0. 0.12]
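The per-sample loop can also be vectorized by drawing from each component separately and merging them with a mask. This is a minimal sketch; the function name `my_rv_vectorized` and its `size`/`rng` parameters are hypothetical and use NumPy's `Generator` API:

```python
import numpy as np


def my_rv_vectorized(a, loc, scale, size, rng=None):
    # Hypothetical vectorized counterpart of my_rv: draw all normal samples,
    # then replace each one by exactly 0 with probability 1 - a.
    rng = np.random.default_rng(rng)
    normal = rng.normal(loc=loc, scale=scale, size=size)
    keep = rng.random(size) < a  # True with probability a
    return np.where(keep, normal, 0.0)


samples = my_rv_vectorized(0.5, 0, 1, size=1000, rng=0)
```

This draws some normal values that are then discarded, but for array sizes like the one above that is usually much cheaper than a Python-level loop.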

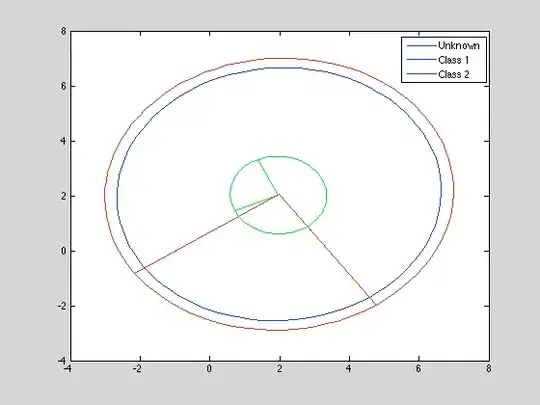

The pdf of this random variable looks something like this:

[In my actual application, `a` is quite small, e.g. 0.01, so this variable is 0 most of the time.]
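Written out, `my_rv` is a two-component mixture. With probability $a$ it draws from $\mathcal{N}(\mathrm{loc}, \mathrm{scale}^2)$, and with probability $1 - a$ it returns exactly 0. Its CDF therefore has a jump of height $1 - a$ at 0. This is why there is no ordinary pdf: the spike at 0 in the histogram is a point mass, not a density. The mean and variance follow directly from the mixture:

$$
F(x) = P(X \le x) = (1 - a)\,\mathbf{1}[x \ge 0] + a\,\Phi\!\left(\frac{x - \mathrm{loc}}{\mathrm{scale}}\right),
\qquad
\mathbb{E}[X] = a\,\mathrm{loc},
\qquad
\operatorname{Var}[X] = a\,\mathrm{scale}^2 + a(1 - a)\,\mathrm{loc}^2 .
$$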

Obviously, I can do this in plain Python without SciPy, either with the simple function above or by generating samples from each distribution separately and then merging them appropriately. However, I was hoping to make this a SciPy custom random variable and get all the benefits and features of that class.
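One possible approach is to subclass `rv_continuous` anyway and encode the point mass in `_cdf` and `_ppf`. Below is a minimal sketch of that approach, under stated assumptions. The class `ZeroInflatedNormalGen`, the instance `zinorm` and the shape names `a`, `mu`, `sigma` are hypothetical. `mu` and `sigma` are shape parameters rather than SciPy's built-in `loc`/`scale`, because those would shift and stretch the point mass as well. When `_rvs` is not overridden, `rv_continuous` samples by inverse transform through `_ppf`, so `rvs` returns exact zeros. Anything that relies on a density (`pdf`, `logpdf`, `fit`, `expect`, `entropy`) only sees the continuous part and should be treated with care.

```python
import numpy as np
from scipy import stats


class ZeroInflatedNormalGen(stats.rv_continuous):
    # Hypothetical mixture: N(mu, sigma**2) with probability a, exact 0 with
    # probability 1 - a. The point mass lives only in _cdf, _ppf and _stats.

    def _argcheck(self, a, mu, sigma):
        # a is the probability of drawing from the normal component.
        return (a > 0) & (a <= 1) & (sigma > 0)

    def _cdf(self, x, a, mu, sigma):
        # Step of height (1 - a) at 0 plus the weighted normal CDF.
        return (1 - a) * (x >= 0) + a * stats.norm.cdf(x, loc=mu, scale=sigma)

    def _pdf(self, x, a, mu, sigma):
        # Density of the continuous part only; it integrates to a, not 1.
        return a * stats.norm.pdf(x, loc=mu, scale=sigma)

    def _ppf(self, q, a, mu, sigma):
        # Generalized inverse of the CDF; quantiles inside the jump map to 0.
        lower = a * stats.norm.cdf(0, loc=mu, scale=sigma)  # F(0-)
        upper = lower + (1 - a)                             # F(0)
        below = stats.norm.ppf(q / a, loc=mu, scale=sigma)
        above = stats.norm.ppf((q - (1 - a)) / a, loc=mu, scale=sigma)
        return np.where(q <= lower, below, np.where(q <= upper, 0.0, above))

    def _stats(self, a, mu, sigma):
        # Exact mean and variance of the mixture; skew/kurtosis left to SciPy.
        mean = a * mu
        var = a * (sigma**2 + mu**2) - mean**2
        return mean, var, None, None


zinorm = ZeroInflatedNormalGen(name="zinorm", shapes="a, mu, sigma")
```

For example, `zinorm(a=0.01, mu=0.0, sigma=1.0)` gives a frozen distribution whose `rvs` is 0 about 99% of the time and whose `cdf` jumps by 0.99 at 0.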