If you want to access Azure Data Lake Store (Gen1) from Spark, please refer to the following steps. Note that I tested this with Spark 3.0.1 built for Hadoop 3.2.

- Create a Service Principal

az login

az ad sp create-for-rbac --name "myApp" --role contributor --scopes /subscriptions/<subscription-id>/resourceGroups/<group-name> --sdk-auth
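
The `--sdk-auth` output is a JSON document that includes `clientId`, `clientSecret` and `tenantId`; these are the values for `<sp appid>`, `<sp password>` and `<tenant>` in the code below. As a rough sketch (not part of the tested setup), assuming you redirected that output to a file, the values can be pulled out in a POSIX shell like this. The helper names `json_field` and `adl_settings` and the file name `sp.json` are made up for illustration, and the `sed` parsing assumes plain `"key": "value"` pairs with no escaped quotes; `jq` is a sturdier choice if you have it.

```shell
#!/bin/sh
# Hypothetical helper: print the value of a top-level string field from the
# JSON saved from `az ad sp create-for-rbac ... --sdk-auth`.
json_field() {
  # $1 = path to the saved JSON, $2 = field name
  sed -n "s/.*\"$2\"[[:space:]]*:[[:space:]]*\"\([^\"]*\)\".*/\1/p" "$1" | head -n 1
}

# Print the ADL OAuth settings that correspond to the service principal.
adl_settings() {
  # $1 = path to the saved JSON
  client_id=$(json_field "$1" clientId)
  client_secret=$(json_field "$1" clientSecret)
  tenant_id=$(json_field "$1" tenantId)
  echo "fs.adl.oauth2.client.id=$client_id"
  echo "fs.adl.oauth2.credential=$client_secret"
  echo "fs.adl.oauth2.refresh.url=https://login.microsoftonline.com/$tenant_id/oauth2/token"
}

# Example (assumes the JSON was saved as sp.json):
#   adl_settings sp.json
```

The printed secret is sensitive, so avoid pasting this output into logs or source control.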

- Grant Service Principal access to Data Lake

Connect-AzAccount

# Get the service principal's object ID from its client (application) ID

$sp=Get-AzADServicePrincipal -ApplicationId 42e0d080-b1f3-40cf-8db6-c4c522d988c4

$fullAcl="user:$($sp.Id):rwx,default:user:$($sp.Id):rwx"

$newFullAcl = $fullAcl.Split("{,}")

Set-AdlStoreItemAclEntry -Account <account name> -Path / -Acl $newFullAcl -Recurse -Debug
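
The ACL string above has two entries for the service principal: an access entry that applies to the item itself and a `default:` entry that new children of a folder inherit. To make the format explicit, here is a small shell sketch that builds and splits the same string. The function names `build_acl_spec` and `split_acl_spec` are hypothetical, and nothing here calls Azure.

```shell
#!/bin/sh
# Build the ACL spec used above: one access entry plus one default entry
# for the same service principal object ID.
build_acl_spec() {
  # $1 = service principal object ID, $2 = permissions (defaults to rwx)
  perms=${2:-rwx}
  printf 'user:%s:%s,default:user:%s:%s\n' "$1" "$perms" "$1" "$perms"
}

# Split the spec on commas into one entry per line, similar to what the
# PowerShell snippet does before passing the entries to the cmdlet.
split_acl_spec() {
  printf '%s\n' "$1" | tr ',' '\n'
}

# Example with a placeholder object ID:
#   split_acl_spec "$(build_acl_spec "<sp object id>")"
```

If you only need read access for the Spark job, passing `r-x` as the second argument gives a narrower spec; whether that is enough depends on whether the job also writes, as the example below does.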

- Code

// Namespaces used by the snippet below

using System.Collections.Generic;

using Microsoft.Spark.Sql;

using Microsoft.Spark.Sql.Types;

string filePath = "adl://<account name>.azuredatalakestore.net/parquet/people.parquet";

// Create SparkSession

SparkSession spark = SparkSession

.Builder()

.AppName("Azure Data Lake Storage example using .NET for Apache Spark")

.Config("fs.adl.impl", "org.apache.hadoop.fs.adl.AdlFileSystem")

.Config("fs.adl.oauth2.access.token.provider.type", "ClientCredential")

.Config("fs.adl.oauth2.client.id", "<sp appid>")

.Config("fs.adl.oauth2.credential", "<sp password>")

.Config("fs.adl.oauth2.refresh.url", "https://login.microsoftonline.com/<tenant>/oauth2/token")

.GetOrCreate();

// Create sample data

var data = new List<GenericRow>

{

new GenericRow(new object[] { 1, "John Doe"}),

new GenericRow(new object[] { 2, "Jane Doe"}),

new GenericRow(new object[] { 3, "Foo Bar"})

};

// Create schema for sample data

var schema = new StructType(new List<StructField>()

{

new StructField("Id", new IntegerType()),

new StructField("Name", new StringType()),

});

// Create DataFrame using data and schema

DataFrame df = spark.CreateDataFrame(data, schema);

// Print DataFrame

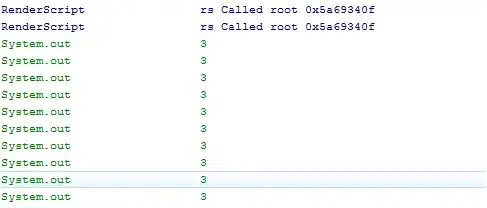

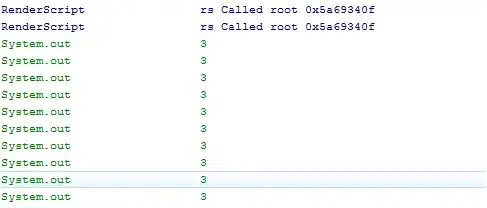

df.Show();

// Write DataFrame to Azure Data Lake Gen1

df.Write().Mode(SaveMode.Overwrite).Parquet(filePath);

// Read saved DataFrame from Azure Data Lake Gen1

DataFrame readDf = spark.Read().Parquet(filePath);

// Print DataFrame

readDf.Show();

// Stop Spark session

spark.Stop();

- Run

spark-submit ^

--packages org.apache.hadoop:hadoop-azure-datalake:3.2.0 ^

--class org.apache.spark.deploy.dotnet.DotnetRunner ^

--master local ^

microsoft-spark-3-0_2.12-<version>.jar ^

dotnet <application name>.dll
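
The command above uses the cmd line continuation `^`; in bash the equivalent is `\`. It can also be convenient to keep the service principal secret out of the source by passing the same settings with `--conf`, since Spark copies any `spark.hadoop.*` property into the Hadoop configuration. A minimal sketch under those assumptions: the function name `adl_conf_args` and the `ADL_*` environment variable names are hypothetical, and the values are assumed to contain no whitespace.

```shell
#!/bin/sh
# Print the --conf arguments that carry the ADL OAuth settings, reading the
# service principal values from (hypothetical) environment variables.
adl_conf_args() {
  # Fail early if a required variable is missing or empty.
  : "${ADL_CLIENT_ID:?set ADL_CLIENT_ID}"
  : "${ADL_CLIENT_SECRET:?set ADL_CLIENT_SECRET}"
  : "${ADL_TENANT_ID:?set ADL_TENANT_ID}"
  echo "--conf spark.hadoop.fs.adl.oauth2.access.token.provider.type=ClientCredential"
  echo "--conf spark.hadoop.fs.adl.oauth2.client.id=$ADL_CLIENT_ID"
  echo "--conf spark.hadoop.fs.adl.oauth2.credential=$ADL_CLIENT_SECRET"
  echo "--conf spark.hadoop.fs.adl.oauth2.refresh.url=https://login.microsoftonline.com/$ADL_TENANT_ID/oauth2/token"
}

# Usage in bash (placeholders as in the cmd version above):
#   spark-submit \
#     --packages org.apache.hadoop:hadoop-azure-datalake:3.2.0 \
#     --class org.apache.spark.deploy.dotnet.DotnetRunner \
#     --master local \
#     $(adl_conf_args) \
#     microsoft-spark-3-0_2.12-<version>.jar \
#     dotnet <application name>.dll
```

With this approach the matching `.Config(...)` calls for the client ID, credential and refresh URL can be left out of the C# code. Note that command-line arguments may be visible to other users on the machine, so this is a convenience for local testing rather than a way to protect the secret.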

For more details, please refer to:

https://learn.microsoft.com/en-us/azure/data-lake-store/data-lake-store-service-to-service-authenticate-using-active-directory

https://hadoop.apache.org/docs/current/hadoop-azure-datalake/index.html

https://learn.microsoft.com/en-us/azure/data-lake-store/data-lake-store-access-control