I am new to off-heap storage on the JVM, and ChronicleMap looks like a good fit for it. My main concern is performance.

I ran a simple test with the following configuration:

    ChronicleMapBuilder<IntValue, BondVOImpl> builder =
            ChronicleMapBuilder.of(IntValue.class, BondVOImpl.class)
                    .minSegments(512)
                    .averageValue(new BondVOImpl())
                    .maxBloatFactor(4.0)
                    .valueMarshaller(new BytesMarshallableReaderWriter<>(BondVOImpl.class))
                    .entries(ITERATIONS);

and got the following results:

    ----- Concurrent HASHMAP ------------------------
    Time for putting 7258
    Time for getting 678

    ----- CHRONICLE MAP ------------------------
    Time for putting 4704
    Time for getting 2246
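A minimal sketch of the kind of timing loop that produces numbers like these, shown for the ConcurrentHashMap side only. This is not the exact test used above: the class name `MapTimingSketch`, the `ITERATIONS` value, the `String` values, and the millisecond unit are all assumptions made for illustration. It includes a warm-up pass, since single-shot timings on the JVM are easily skewed by JIT compilation.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

public class MapTimingSketch {
    // Hypothetical entry count; the real ITERATIONS value is not shown in the post.
    static final int ITERATIONS = 1_000_000;

    // Written to after each read loop so the JIT cannot discard the gets as dead code.
    static volatile long sink;

    /** Puts keys 0..n-1 into the map and returns the elapsed time in milliseconds. */
    static long timePuts(Map<Integer, String> map, int n) {
        long start = System.nanoTime();
        for (int i = 0; i < n; i++) {
            map.put(i, "value-" + i);
        }
        return (System.nanoTime() - start) / 1_000_000;
    }

    /** Gets keys 0..n-1 from the map and returns the elapsed time in milliseconds. */
    static long timeGets(Map<Integer, String> map, int n) {
        long checksum = 0;
        long start = System.nanoTime();
        for (int i = 0; i < n; i++) {
            String v = map.get(i);
            if (v != null) {
                checksum += v.length();
            }
        }
        long elapsed = (System.nanoTime() - start) / 1_000_000;
        sink = checksum;
        return elapsed;
    }

    public static void main(String[] args) {
        // Warm-up pass on a throwaway map so the measured pass runs compiled code.
        Map<Integer, String> warmUp = new ConcurrentHashMap<>();
        timePuts(warmUp, ITERATIONS);
        timeGets(warmUp, ITERATIONS);

        // Measured pass on a fresh map.
        Map<Integer, String> map = new ConcurrentHashMap<>();
        System.out.println("Time for putting " + timePuts(map, ITERATIONS));
        System.out.println("Time for getting " + timeGets(map, ITERATIONS));
    }
}
```

Because both methods take a plain `Map`, the same loops could in principle be pointed at any other `Map` implementation for a like-for-like comparison, although the key and value types would have to be adapted to match.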

Read performance is much lower than with ConcurrentHashMap (2246 vs. 678 for gets).

I have also tried 1024 and 2048 segments, the default bloat factor, and the default marshaller, but the results are the same.

I only want the off-heap feature in order to reduce GC pauses; I have no intention of using persistence, replication, or sharing the map beyond the JVM.

So the question is: should I use ChronicleMap or stick with ConcurrentHashMap? Or are there other configuration options I can use to improve ChronicleMap's performance?

Thanks in advance.

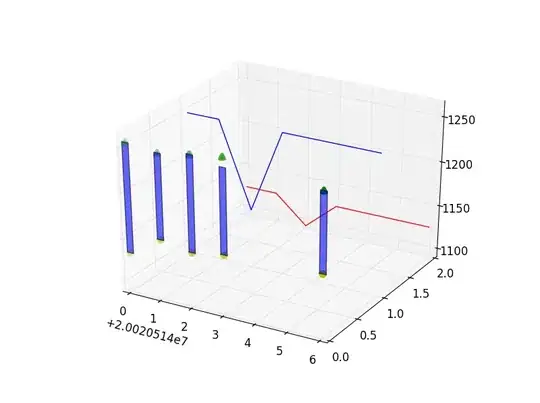

**Benchmarking using https://github.com/OpenHFT/Chronicle-Map/blob/master/src/test/java/net/openhft/chronicle/map/perf/MapJLBHTest.java:**