I am not sure how feasible it is to do this in real time for the full screen, but if the window is small and the text is large, it can be done faster and more accurately.

To process frames continuously, set a timer that skips frames, then recapture the window and rerun the OCR model. For more accurate results, use a larger image or tune the OCR parameters. If the window is in the background when capturing, bring it to the front first. My implementation grabs a screen region, so otherwise it will capture whatever window is on top in that region.
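A minimal sketch of the timer idea, assuming the capture and OCR steps are wrapped in two callables. The names `run_periodic_ocr`, `capture`, `ocr`, `interval` and `max_frames` are hypothetical and not part of any library. If one OCR pass takes longer than the interval, the missed ticks are dropped instead of queued, so the loop never falls behind:

```python
import time
from typing import Any, Callable, List


def run_periodic_ocr(capture: Callable[[], Any],
                     ocr: Callable[[Any], Any],
                     interval: float,
                     max_frames: int) -> List[Any]:
    """Capture and OCR one frame roughly every `interval` seconds.

    Hypothetical helper: `capture` returns a frame, `ocr` processes it.
    """
    results = []
    next_tick = time.monotonic()
    for _ in range(max_frames):
        frame = capture()
        results.append(ocr(frame))
        next_tick += interval
        now = time.monotonic()
        if now > next_tick:
            # OCR overran the interval: skip every tick we already missed
            missed = int((now - next_tick) // interval) + 1
            next_tick += missed * interval
        # Sleep until the next scheduled tick (never a negative duration)
        time.sleep(max(0.0, next_tick - now))
    return results
```

With the code in this answer, `capture` could be something like `lambda: ImageGrab.grab(bbox=bbox)` and `ocr` could be `lambda im: reader.readtext(np.array(im))`. On Windows, pygetwindow's `Window.activate()` should bring the target window to the front before each capture, though this is untested here.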

A helpful related question: "Can python get the screen shot of a specific window?"

This is my simple implementation for grabbing a single specified window and processing it with EasyOCR. The detected text and its location are printed to the console.

Code

```python
import os

import cv2
import easyocr
import numpy as np
import pygetwindow as gw
import pyscreenshot as ImageGrab

# Needs to run only once: loads the model into memory
print("Loading Model")
reader = easyocr.Reader(['ch_sim', 'en'], gpu=True)

# Windows can be found by title, as the active window, or from the full list
print(gw.getAllTitles())
print(gw.getActiveWindow())
print(gw.getAllWindows())

# Provide the desired window title manually, or pick one from getAllTitles()
win = gw.getWindowsWithTitle('untitled1 – test2.py PyCharm')[0]

# Clamp to 0: maximized or partly off-screen windows can report negative coordinates
bbox = (max(0, win.left), max(0, win.top), max(0, win.right), max(0, win.bottom))  # X1, Y1, X2, Y2

# Print window location, title
print(bbox)
print(win)

# Grab the full screen instead:
# im = ImageGrab.grab()

# Grab only the region covered by the selected window
print("Grabbing Window")
im = ImageGrab.grab(bbox=bbox)

# Alternatively, save the image to a file and process that file:
# im.save("window1.jpg")
# result = reader.readtext('window1.jpg')

# Resize the image to a percentage of its original size
print("Resizing Image")
# PIL images are RGB; OpenCV's imshow/imwrite expect BGR
im_np = cv2.cvtColor(np.array(im.convert("RGB")), cv2.COLOR_RGB2BGR)
scale_percent = 50
width = int(im_np.shape[1] * scale_percent / 100)
height = int(im_np.shape[0] * scale_percent / 100)
im_resized = cv2.resize(im_np, (width, height), interpolation=cv2.INTER_AREA)

# Process the grabbed image directly as a NumPy array
print("Processing with OCR")
result = reader.readtext(im_resized)
print(result)

# cv2.imwrite fails silently if the folder does not exist
os.makedirs("images", exist_ok=True)

# For each detected text: print text and location, draw its bounding box, save a snapshot
for i, r in enumerate(result):
    quad, text = r[0], r[1]  # quad holds the 4 corner points [x, y]
    print("################################")
    print(quad[0], quad[1], quad[2], quad[3])
    print("FOUND:")
    print(text)
    print("################################")

    xs = [p[0] for p in quad]
    ys = [p[1] for p in quad]
    x1, x2, y1, y2 = min(xs), max(xs), min(ys), max(ys)

    # Draws onto im_resized in place, so boxes accumulate across iterations
    image = cv2.rectangle(im_resized, (int(x1), int(y1)), (int(x2), int(y2)), (0, 255, 255), 3)
    cv2.imshow("window_name", image)
    cv2.waitKey(0)  # press any key to move to the next detection
    cv2.imwrite("images/img_" + str(i) + ".jpg", image)

cv2.destroyAllWindows()
```
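With its default settings, EasyOCR's `readtext` returns one `(corner_points, text, confidence)` entry per detection; the loop above only uses the first two. A minimal sketch of turning those entries into axis-aligned boxes while dropping low-confidence reads, which is one simple way to "tune" the output. The helper name `to_boxes` and the `min_conf=0.5` threshold are hypothetical choices, not EasyOCR settings:

```python
from typing import List, Sequence, Tuple

Point = Sequence[float]
# One EasyOCR-style detection: (4 corner points, text, confidence)
Detection = Tuple[Sequence[Point], str, float]


def to_boxes(result: Sequence[Detection],
             min_conf: float = 0.5) -> List[Tuple[int, int, int, int, str]]:
    """Return (x1, y1, x2, y2, text) for each detection with confidence >= min_conf."""
    boxes = []
    for quad, text, conf in result:
        if conf < min_conf:
            continue  # skip detections the model is unsure about
        xs = [p[0] for p in quad]
        ys = [p[1] for p in quad]
        boxes.append((int(min(xs)), int(min(ys)), int(max(xs)), int(max(ys)), text))
    return boxes
```

Calling `to_boxes(result)` right after `reader.readtext(...)` would give a filtered list that the drawing loop could iterate over instead of the raw result.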

Partial Console Output

```
################################
[409, 117] [465, 117] [465, 129] [409, 129]
FOUND:
r[u][1][u],
################################
################################
[469, 117] [525, 117] [525, 129] [469, 129]
FOUND:
r[u][2][0],
################################
################################
[529, 117] [587, 117] [587, 129] [529, 129]
FOUND:
r[u][][0]1
################################
```
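The printed corner points are relative to the *resized* window image, not the screen. A minimal sketch of mapping them back to absolute screen coordinates, assuming the window's top-left corner is the `(X1, Y1)` used in the grab `bbox`, that `scale_percent` is the same value used for resizing, and that no OS display scaling is involved. The helper name `to_screen` is hypothetical, and results can be off by a pixel because `cv2.resize` works with truncated integer sizes:

```python
from typing import Sequence, Tuple


def to_screen(point: Sequence[float], win_left: int, win_top: int,
              scale_percent: float) -> Tuple[int, int]:
    """Map a point from the resized capture back to absolute screen coordinates."""
    factor = 100.0 / scale_percent  # undo the resize (50% -> multiply by 2)
    x, y = point
    return int(round(win_left + x * factor)), int(round(win_top + y * factor))
```

For example, with the window at `(100, 50)` and `scale_percent = 50`, the first corner `[409, 117]` above maps to `(918, 284)`. The resulting point could then be passed to something like `pyautogui.click(x, y)` if you want to act on the detected text.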

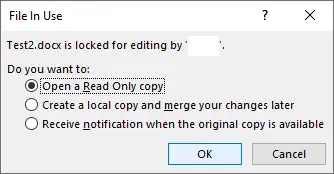

Image Output

The question does not mention a platform or programming language. Most of the libraries below claim to be cross-platform. Look into them for getting a window's location, selecting a window, controlling windows, and taking screenshots.

https://github.com/asweigart/pyautogui

https://github.com/asweigart/pygetwindow

https://github.com/ponty/pyscreenshot

An easy Python-based OCR library:

https://github.com/JaidedAI/EasyOCR