What about this:

    import pandas as pd
    import matplotlib.pyplot as plt
    import numpy as np
    import hdbscan

    df = pd.DataFrame(np.random.randint(0,20,size=(100, 4)), columns=list('ABCD'))

    plt.rcParams['image.cmap'] = 'Paired'

    A = df['A'] #X start
    B = df['B'] #Y start
    C = df['C'] #X arrive
    D = df['D'] #Y arrive

    clusterer = hdbscan.HDBSCAN()

    df['LENGTH'] = np.sqrt(np.square(df.C-df.A) + np.square(df.D-df.B))
    df['DIRECTION'] = np.degrees(np.arctan2(df.D-df.B, df.C-df.A))

    coords = df[['LENGTH', 'DIRECTION']].values

    # fit_predict returns one label per row of coords (so per row of df), -1 meaning noise.
    # Assigning it directly avoids merging back on the float columns, which duplicates
    # rows as soon as two vectors share the same length and direction.
    df['CLUSTER'] = clusterer.fit_predict(coords)

    # Noise (-1) and any cluster beyond the 5th have no entry here and are drawn in black
    colors = {0:"green", 1:"blue", 2:"red", 3:"yellow", 4:"pink"}
    df['COLOR'] = df['CLUSTER'].map(colors).fillna('black')

    fig,ax = plt.subplots()
    ax.set_xlim(-5, 25)
    ax.set_ylim(-5, 25)
    ax.quiver(df.A, df.B, (df.C-df.A), (df.D-df.B), angles='xy', scale_units='xy', scale=1, alpha=0.5, color=df.COLOR.tolist())
    plt.show()

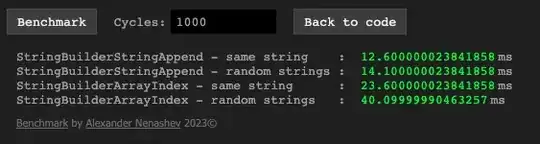

This clusters the vectors based on their length and direction (the direction is converted to degrees: on my first try, the small range of values in radians did not match very well with the model).

I don't think this is a very rigorous ("Cartesian") solution, since the two values analysed by the model are not in the same units... But the visual results are not so bad.
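To see why mixing units matters, here is a small sketch with made-up feature rows. It assumes the clusterer uses Euclidean distance (the default `metric` of `hdbscan.HDBSCAN`); the `gap` helper is hypothetical. With the direction in radians, a quarter turn weighs less than a 5-unit change in length; in degrees it weighs 90 units, more than the longest vector possible in this random data (about 26.9 for coordinates in 0..19).

```python
import numpy as np

def gap(row1, row2):
    """Euclidean distance between two (LENGTH, DIRECTION) feature rows."""
    return float(np.linalg.norm(np.subtract(row1, row2)))

# Pair 1 differs only in length (+5), pair 2 only in direction (a quarter turn)
length_gap = gap([10.0, 0.0], [15.0, 0.0])
quarter_turn_rad = gap([10.0, 0.0], [10.0, np.pi / 2])  # direction in radians
quarter_turn_deg = gap([10.0, 0.0], [10.0, 90.0])       # direction in degrees

print(length_gap, quarter_turn_rad, quarter_turn_deg)
```

Neither unit is intrinsically right: the relative weight of the two features is set by an arbitrary choice of unit, which is why rescaling the columns before fitting is worth considering.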

I also tried clustering on the 4 coordinates, which is more rigorous. But, quite predictably, it groups the vectors by sub-areas of the plane (when there are any):

    # Continues the first snippet (same df, clusterer and plotting code).
    # fit_predict returns the labels in row order, so they can be stored directly.
    coords = df[['A', 'B', 'C', 'D']].values
    df['CLUSTER'] = clusterer.fit_predict(coords)

    colors = {0:"green", 1:"blue", 2:"red", 3:"yellow", 4:"pink"}
    df['COLOR'] = df['CLUSTER'].map(colors).fillna('black')
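A small sketch of why the four-coordinate version groups vectors by sub-area: two vectors with the same displacement but different start points are far apart in (A, B, C, D) space, yet identical in (LENGTH, DIRECTION) space. The example vectors and the `length_direction` helper are made up for illustration.

```python
import numpy as np

def length_direction(v):
    """(LENGTH, DIRECTION in degrees) of a vector given as (A, B, C, D)."""
    a, b, c, d = v
    return np.array([np.hypot(c - a, d - b), np.degrees(np.arctan2(d - b, c - a))])

# Same displacement (+3, +4), starting from two different areas of the plane
v1 = np.array([0, 0, 3, 4])
v2 = np.array([15, 15, 18, 19])

# Distance seen by the 4-coordinate model vs. by the length/direction model
coord_dist = np.linalg.norm(v1 - v2)
feature_dist = np.linalg.norm(length_direction(v1) - length_direction(v2))

print(coord_dist, feature_dist)
```

With the raw coordinates, the start position dominates, so nearby vectors cluster together whatever their shape.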

EDIT

I gave it another try, based on the (very good) suggestion that the angle is not a good variable because it wraps around: 0 and 2π are the same direction, so two almost identical vectors can get very different angle values (with arctan2, the jump sits at ±π). So I chose to use both the sine and the cosine of the angle instead. I also scaled the length, so that the 3 variables have matching scales.

So the result would be:

    import pandas as pd
    import matplotlib.pyplot as plt
    import numpy as np
    from sklearn.preprocessing import robust_scale
    import hdbscan

    df = pd.DataFrame(np.random.randint(0,20,size=(100, 4)), columns=list('ABCD'))

    plt.rcParams['image.cmap'] = 'Paired'

    A = df['A'] #X start
    B = df['B'] #Y start
    C = df['C'] #X arrive
    D = df['D'] #Y arrive

    # Length, robust-scaled (median / interquartile range) to be comparable with cos and sin
    df['LENGTH'] = robust_scale(np.sqrt(np.square(df.C-df.A) + np.square(df.D-df.B)))
    df['DIRECTION'] = np.arctan2(df.D-df.B, df.C-df.A)
    # Cosine and sine instead of the raw angle, which jumps at +/- pi
    df['COS'] = np.cos(df['DIRECTION'])
    df['SIN'] = np.sin(df['DIRECTION'])

    columns = ['LENGTH', 'COS', 'SIN']
    values = df[columns].values

    clusterer = hdbscan.HDBSCAN()
    # One label per row of df; -1 means noise (not in any cluster)
    cluster_labels = clusterer.fit_predict(values)

    # Real clusters are 0 .. num_clusters-1; noise goes to the extra index num_clusters
    num_clusters = cluster_labels.max() + 1
    df['CLUSTER'] = np.where(cluster_labels == -1, num_clusters, cluster_labels)

    def get_cmap(n, name='hsv'):
        '''
        Returns a function that maps each index in 0, 1, ..., n-1 to a distinct
        RGB color; the keyword argument name must be a standard mpl colormap name.
        Credits to @Ali
        https://stackoverflow.com/questions/14720331/how-to-generate-random-colors-in-matplotlib#answer-25628397
        '''
        # plt.cm.get_cmap was removed in Matplotlib 3.9; plt.get_cmap still exists
        return plt.get_cmap(name, n)

    # One color per cluster plus one for noise; one more because 'hsv' starts and ends on red
    cmap = get_cmap(num_clusters+2)
    colors = {x:cmap(x) for x in range(num_clusters+1)}
    df['COLOR'] = df['CLUSTER'].map(colors)

    print("Number of clusters : ", num_clusters)

    n_panels = num_clusters + 1  # one subplot per cluster, plus one for noise
    nrows = (n_panels + 1) // 2
    # squeeze=False keeps `axes` 2-D even when there is a single row
    fig, axes = plt.subplots(nrows=nrows, ncols=2, squeeze=False)

    for k, ax in enumerate(axes.ravel()):
        ax.set_xlim(-5, 25)
        ax.set_ylim(-5, 25)
        ax.set_aspect('equal', adjustable='box')
        if k < num_clusters:
            ax.set_title(f"CLUSTER #{k+1}", fontsize=10)
        this_df = df[df.CLUSTER==k]
        if this_df.empty:
            continue  # spare grid slot, or no noise at all
        ax.quiver(
            this_df.A, #X
            this_df.B, #Y
            (this_df.C-this_df.A), #X component of vector
            (this_df.D-this_df.B), #Y component of vector
            angles = 'xy',
            scale_units = 'xy',
            scale = 1,
            color=this_df.COLOR.tolist()
        )

    plt.show()

The results are much better (though this depends a lot on the input dataset); the last subplot shows the vectors that were not found to be in any cluster.
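The two feature choices of the EDIT (sine/cosine instead of the raw angle, robust-scaled length) can be checked on toy numbers. This is a numpy-only sketch: `robust_scale_1d` is a hypothetical helper meant to reproduce what `sklearn.preprocessing.robust_scale` does with its default settings (centering on the median, dividing by the 25-75 percentile range), not the library's own code.

```python
import numpy as np

# 1) Raw angles jump at +/-pi: 179 deg and -179 deg are 2 deg apart as
#    directions, but about 358 deg apart as numbers.
theta = np.radians([179.0, -179.0])
raw_gap = abs(theta[0] - theta[1])

# On the unit circle (cos, sin) the two directions are close, as they should be.
points = np.column_stack([np.cos(theta), np.sin(theta)])
circle_gap = np.linalg.norm(points[0] - points[1])

# 2) Robust scaling: subtract the median, divide by the interquartile range.
def robust_scale_1d(x):
    x = np.asarray(x, dtype=float)
    q25, median, q75 = np.percentile(x, [25, 50, 75])
    return (x - median) / (q75 - q25)

scaled = robust_scale_1d([1, 2, 3, 4, 5])
print(raw_gap, circle_gap, scaled)
```

After this scaling, the bulk of the lengths spans roughly one unit, which is the same order of magnitude as cosines and sines in [-1, 1].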

Edit #2

If by "direction" you mean an angle in the [0, π) interval (i.e. undirected vectors), you will want to include the following code before computing the cosines and sines:

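    # Fold the angle into [0, pi): a vector and its reverse get the same direction.
    # np.mod also sends an angle of exactly pi (a leftward horizontal vector) to 0.
    df['DIRECTION'] = np.mod(df['DIRECTION'], np.pi)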
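One caveat, with a hedged sketch: once angles are folded into [0, π), the wrap-around moves to 0/π. A line at 1° and a line at 179° are only 2° apart as undirected lines, yet their cosines have opposite signs, so the sine/cosine features place them far apart. A common workaround, which is a different technique from the snippet above and not something tested in this answer, is to double the folded angle before taking the cosine and sine, so that [0, π) covers the whole unit circle. `undirected_features` is a hypothetical helper name.

```python
import numpy as np

def undirected_features(direction):
    """(cos, sin) features for undirected angles given in radians.

    Folds each angle into [0, pi), then doubles it, so that lines at
    angles just above 0 and just below pi land close together.
    """
    folded = np.mod(np.asarray(direction, dtype=float), np.pi)
    return np.column_stack([np.cos(2 * folded), np.sin(2 * folded)])

# Two nearly horizontal lines, 2 degrees apart once direction is ignored
angles = np.radians([1.0, 179.0])

# Folding only: the two lines end up on opposite sides of the unit circle
plain = np.column_stack([np.cos(angles), np.sin(angles)])
plain_gap = np.linalg.norm(plain[0] - plain[1])

# Folding + doubling: the two lines are close together
doubled = undirected_features(angles)
doubled_gap = np.linalg.norm(doubled[0] - doubled[1])

print(plain_gap, doubled_gap)
```

In the DataFrame code, this would amount to computing COS and SIN from `2 * df['DIRECTION']` after the fold.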