I am using the osmdata package to extract data from OpenStreetMap (OSM) and turn it into an sf object. Unfortunately, I have not found a way to get the encoding right using the functions of the osmdata and sf packages. Currently, I change the encoding afterwards via the Encoding function, which is quite cumbersome because it involves a nested loop (over all data frames contained in the object returned by the query, and over all character columns within these data frames).

Is there a more generic, nicer way to get the encoding right?

The following code shows the problem. It extracts OSM data on pharmacies in the German city of Neumünster:

    library(osmdata)
    library(sf)
    library(purrr)

    results <- opq(bbox = "Neumünster, Germany") %>%
      add_osm_feature(key = "amenity", value = "pharmacy") %>%
      osmdata_sf()

    pharmacy_points <- results$osm_points

    head(pharmacy_points$addr.city)

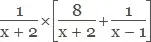

My locale and encoding are set as follows:

My current, but unsatisfactory, solution is the following:

    encode_osm <- function(list){
      # For all data frames in query result
      for (df in (names(list)[map_lgl(list, is.data.frame)])) {
        last <- length(list[[df]])
        # For all columns except the last column ("geometry")
        for (col in names(list[[df]])[-last]){
          # Change the encoding to UTF-8
          Encoding(list[[df]][[col]]) <- "UTF-8"
        }
      }
      return(list)
    }

    results_encoded <- encode_osm(results)
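
To make clear what the workaround above relies on, here is a minimal sketch that needs no OSM query. It assumes (I have not verified this) that the strings coming back from the query are valid UTF-8 bytes that simply lack the UTF-8 mark. The variable names `city` and `unmarked` are made up for illustration.

```r
# "Neumünster" as a UTF-8 string; enc2utf8() makes sure it is marked as UTF-8
city <- enc2utf8("Neum\u00fcnster")

# Drop the encoding mark. The bytes are unchanged, but R now treats the
# string as being in the native encoding (assumed to mimic the OSM result).
unmarked <- city
Encoding(unmarked) <- "unknown"
identical(charToRaw(unmarked), charToRaw(city))  # TRUE: same bytes

# Re-declare the encoding, as encode_osm() does for every column.
# This only sets the mark; it does not convert any bytes.
Encoding(unmarked) <- "UTF-8"
Encoding(unmarked)  # "UTF-8"
```

Because `Encoding<-` only re-labels the bytes, the workaround is only correct if the data really are UTF-8; if they were in another encoding, a conversion with `iconv()` would be needed instead.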