Weighted least squares (WLS) can be computed in R using `lm()` with the `weights` argument. To understand the formula, I also computed the estimates manually, but the results are not the same:

    # Sample from model
    set.seed(123456789)
    x1 <- 10 * rbeta(500, 2, 6)
    x2 <- x1 + 2*rchisq(500, 3, 2) + rnorm(500)*sd(x1)
    u <- rnorm(500)
    y <- 0.5 + 1.2*x1 - 0.7*x2 + u

    # Produce weights (shouldn't matter how)
    w <- x1/sd(x1)

    # Manual WLS
    y_WLS <- y*sqrt(w)
    x1_WLS <- x1*sqrt(w)
    x2_WLS <- x2*sqrt(w)
    summary(lm(y_WLS ~ x1_WLS + x2_WLS))

    # Automatic WLS
    summary(lm(y ~ x1+x2, weights=w))
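
For reference, the help page for `lm()` describes the `weights` argument as minimizing the weighted sum of squared residuals, `sum(w*e^2)`. Written out for the model above, that criterion would read as follows. The symbols $b_0, b_1, b_2$ are my own notation for the candidate coefficients, not taken from the textbook:

$$
\left(\hat\beta_0, \hat\beta_1, \hat\beta_2\right)
= \arg\min_{b_0,\, b_1,\, b_2} \sum_{i=1}^{500} w_i \left(y_i - b_0 - b_1 x_{1i} - b_2 x_{2i}\right)^2
$$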

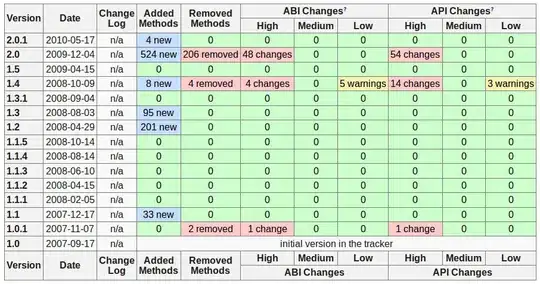

The two commands give a different result. It should be exactly the same. I am just following the instructions from Wooldridge (2019), section 8.4a, which relevant bits I have combined in the image below:

As you can read, WLS with weight w is equivalent to running OLS on a transformed model in which each variable has been multiplied by the square root of w, which is what I do above. Why the difference, then?
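
To make the discrepancy concrete without reading two full summaries, here is a minimal sketch that re-creates the same simulated data and puts the two coefficient vectors side by side. The object names `fit_manual`, `fit_auto`, and `comparison` are only illustrative, and the manual fit uses `I()` inside the formula instead of separate `_WLS` variables, which should be equivalent to the code above:

```r
# Re-create the simulated data from the question (same seed, same draw order)
set.seed(123456789)
x1 <- 10 * rbeta(500, 2, 6)
x2 <- x1 + 2 * rchisq(500, 3, 2) + rnorm(500) * sd(x1)
u  <- rnorm(500)
y  <- 0.5 + 1.2 * x1 - 0.7 * x2 + u
w  <- x1 / sd(x1)

# Manual attempt: y, x1 and x2 each multiplied by sqrt(w), as in the question
fit_manual <- lm(I(y * sqrt(w)) ~ I(x1 * sqrt(w)) + I(x2 * sqrt(w)))

# Built-in WLS through the weights argument
fit_auto <- lm(y ~ x1 + x2, weights = w)

# Side-by-side coefficient estimates (hypothetical helper object)
comparison <- cbind(manual    = unname(coef(fit_manual)),
                    automatic = unname(coef(fit_auto)))
rownames(comparison) <- c("(Intercept)", "x1", "x2")
print(comparison)
```

If the two approaches were really equivalent as coded, the two columns would agree up to numerical precision, which could be checked with `all.equal(comparison[, "manual"], comparison[, "automatic"])`.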