Update

This is now officially supported by keras-cv.

To create a class label for CutMix or MixUp style augmentation, we can draw a mixing ratio `beta` from a beta distribution (e.g. `np.random.beta` or `scipy.stats.beta`) and combine the two labels as follows:

```python
label = label_one * beta + (1 - beta) * label_two
```
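Here is a minimal NumPy sketch of the two-label case. It assumes one-hot labels and a `Beta(alpha, alpha)` draw as in MixUp. `mix_labels` and `alpha=0.2` are illustrative choices, not part of any library:

```python
import numpy as np

def mix_labels(label_one, label_two, alpha=0.2, rng=None):
    """Mix two one-hot labels with a ratio drawn from Beta(alpha, alpha).

    alpha=0.2 is an illustrative value (an assumption, not from the post).
    """
    rng = np.random.default_rng() if rng is None else rng
    beta = rng.beta(alpha, alpha)  # mixing ratio in [0, 1]
    label = label_one * beta + (1 - beta) * label_two
    return label, beta

# Two one-hot labels for a 10-class problem such as CIFAR-10
label_one = np.eye(10)[3]
label_two = np.eye(10)[7]
mixed, beta = mix_labels(label_one, label_two, rng=np.random.default_rng(0))
print(mixed.round(3), round(beta, 3))
```

The two weights sum to 1, so the mixed label is still a valid probability vector.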

But what if we have more than two images? In YOLOv4, the authors introduced an interesting augmentation for object detection called Mosaic augmentation. CutMix and MixUp combine two images, but Mosaic builds each augmented sample from four. For object detection, we can shift each instance's box coordinates accordingly and so recover the correct ground truth. But for pure image classification, how should the label be computed?
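For detection, the coordinate shift can be sketched as follows. `shift_boxes` is a hypothetical helper, and it assumes boxes are `[x1, y1, x2, y2]` in source-image pixels. It clips each box to the region that was copied, translates it to where that region lands in the mosaic, and drops boxes that end up with zero area. The demo uses the top-left quadrant layout of the `mosaicmix` function in this post:

```python
import numpy as np

def shift_boxes(boxes, crop, paste_xy):
    """Map [x1, y1, x2, y2] pixel boxes from a source image into a mosaic.

    boxes:    (N, 4) array in source-image pixels (assumed box format).
    crop:     (x1b, y1b, x2b, y2b), the region copied out of the source.
    paste_xy: (x1a, y1a), where that region's top-left lands in the mosaic.
    """
    x1b, y1b, x2b, y2b = crop
    x1a, y1a = paste_xy
    boxes = np.asarray(boxes, dtype=np.float32).reshape(-1, 4)
    # Clip each box to the copied region so only its visible part remains
    clipped = np.stack([
        np.clip(boxes[:, 0], x1b, x2b),
        np.clip(boxes[:, 1], y1b, y2b),
        np.clip(boxes[:, 2], x1b, x2b),
        np.clip(boxes[:, 3], y1b, y2b),
    ], axis=1)
    # Translate from source coordinates to mosaic coordinates
    dx, dy = x1a - x1b, y1a - y1b
    shifted = clipped + np.array([dx, dy, dx, dy], dtype=np.float32)
    # Drop boxes that fell entirely outside the copied region
    keep = (shifted[:, 2] > shifted[:, 0]) & (shifted[:, 3] > shifted[:, 1])
    return shifted[keep]

# Top-left quadrant with DIM = 32 and xc = yc = 16 (assumed values):
# the bottom-right 16x16 crop of the source is pasted at (0, 0).
boxes = np.array([[20, 20, 30, 30], [0, 0, 8, 8]])
print(shift_boxes(boxes, crop=(16, 16, 32, 32), paste_xy=(0, 0)))
```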

Here is a starter.

```python
import random

import matplotlib.pyplot as plt
import numpy as np  # needed by mosaicmix below
import tensorflow as tf

(train_images, train_labels), (test_images, test_labels) = \
    tf.keras.datasets.cifar10.load_data()

# Keep a small subset for the demo
train_images = train_images[:10, :, :]
train_labels = train_labels[:10]

train_images.shape, train_labels.shape
```

```
((10, 32, 32, 3), (10, 1))
```

Here is a function we've written for this augmentation. It is rather ugly, with an inner and an outer loop, so please suggest a more efficient approach if there is one.

```python
def mosaicmix(image, label, DIM, minfrac=0.25, maxfrac=0.75):
    '''image, label: batches of samples, e.g. images of shape (N, DIM, DIM, 3)
    and labels of shape (N, 1). Returns one mosaic per input image.
    '''
    # Mosaic centre, shared by all mosaics in this call
    xc, yc = np.random.randint(int(DIM * minfrac), int(DIM * maxfrac), (2,))
    indices = np.random.permutation(int(image.shape[0]))
    final_imgs, final_lbs = [], []

    # Iterate over the full indices
    for j in range(len(indices)):
        # Take sample j plus 3 random samples to create one mosaic
        rand4indices = [j] + random.sample(list(indices), 3)

        # Fresh canvas per mosaic; reusing a single array would make every
        # entry of final_imgs refer to the same (last) mosaic
        mosaic_image = np.zeros((DIM, DIM, 3), dtype=np.float32)

        # Make mosaic with 4 samples
        for i in range(len(rand4indices)):
            if i == 0:    # top left
                x1a, y1a, x2a, y2a = 0, 0, xc, yc
                x1b, y1b, x2b, y2b = DIM - xc, DIM - yc, DIM, DIM  # from bottom right
            elif i == 1:  # top right
                x1a, y1a, x2a, y2a = xc, 0, DIM, yc
                x1b, y1b, x2b, y2b = 0, DIM - yc, DIM - xc, DIM  # from bottom left
            elif i == 2:  # bottom left
                x1a, y1a, x2a, y2a = 0, yc, xc, DIM
                x1b, y1b, x2b, y2b = DIM - xc, 0, DIM, DIM - yc  # from top right
            elif i == 3:  # bottom right
                x1a, y1a, x2a, y2a = xc, yc, DIM, DIM
                x1b, y1b, x2b, y2b = 0, 0, DIM - xc, DIM - yc  # from top left

            # Copy-paste from the i-th *selected* sample (not from image i)
            mosaic_image[y1a:y2a, x1a:x2a] = image[rand4indices[i]][y1b:y2b, x1b:x2b]

        # Append the mosaic sample
        final_imgs.append(mosaic_image)

    # Labels are still returned unchanged, i.e. not yet correct for the mosaics
    return final_imgs, label
```
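On efficiency, one loop-free option is to build every mosaic at once with NumPy slicing and `np.concatenate`. This is a sketch that assumes an `(N, dim, dim, C)` image array and one centre `(xc, yc)` per batch, as in `mosaicmix`. `mosaicmix_batched` is a hypothetical name. Unlike `random.sample`, it draws the three partner images with replacement:

```python
import numpy as np

def mosaicmix_batched(images, dim, minfrac=0.25, maxfrac=0.75, rng=None):
    """Loop-free mosaic: one mosaic per input image, built by slicing.

    Uses the same quadrant layout as mosaicmix. Returns the mosaics, the
    (4, N) source indices (TL, TR, BL, BR) and the centre (xc, yc).
    """
    rng = np.random.default_rng() if rng is None else rng
    n = images.shape[0]
    xc, yc = rng.integers(int(dim * minfrac), int(dim * maxfrac), size=2)
    # Image j fills the top-left slot; three random partners fill the rest
    idx = np.stack([np.arange(n)] + [rng.integers(0, n, size=n) for _ in range(3)])
    tl, tr, bl, br = (images[i] for i in idx)
    # Top row: (n, yc, xc) next to (n, yc, dim - xc)
    top = np.concatenate([tl[:, dim - yc:, dim - xc:], tr[:, dim - yc:, :dim - xc]], axis=2)
    # Bottom row: (n, dim - yc, xc) next to (n, dim - yc, dim - xc)
    bottom = np.concatenate([bl[:, :dim - yc, dim - xc:], br[:, :dim - yc, :dim - xc]], axis=2)
    mosaics = np.concatenate([top, bottom], axis=1)
    return mosaics, idx, (xc, yc)

# Demo on random data shaped like the CIFAR-10 subset above
demo = np.random.default_rng(0).integers(0, 256, size=(10, 32, 32, 3))
mosaics, idx, (xc, yc) = mosaicmix_batched(demo, 32)
print(mosaics.shape)  # (10, 32, 32, 3)
```

`idx[:, j]` lists the four source images of mosaic `j` in top-left, top-right, bottom-left, bottom-right order, which is what any label computation would need.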

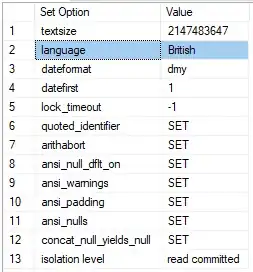

The augmented samples, currently with the wrong labels.

```python
data, label = mosaicmix(train_images, train_labels, 32)
plt.imshow(data[5] / 255)
```

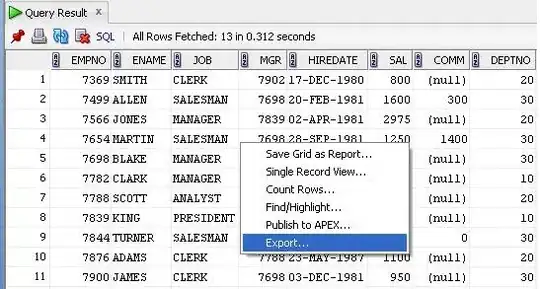
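One possible way to fix the labels is to weight each source image's one-hot label by the fraction of the mosaic it covers. This extends the area-ratio idea behind CutMix to four images. The sketch below is only an assumption about how the labels could be assigned. `mosaic_label` is a hypothetical helper, not the keras-cv implementation:

```python
import numpy as np

def mosaic_label(labels, xc, yc, dim, num_classes):
    """Area-weighted soft label for a 4-image mosaic (an assumed scheme).

    labels: 4 integer class ids in the order top-left, top-right,
            bottom-left, bottom-right (the quadrant order of mosaicmix).
    xc, yc: the mosaic centre, as sampled in mosaicmix.
    """
    areas = np.array([
        xc * yc,                  # top left
        (dim - xc) * yc,          # top right
        xc * (dim - yc),          # bottom left
        (dim - xc) * (dim - yc),  # bottom right
    ], dtype=np.float32) / (dim * dim)
    one_hot = np.eye(num_classes, dtype=np.float32)[np.asarray(labels).ravel()]
    # Each image contributes in proportion to the area it covers
    return (areas[:, None] * one_hot).sum(axis=0)

soft = mosaic_label([3, 7, 3, 1], xc=8, yc=16, dim=32, num_classes=10)
print(soft)
```

Inside `mosaicmix`, calling `mosaic_label(label[rand4indices], xc, yc, DIM, 10)` for each mosaic would give a soft label that sums to 1, where 10 is the CIFAR-10 class count.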

Here are some more examples for motivation. The data is from the Cassava Leaf competition.

(source: googleapis.com)

(source: googleapis.com)