With the help of @JaSON, here's code that gets the data from the table in a local HTML file using Selenium:

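```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By

driver = webdriver.Chrome(service=Service("C:/chromedriver.exe"))
driver.get('file:///C:/Users/Future/Desktop/local.html')

# Each div with id="Section3" marks the start of a row
counter = len(driver.find_elements(By.ID, "Section3"))

# Divs with exactly {0} Section3 divs before them and {1} after them,
# i.e. the divs between one Section3 marker and the next
xpath = "//div[@id='Section3']/following-sibling::div[count(preceding-sibling::div[@id='Section3'])={0} and count(following-sibling::div[@id='Section3'])={1}]"

print(counter)

for i in range(counter):
    print('\nRow #{} \n'.format(i + 1))
    _xpath = xpath.format(i + 1, counter - (i + 1))
    cells = driver.find_elements(By.XPATH, _xpath)
    for cell in cells:
        # Text of the first <td> inside this div
        value = cell.find_element(By.XPATH, ".//td").text
        print(value)
```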

How can these rows be converted into a valid table that I can export to a CSV file? Here's the local HTML file: https://pastebin.com/raw/hEq8K75C

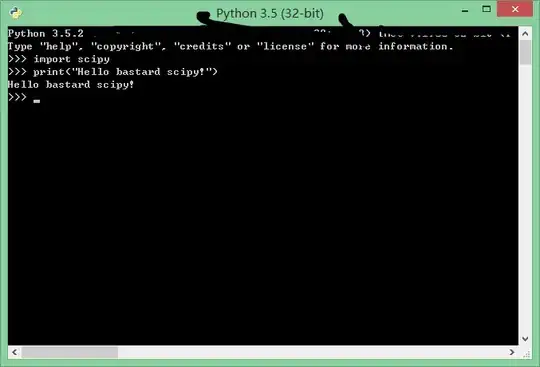
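One possible approach, sketched under assumptions: instead of printing each `value`, collect the cell texts of each `Row #` into a list, then write the list of rows with Python's built-in `csv` module. The sketch assumes `rows` is a list of lists of strings, one inner list per row. The helper names `normalize_rows` and `write_rows_to_csv` and the file name `output.csv` are hypothetical. Rows are padded to the same width on the assumption that the groups between `Section3` markers may not all contain the same number of cells; this has not been checked against the linked HTML.

```python
import csv
from typing import Iterable, List, Sequence


def normalize_rows(rows: Iterable[Sequence[str]]) -> List[List[str]]:
    """Pad every row with empty strings so all rows have the same width."""
    rows = [list(row) for row in rows]
    width = max((len(row) for row in rows), default=0)
    return [row + [""] * (width - len(row)) for row in rows]


def write_rows_to_csv(rows: Iterable[Sequence[str]], path: str,
                      header: Sequence[str] = ()) -> None:
    """Write the scraped rows to a CSV file, one table row per line."""
    table = normalize_rows(rows)
    # newline="" lets the csv module control line endings;
    # utf-8-sig writes a BOM so Excel recognizes the file as UTF-8
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        writer = csv.writer(f)
        if header:
            writer.writerow(header)
        writer.writerows(table)


# Hypothetical usage with the loop from the question (collect instead of print):
# rows = []
# for i in range(counter):
#     cells = driver.find_elements(By.XPATH, xpath.format(i + 1, counter - (i + 1)))
#     rows.append([cell.find_element(By.XPATH, ".//td").text for cell in cells])
# write_rows_to_csv(rows, "output.csv")
```

Opening the file with `newline=""` follows the `csv` module documentation; without it, extra blank lines can appear between rows on Windows.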

**@Paul Brennan:** After changing `counter` to `counter - 1` to temporarily skip the error on row 18, I got 17 rows in filename.txt. Here's a snapshot of the output: