Edit, 17.12.2020: With preprocessing it now recognizes everything except the "O" in CREO. See the stages in ocr8.py. Then ocr9.py demonstrates (not automated yet) how to find the lines of text from the coordinates returned by pytesseract.image_to_boxes(), estimate the approximate size of the letters and the inter-symbol distance, then extrapolate one step ahead and search for a single character with --psm 8 (note that --psm 8 treats the crop as a single word; --psm 10 is Tesseract's single-character mode).
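A minimal sketch of the line-extrapolation idea, assuming the boxes are already parsed into `(char, left, bottom, right, top)` tuples (the image_to_boxes() format with the page column dropped). The function names `group_into_lines` and `predict_next_box`, the 5-pixel tolerance and the fallback gap are hypothetical and not taken from ocr9.py.

```python
from statistics import mean

# A box is (char, left, bottom, right, top), as in pytesseract.image_to_boxes()
# output with the page column dropped (hypothetical layout for this sketch).

def group_into_lines(boxes, tol=5):
    """Group boxes whose bottom and top edges are within `tol` pixels."""
    lines = []
    for box in boxes:
        _, _, bottom, _, top = box
        for line in lines:
            _, _, line_bottom, _, line_top = line[0]
            if abs(bottom - line_bottom) <= tol and abs(top - line_top) <= tol:
                line.append(box)
                break
        else:
            lines.append([box])  # no matching line: start a new one
    # Sort the letters of each line from left to right
    return [sorted(line, key=lambda b: b[1]) for line in lines]


def predict_next_box(line):
    """Extrapolate one letter to the right, using the mean letter width
    and the mean gap between adjacent letters of the line."""
    widths = [right - left for _, left, _, right, _ in line]
    gaps = [line[i + 1][1] - line[i][3] for i in range(len(line) - 1)]
    width = mean(widths)
    gap = mean(gaps) if gaps else 0.2 * width  # hypothetical fallback for 1-letter lines
    _, _, bottom, right, top = line[-1]
    left = right + gap
    return round(left), bottom, round(left + width), top
```

The predicted box (plus some margin) can then be cropped and passed to `pytesseract.image_to_string(crop, config="--psm 10")` to read one character. Box y values are measured from the bottom of the image, so flip them before slicing a NumPy array.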

It turned out that Tesseract had actually recognized the "O" in CREO, but read it as ♀, probably confused by the small "k" below it and similar details.

Since it is a rare and "strange"/unexpected symbol, it can be corrected, i.e. replaced automatically (see the function Correct()).

There is a technical detail: Tesseract returns the ASCII control character 12 (0x0C), while in my editor, which works in Unicode/UTF-8, the symbol ♀ has the code 9792 (U+2640). So in the code I wrote it as chr(12).
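Correct() itself is not shown here, so the following is only a hypothetical sketch of such a correction step. One assumption worth checking: Tesseract's plain-text output usually also ends each page with a form feed (chr(12)), so this sketch replaces the symbol only when it directly follows a letter, to avoid appending a spurious "O" at the end of the text. The `CORRECTIONS` table and the name `correct` are illustrative.

```python
import re

# Hypothetical correction table: symbols that are unexpected in this logo,
# mapped to the letter they were most likely misread from.
CORRECTIONS = {
    chr(12): "O",  # 0x0C, displayed as ♀ by consoles using code page 437
}

def correct(text):
    """Replace suspicious symbols, but only when they directly follow a letter,
    so the form feed that ends each page of Tesseract output is kept."""
    for bad, good in CORRECTIONS.items():
        text = re.sub(r"(?<=[A-Za-z])" + re.escape(bad), good, text)
    return text
```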

The latest version: ocr9.py

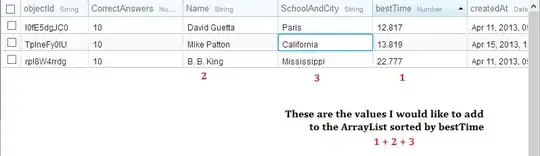

You mentioned that PRATOLA and PELIGNA have to be given separately; just split the text on " ":

```python
splitted = text.split(" ")
```

Recognized:

```
CUCINE
LUBE
STORE
PRATOLA PELIGNA
CRE [+O with correction and extrapolation of the line]
KITCHENS
...
```

```
C 39 211 47 221 0
U 62 211 69 221 0
C 84 211 92 221 0
I 107 211 108 221 0
N 123 211 131 221 0
E 146 211 153 221 0
L 39 108 59 166 0
U 63 107 93 166 0
B 98 108 128 166 0
E 133 108 152 166 0
S 440 134 468 173 0
T 470 135 499 173 0
O 500 134 539 174 0
R 544 135 575 173 0
E 580 135 608 173 0
P 287 76 315 114 0
R 319 76 350 114 0
A 352 76 390 114 0
T 387 76 417 114 0
O 417 75 456 115 0
L 461 76 487 114 0
A 489 76 526 114 0
P 543 76 572 114 0
E 576 76 604 114 0
L 609 76 634 114 0
I 639 76 643 114 0
G 649 75 683 115 0
N 690 76 722 114 0
A 726 76 764 114 0
C 21 30 55 65 0
R 62 31 93 64 0
E 99 31 127 64 0
K 47 19 52 25 0
I 61 19 62 25 0
T 71 19 76 25 0
C 84 19 89 25 0
H 96 19 109 25 0
E 113 19 117 25 0
N 127 19 132 25 0
S 141 19 145 22 0
```

These are the boxes returned by pytesseract.image_to_boxes(). Each line holds the character, then left, bottom, right, top (measured from the bottom-left corner of the image) and the page number.
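To use these boxes for cropping with OpenCV/NumPy, they have to be parsed and their y coordinates flipped, because Tesseract measures y from the bottom of the image while NumPy rows start at the top. A minimal sketch; the helper names `parse_boxes` and `to_top_left` are hypothetical.

```python
def parse_boxes(box_text):
    """Parse image_to_boxes() output: 'char left bottom right top page' per line."""
    boxes = []
    for line in box_text.splitlines():
        parts = line.rsplit(" ", 5)  # split from the right in case char is unusual
        if len(parts) != 6:
            continue  # skip empty or malformed lines
        left, bottom, right, top, page = map(int, parts[1:])
        boxes.append((parts[0], left, bottom, right, top, page))
    return boxes


def to_top_left(box, image_height):
    """Convert a box from Tesseract's bottom-left origin to (x, y, w, h)
    with a top-left origin, as used by OpenCV and NumPy slicing."""
    _, left, bottom, right, top, _ = box
    return left, image_height - top, right - left, top - bottom
```

A crop is then `image[y:y + h, x:x + w]`, where `image_height` must be the height of the same image that was passed to image_to_boxes().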

Initial message:

I guess that for the area where "CUCINE" is, an adaptive threshold may segment the letters better, or maybe applying some edge detection first would help.

KITCHENS seems very small; what about enlarging that area (and thus the distance between its letters)?

For CREO, I guess Tesseract is confused by the big and small captions next to each other.

For the "O" in CREO, you could apply dilation to close the gap in the "O".

Edit: I played with it a bit, but without Tesseract, and it needs more work. My goal was to make the letters more contrasting. Some of these processing steps may need to be applied selectively, only to CUCINE, perhaps by running the recognition in two passes: when you get partial words such as "CU", apply the adaptive threshold etc. (below) and run OCR on a rectangle around "CU..." at the top.

Binary Threshold:

Adaptive Threshold, Median blur (to clean noise) and invert:

Dilation connects small gaps, but it also destroys detail.

```python
import cv2
import numpy as np  # needed only for the optional dilation kernel below
import pytesseract

# On Windows, set the path to the Tesseract executable if it is not on PATH:
#pytesseract.pytesseract.tesseract_cmd = r'D:\Program Files\pytesseract\tesseract.exe'

path_to_image = "logo.png"
#path_to_image = "logo1.png"

image = cv2.imread(path_to_image)
if image is None:
    raise FileNotFoundError(path_to_image)

# Resize 3 times, so that small captions such as KITCHENS get more pixels
h, w = image.shape[:2]
image = cv2.resize(image, (w * 3, h * 3), interpolation=cv2.INTER_AREA)

# Convert the image to grayscale
gray_image = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
cv2.imshow('grey image', gray_image)
cv2.waitKey(0)

# Convert it to a binary image by thresholding. This step is required for
# colored images; if you skip it, Tesseract may not detect the text correctly.
# Global (Otsu) alternative:
#threshold_img = cv2.threshold(gray_image, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)[1]
# Adaptive threshold with block size 13 and constant 3 (11, 2 was also tried)
threshold_img = cv2.adaptiveThreshold(gray_image, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C,
                                      cv2.THRESH_BINARY, 13, 3)
cv2.imshow('threshold image', threshold_img)
cv2.waitKey(0)

# Median blur cleans the noise left by the adaptive threshold
# (GaussianBlur with a (3, 3) kernel was also tried)
#threshold_img = cv2.GaussianBlur(threshold_img, (3, 3), 0)
threshold_img = cv2.medianBlur(threshold_img, 5)
cv2.imshow('medianBlur', threshold_img)
cv2.waitKey(0)

# Invert black and white
threshold_img = cv2.bitwise_not(threshold_img)
cv2.imshow('Invert', threshold_img)
cv2.waitKey(0)

# Optional dilation: connects small gaps, but also destroys detail.
# Note that a (1, 1) kernel leaves the image unchanged; try (2, 2) or (3, 3).
#kernel = np.ones((1, 1), np.uint8)
#threshold_img = cv2.dilate(threshold_img, kernel)
#cv2.imshow('Dilate', threshold_img)
#cv2.waitKey(0)

cv2.imshow('threshold image', threshold_img)
# Keep the output window open until the user presses a key
cv2.waitKey(0)
# Close all windows on screen
cv2.destroyAllWindows()

# Now feed the image to Tesseract
text = pytesseract.image_to_string(threshold_img)
print(text)
```