I'm try to solve a multi-linear-regression problem with a very simple linear network. The network only consists of a single dense layer as its output layer and the activation function is set to linear. I synthesize the output data Y by multiplying the input data X by the system (weight) matrix A: Y=A.X . Both X and A contain random numbers with normal or uniform distributions (the problem happens regardless). In this case, the network reaches above 99% accuracy in only 7 Epochs over 1000 samples as one would expect.

Now, if I synthesize X from Y, which this time around has uniform random numbers, using A's inverse: X = inv(A).Y , and try to train the network, after two hundred Epochs, the accuracy only reaches 94%.

Why is there such a huge disparity between the two cases even-though the system matrix (weights) is exactly the same. The only difference is related to the random distribution of X and Y. If I'm forced to follow the second case, how can I improve the trainability of my network so that it can be trained in few epochs.

I have tried different optimizers, initializers and regularizations but they didn't help.

Here's the code for the version that doesn't converge so well. To get the first version I use gen1 in Dataset.from_generator(gen2, ...) instead of gen2.

import numpy as np

import matplotlib.pyplot as plt

import tensorflow as tf

import keras

N = 256

np.random.seed(0)

A = np.random.normal(0,.4,(N,N))

Ainv = np.linalg.inv(A)

import itertools

input_size = N

def gen1():

for i in itertools.count(1):

X = np.random.rand(N,1)-.5

Y = np.dot(A,X)

yield (X[:,0],Y[:,0])

def gen2():

for i in itertools.count(1):

Y = np.random.rand(N,1)-0.5

X = np.dot(Ainv,Y)

yield (X[:,0],Y[:,0])

dataset = tf.data.Dataset.from_generator(

gen2,

(tf.float64, tf.float64),

(tf.TensorShape([N]), tf.TensorShape([N])))

train_ds = dataset.take(950)

valid_ds = dataset.skip(950).take(50)

#train_ds = train_ds.shuffle(2000, reshuffle_each_iteration = True)

train_ds = train_ds.batch(1)

valid_ds = valid_ds.batch(1)

from keras.layers import Input, Dense

from keras.models import Model

from keras import backend

def rabs(y_t, y_p):

return backend.mean(backend.abs(y_p - y_t), axis=-1)/(tf.keras.backend.max(y_t) - tf.keras.backend.min(y_t))*100

inp = Input(shape=(input_size,))

out = Dense(N, activation='linear')(inp)

autoencoder = Model(inp, out)

#opt = tf.keras.optimizers.Adam(learning_rate=.0001)

opt = tf.keras.optimizers.SGD(learning_rate=.2, momentum=0.7)

autoencoder.compile(optimizer= opt,

loss=tf.keras.losses.MeanSquaredError(),metrics= [rabs])

autoencoder.summary()

autoen_model = autoencoder.fit(train_ds, validation_data = valid_ds, epochs = 200)

plt.plot(autoen_model.history['rabs'])

plt.plot(autoen_model.history['val_rabs'])

plt.title('Model Accuracy')

plt.ylabel('Relative Absolute Mean Error %')

plt.xlabel('Epoch')

plt.legend(['Training set', 'Validation set'], loc='upper left')

plt.show()

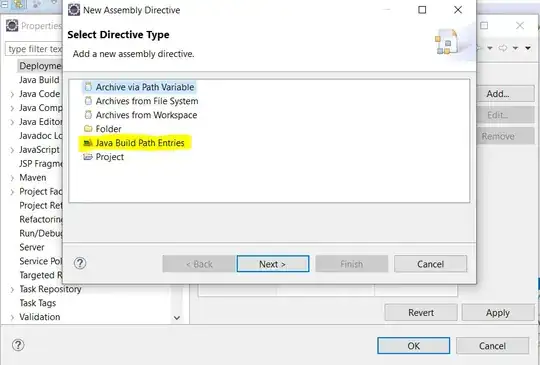

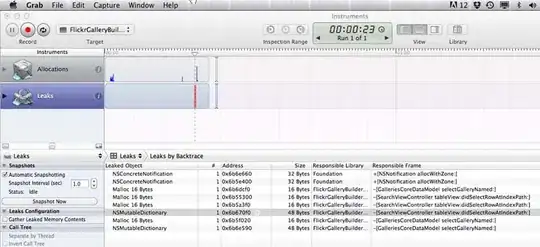

Training graphs

Case 1: Y synthesized

Case 2: X synthesized