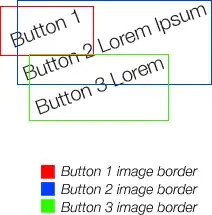

I am building a model in TensorFlow. I noticed that it was overfitting, and after modifying the model's parameters it seems that it no longer overfits, but I am not sure. Here are the two graphs that TensorBoard shows after training.

epoch_loss:

epoch_accuracy:

After changing the Smoothing parameter in the TensorBoard sidebar, the accuracy graph looks like this:

I have two questions:

- What is the Smoothing parameter for?

- What do you think of the model's behavior during training? Is it still overfitting?
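For context on the first question, here is my current understanding, which I would like someone to confirm or correct. I believe the Smoothing slider only changes how the curve is drawn, not the model or the logged data. The bold line is a debiased exponential moving average of the raw points, which stay visible as a faint line behind it. The sketch below is my own reconstruction of that idea in plain Python. The function name `tensorboard_smooth` is hypothetical, and it is not TensorBoard's actual code:

```python
import math


def tensorboard_smooth(values, weight):
    """Debiased exponential moving average (my assumption of what the slider does).

    weight: smoothing value in [0, 1); 0 returns the raw values unchanged.
    """
    if not 0.0 <= weight < 1.0:
        raise ValueError("weight must be in [0, 1)")
    smoothed = []
    last = 0.0      # running EMA, deliberately started at zero
    num_accum = 0   # how many finite points have been folded into the EMA
    for v in values:
        if not math.isfinite(v):
            smoothed.append(v)  # pass NaN/inf through without touching the EMA
            continue
        last = last * weight + (1.0 - weight) * v
        num_accum += 1
        # Starting `last` at 0 biases early values toward 0; divide that bias out.
        debias = 1.0 - weight ** num_accum
        smoothed.append(last / debias)
    return smoothed


# Example: a noisy accuracy-like curve smoothed with weight 0.6.
raw = [0.60, 0.72, 0.65, 0.78, 0.70, 0.81]
print(tensorboard_smooth(raw, 0.6))
```

If this is right, a higher weight flattens epoch-to-epoch noise but makes the line react more slowly. So the smoothed curve can hide or delay exactly the divergence between training and validation that I am trying to judge.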

Thanks, everyone.