In this paper, a very simple model is described to illustrate how the ant colony algorithm works. In short, it assumes two nodes which are connected via two links one of which is shorter. Then, given a pheromone increment and a pheromone evaporation dynamics, one expects that all ants eventually pick the shorter path.

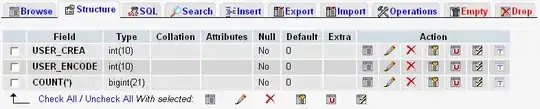

Now, I'm trying to replicate the simulation of this paper corresponding to scenario above whose result should be (more or less) like below.

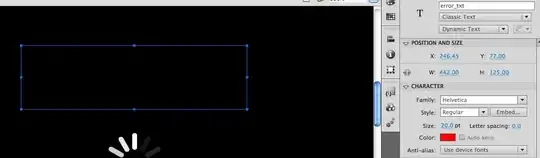

Here is an implementation of mine (taking the same specification as that of the test above).

import random

import matplotlib.pyplot as plt

N = 10

l1 = 1

l2 = 2

ru = 0.5

Q = 1

tau1 = 0.5

tau2 = 0.5

epochs = 150

success = [0 for x in range(epochs)]

def compute_probability(tau1, tau2):

return tau1/(tau1 + tau2), tau2/(tau1 + tau2)

def select_path(prob1, prob2):

if prob1 > prob2:

return 1

if prob1 < prob2:

return 2

if prob1 == prob2:

return random.choice([1,2])

def update_accumulation(link_id):

global tau1

global tau2

if link_id == 1:

tau1 += Q / l1

return tau1

if link_id == 2:

tau2 += Q / l2

return tau2

def update_evapuration():

global tau1

global tau2

tau1 *= (1-ru)

tau2 *= (1-ru)

return tau1, tau2

def report_results(success):

plt.plot(success)

plt.show()

for epoch in range(epochs-1):

temp = 0

for ant in range(N-1):

prob1, prob2 = compute_probability(tau1, tau2)

selected_path = select_path(prob1,prob2)

if selected_path == 1:

temp += 1

update_accumulation(selected_path)

update_evapuration()

success[epoch] = temp

report_results(success)

However, what I get is fairly weird as below.

It seems that my understanding of how pheromone should be updated is flawed.

So, can one address what I am missing in this implementation?