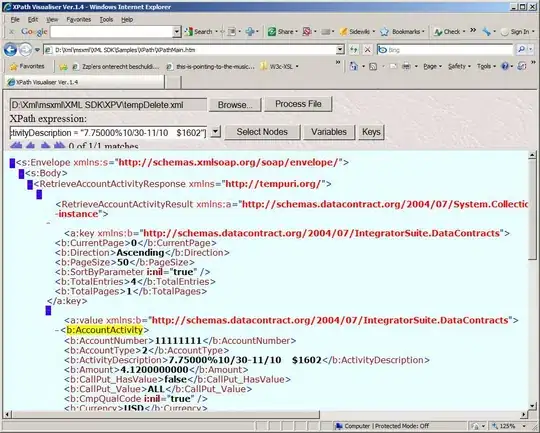

I am training a ConvLSTM model on sequences of shape (20, 230, 230, 3), i.e. 20 images per sequence, each of size 230 × 230 × 3. However, when I tried to increase the sequence size to (20, 240, 240, 3), TensorFlow raised an OOM (out-of-memory) error.

I train the model on my machine, which has four RTX 2080 Ti GPUs and an Intel Core i9 CPU, so I tried to split the model into four parts following this tutorial. I followed it exactly, but when I resize the sequences to (20, 240, 240, 3), the OOM error still occurs. I then used nvidia-smi to observe GPU usage and got the results shown in the screenshot: GPU memory is almost completely used up.
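As a rough sketch of how memory grows with frame size, the snippet below counts the bytes of dense tensors, assuming float32 values (the post does not state the dtype). Besides the input batch, it estimates the output of a single ConvLSTM2D layer with `return_sequences=True` and `'same'` padding, using a hypothetical `filters = 64`, since the real layer sizes are not given. During training, the gate activations kept for backpropagation typically add further tensors of similar size, so these numbers are a lower bound.

```python
# Rough memory estimate for dense tensors, assuming float32 (4 bytes per value).
BYTES_PER_FLOAT32 = 4
MIB = 2 ** 20


def tensor_bytes(shape, bytes_per_elem=BYTES_PER_FLOAT32):
    """Return the number of bytes needed to store a dense tensor of `shape`."""
    n = 1
    for dim in shape:
        n *= dim
    return n * bytes_per_elem


batch_size = 2
timesteps = 20
filters = 64  # hypothetical: the real layer widths are not given in the post

for side in (230, 240):
    # Input batch: (batch, time, height, width, channels)
    input_shape = (batch_size, timesteps, side, side, 3)
    # One ConvLSTM2D output, assuming return_sequences=True and padding='same'
    hidden_shape = (batch_size, timesteps, side, side, filters)
    print("{0}x{0}: input {1:.1f} MiB, one ConvLSTM output {2:.1f} MiB".format(
        side, tensor_bytes(input_shape) / MIB, tensor_bytes(hidden_shape) / MIB))

# Per-layer memory scales with height * width, so 230 -> 240 is about 8.9% more.
growth = (240 * 240) / (230 * 230)
print("growth factor: {:.3f}".format(growth))
```

Because every activation map grows by the same factor, a model that already sits close to the GPU limit at 230 × 230 can plausibly tip over at 240 × 240 even though the increase looks small.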

So why is the model still so large after splitting it into four parts? Before splitting, memory usage on GPU0 was almost 9400 MB. Strangely, after splitting, each of the four GPUs uses almost 9400 MB. Does this mean my model now takes 4 × 9400 MB?
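To compare the per-GPU figures with the single-GPU run more systematically, a small helper like the one below could query nvidia-smi through its `--query-gpu` and `--format=csv,noheader,nounits` options and sum the reported usage. This is only a sketch; the helper names are made up for illustration. Keep in mind that TensorFlow 1.x's default GPU allocator may reserve most of each visible GPU's memory up front, so `memory.used` can overstate what the model itself actually needs.

```python
import subprocess

# nvidia-smi options: query per-GPU memory as CSV, values in MiB, no header row.
QUERY_CMD = [
    "nvidia-smi",
    "--query-gpu=index,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_memory_csv(text):
    """Parse 'index, memory.used, memory.total' rows into a list of dicts."""
    rows = []
    for line in text.strip().splitlines():
        index, used, total = (field.strip() for field in line.split(","))
        rows.append({"gpu": int(index), "used_mib": int(used), "total_mib": int(total)})
    return rows


def total_used_mib(rows):
    """Sum the memory reported as used across all GPUs."""
    return sum(row["used_mib"] for row in rows)


def print_report():
    """Run nvidia-smi (must be on PATH) and print per-GPU and total usage."""
    # stdout=PIPE + universal_newlines keeps this compatible with Python 3.6
    out = subprocess.run(QUERY_CMD, stdout=subprocess.PIPE, check=True,
                         universal_newlines=True).stdout
    rows = parse_memory_csv(out)
    for row in rows:
        print("GPU{}: {} / {} MiB".format(row["gpu"], row["used_mib"], row["total_mib"]))
    print("total used: {} MiB".format(total_used_mib(rows)))
```

Calling `print_report()` while training is running prints one line per GPU plus the total, which can then be set against the roughly 9400 MB seen before splitting.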

PS: Environment: Python 3.6 + TensorFlow 1.12.0. Batch size: 2.