I have 2 questions regarding the resident memory used by a Java application.

Some background details:

- I have a Java application started with `-Xms2560M -Xmx2560M`.

- The Java application runs in a container. k8s allows the container to consume up to 4GB.

The issue:

Sometimes k8s restarts the process with exit code 137 (SIGKILL); apparently the process has reached the 4GB limit.

Application behaviour:

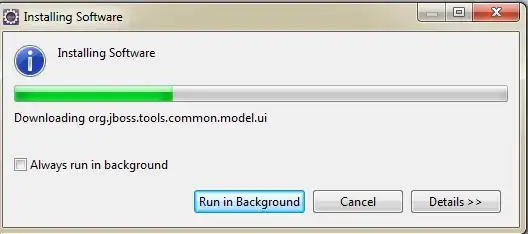

- Heap: the application seems to work in a way where all memory is used, then freed, then used and so on.

This snapshot illustrates it. The Y axis is the percentage of free heap memory, computed by the application as `((double) Runtime.getRuntime().freeMemory() / Runtime.getRuntime().totalMemory()) * 100`.
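For reference, here is a minimal sketch of how that percentage can be computed. The class and method names (`HeapUsage`, `percentFree`) are made up for illustration and are not part of the application. It also prints the percentage relative to `maxMemory()`; with `-Xms` equal to `-Xmx` the two figures should be close, but that is an assumption about this setup.

```java
public class HeapUsage {
    /** Percentage of free bytes relative to the given capacity. */
    public static double percentFree(long freeBytes, long capacityBytes) {
        return 100.0 * freeBytes / capacityBytes;
    }

    public static void main(String[] args) {
        Runtime rt = Runtime.getRuntime();
        long total = rt.totalMemory(); // heap currently committed by the JVM
        long free = rt.freeMemory();   // unused part of the committed heap
        long max = rt.maxMemory();     // upper bound, normally the -Xmx value
        long used = total - free;

        // Same formula as the chart: free relative to the committed heap.
        System.out.printf("free of committed: %.1f%%%n", percentFree(free, total));
        // Free relative to the maximum heap the JVM may grow to.
        System.out.printf("free of max:       %.1f%%%n", percentFree(max - used, max));
    }
}
```

Note that both figures describe the Java heap only; neither says anything about the resident memory of the process.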

I was also able to confirm this using HotSpotDiagnosticMXBean, which allows creating a dump with only reachable objects and one that also includes unreachable objects.

The dump that includes unreachable objects was about the size of `-Xmx`.
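The two kinds of dump can be produced roughly as follows. This is a sketch rather than the exact code from the application: the class name `HeapDumps` and the output paths are placeholders, and it assumes a HotSpot JVM where `com.sun.management.HotSpotDiagnosticMXBean` is available.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.io.IOException;
import java.lang.management.ManagementFactory;
import java.nio.file.Files;
import java.nio.file.Path;

public class HeapDumps {
    /**
     * Writes a heap dump to the given file and returns its size in bytes.
     * live = true dumps only reachable objects; live = false also
     * includes objects that are unreachable but not yet collected.
     */
    public static long dump(Path file, boolean live) throws IOException {
        Files.deleteIfExists(file); // dumpHeap refuses to overwrite an existing file
        HotSpotDiagnosticMXBean bean =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        bean.dumpHeap(file.toString(), live);
        return Files.size(file);
    }

    public static void main(String[] args) throws IOException {
        // Placeholder paths; recent JDKs require the ".hprof" extension.
        Path dir = Files.createTempDirectory("heapdumps");
        long liveSize = dump(dir.resolve("live.hprof"), true);
        long allSize = dump(dir.resolve("all.hprof"), false);
        System.out.println("reachable only:      " + liveSize + " bytes");
        System.out.println("incl. unreachable:   " + allSize + " bytes");
    }
}
```

Comparing the two file sizes gives a rough idea of how much of the heap is garbage waiting to be collected at that moment.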

In addition, this is also what I see when creating a dump on the machine itself: the resident memory can show 3GB while the dump (taken with jcmd) is only 0.5GB.

First question:

Is this behaviour reasonable, or does it indicate a memory usage issue? It doesn't seem like a typical leak.

Second question:

I have seen other questions that try to understand what the resident memory used by the application is comprised of.

Worth mentioning:

Java using much more memory than heap size (or size correctly Docker memory limit)

And

Native memory consumed by JVM vs java process total memory usage

I am not sure whether any of this can account for the 1-1.5GB between `-Xmx` and the 4GB k8s limit.
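As a starting point for narrowing down that gap, the JVM's own view of its memory outside the heap can be printed from inside the process. The sketch below uses only the standard `java.lang.management` beans; the class name `OffHeapReport` and the map keys are made up for illustration. It does not cover everything that contributes to resident memory (thread stacks, GC structures and native allocations by libraries are not reported in bytes here), so it is a partial picture at best.

```java
import java.lang.management.BufferPoolMXBean;
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;
import java.util.LinkedHashMap;
import java.util.Map;

public class OffHeapReport {
    /** Collects the memory figures the JVM exposes through its MXBeans, in bytes. */
    public static Map<String, Long> collect() {
        Map<String, Long> bytes = new LinkedHashMap<>();
        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        MemoryUsage nonHeap = ManagementFactory.getMemoryMXBean().getNonHeapMemoryUsage();
        bytes.put("heap committed", heap.getCommitted());
        // Non-heap covers areas such as Metaspace and the code cache.
        bytes.put("non-heap committed", nonHeap.getCommitted());
        // Buffer pools cover direct and mapped NIO buffers.
        for (BufferPoolMXBean pool :
                ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class)) {
            bytes.put("buffer pool " + pool.getName(), pool.getMemoryUsed());
        }
        return bytes;
    }

    public static void main(String[] args) {
        collect().forEach((name, b) ->
                System.out.printf("%-28s %8d MiB%n", name, b / (1024 * 1024)));
        // Each live thread also reserves a native stack that is not counted above.
        System.out.println("live threads: "
                + ManagementFactory.getThreadMXBean().getThreadCount());
    }
}
```

For a fuller breakdown, the JVM can be started with `-XX:NativeMemoryTracking=summary` and queried with `jcmd <pid> VM.native_memory summary`, although memory allocated by native libraries outside the JVM will not appear there either.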

If you were to provide some sort of checklist to close in on the problem, what would it be? (It feels like I can't see the forest for the trees.)

Are there any free tools that can help (besides the ones for analysing a memory dump)?