As a vector grows, it sometimes needs to relocate all the data. If you can avoid that, it'll often be faster.

One way is to reserve the number of elements you know that you need. Another is to create the vector with the correct number of elements from start.

vector<int> v(n); // created with n elements

for(auto& x : v) cin >> x; // a range-based for loop (no push_back needed)

//sort(v.begin(), v.begin() + n); // no, why begin() + n? Do:

sort(v.begin(), v.end());

for(auto x : v) cout << x; // another range-based for loop

One thing that could possibly speed up the sorting (requires C++17) is to use the overload that accepts an ExecutionPolicy and see if the implementation has support for sorting in parallel:

#include <execution>

//...

sort(std::execution::par, v.begin(), v.end()); // try sorting in parallel

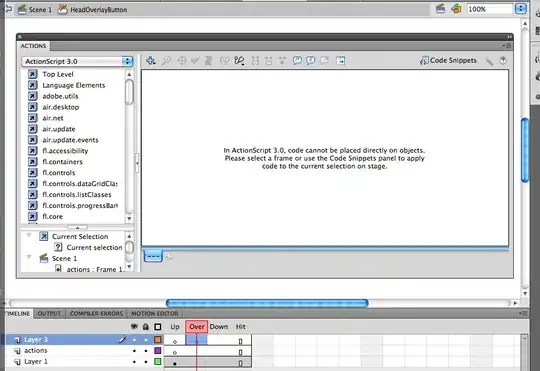

I created a quick-bench test for three cases:

- Not using the knowledge of

n to reserve space at all, letting it realloc.

- Using

reserve(n)

- Using

n to create v with the right size at construction

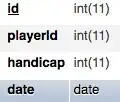

Result:

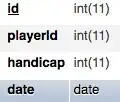

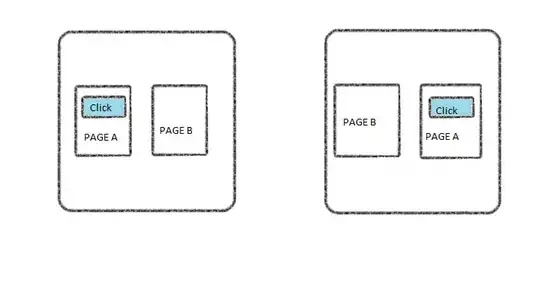

Initialization of a vector does come with some cost though. Here's a comparison between the winner of the above and using a unique_ptr<int[]> created from a raw new[]:

To sum it up: Sorting may be sped up by using parallel sorting and filling1 your v can be made ~26 times faster than your current implementation does it:

1 - By filling, I'm referring to the actual filling of v, which does not include reading the values