I'm trying to extract all three views (axial, sagittal and coronal) from a CTA in DICOM format, using the SimpleITK library.

I can correctly read the series from a given directory:

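```python
...
import logging

import imageio
import SimpleITK as sitk

...

reader = sitk.ImageSeriesReader()
# Collect the file names of the DICOM series found in input_dir
dicom_names = reader.GetGDCMSeriesFileNames(input_dir)
reader.SetFileNames(dicom_names)

# Execute the reader
image = reader.Execute()
...
```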

Then, using NumPy arrays as suggested in this question, I'm able to extract and save the three views:

```python
...
# NumPy array with axes in (z, y, x) order
image_array = sitk.GetArrayFromImage(image)
...
# Axial view: one PNG per slice along the first (z) axis
for i in range(image_array.shape[0]):
    output_file_name = axial_out_dir + 'axial_' + str(i) + '.png'
    logging.debug('Saving image to ' + output_file_name)
    imageio.imwrite(output_file_name, convert_img(image_array[i, :, :], axial_min, axial_max), format='png')
...
```

The other two views are produced by saving `image_array[:, i, :]` and `image_array[:, :, i]`. `convert_img(...)` only converts the data type, so it does not alter the shape.
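With the (z, y, x) axis order of `sitk.GetArrayFromImage`, `image_array[:, i, :]` is a coronal slice and `image_array[:, :, i]` a sagittal one, both with z running down the rows. A minimal sketch, assuming an identity (or near-identity) direction matrix in DICOM's LPS convention, where a larger slice index means more superior: flipping the rows with `np.flipud` puts the head at the top of the saved PNG. The function name `orthogonal_slices` is hypothetical, and whether a flip is needed depends on the actual direction cosines of the series.

```python
import numpy as np


def orthogonal_slices(volume, k, j, i):
    """Return (axial, coronal, sagittal) 2D slices from a (z, y, x) array.

    Coronal and sagittal slices keep z as their row axis; np.flipud puts
    the highest z index (superior, under the LPS assumption) on the top row.
    """
    axial = volume[k, :, :]                # rows = y, cols = x
    coronal = np.flipud(volume[:, j, :])   # rows = z (flipped), cols = x
    sagittal = np.flipud(volume[:, :, i])  # rows = z (flipped), cols = y
    return axial, coronal, sagittal
```

Each returned slice can be passed to `convert_img` and `imageio.imwrite` exactly like the axial slices in the loop above.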

However, the coronal and sagittal views are stretched and rotated, and they have wide black bands (very wide in some slices).

Here's a screenshot from 3D Slicer:

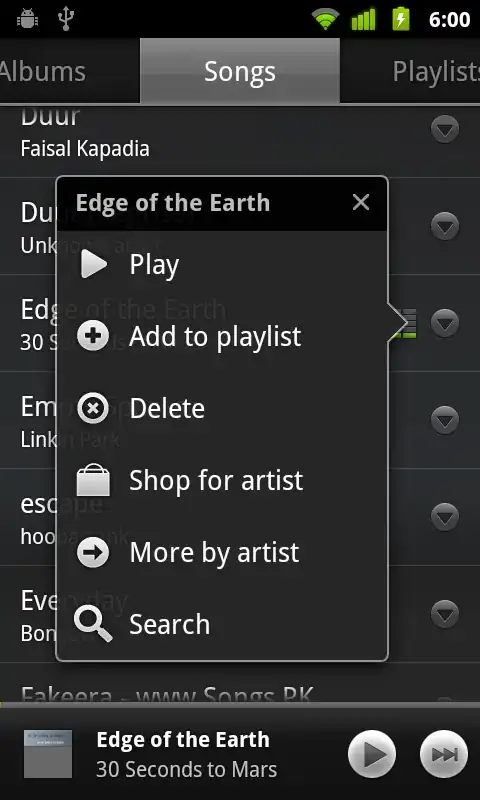

while this is the output of my code:

[Output images: Axial, Sagittal, Coronal]

The image size is 512x512x1723, which results in axial PNGs of 512x512 pixels and coronal and sagittal PNGs of 512x1723 pixels, so the shapes seem correct.

Should I try using the PermuteAxes filter? The problem is that I couldn't find any documentation on its use in Python (or in other languages, since the documentation page returns a 404).

Is there also a way to improve the contrast? I tried SimpleITK's AdaptiveHistogramEqualization filter, but the result is much worse than the 3D Slicer visualization, and it is also very slow.
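3D Slicer's slice views display a volume through a window/level setting, i.e. a fixed linear mapping of a chosen intensity range onto the display range, rather than through histogram equalization. The same kind of mapping is a cheap, vectorized operation when converting to PNG. A minimal sketch of a possible replacement for `convert_img` (the name `window_to_uint8` and its signature are hypothetical); the level 300 / width 600 in the usage below are illustrative values only, not a Slicer preset.

```python
import numpy as np


def window_to_uint8(slice_2d, level, width):
    """Map intensities in [level - width/2, level + width/2] linearly to 0..255.

    Values below the window become 0 and values above it become 255.
    """
    lo = level - width / 2.0
    hi = level + width / 2.0
    scaled = (slice_2d.astype(np.float32) - lo) / (hi - lo)  # 0 at lo, 1 at hi
    return (np.clip(scaled, 0.0, 1.0) * 255.0).round().astype(np.uint8)
```

For example, `window_to_uint8(image_array[i, :, :], level=300, width=600)`. Using one window for every slice keeps the contrast consistent across the whole stack; SimpleITK's `sitk.IntensityWindowing` filter performs a comparable linear mapping directly on an `Image`.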

Any help is appreciated, thank you!