I want to stitch several images (all of identical resolution) into a panorama using OpenCV, without using the Stitcher class. I tried the algorithm described here, but instead of the desired panorama I get an image made up of the last image to be stitched plus a large black area. I wrote out an image at each iteration and the result is always the same: the current image plus a black area that grows each time.

    import numpy
    import cv2

    # images is an array of images that i need to stitch
    matcher = cv2.DescriptorMatcher_create(cv2.DescriptorMatcher_BRUTEFORCE_HAMMING)
    ORB = cv2.ORB_create()
    homography = 0
    panorama = 0

    for image in range(0, len(images) - 1):
        key1, desc1 = ORB.detectAndCompute(images[image], None)
        key2, desc2 = ORB.detectAndCompute(images[image + 1], None)

        matches = matcher.match(desc1, desc2, None)
        matches = sorted(matches, key=lambda x: x.distance, reverse=True)
        numGoodMatches = int(len(matches) * 0.15)
        matches2 = matches[-numGoodMatches:]

        points1 = numpy.zeros((len(matches2), 2), dtype=numpy.float32)
        points2 = numpy.zeros((len(matches2), 2), dtype=numpy.float32)
        for i, match in enumerate(matches2):
            points1[i, :] = key1[match.queryIdx].pt
            points2[i, :] = key2[match.trainIdx].pt

        h, mask = cv2.findHomography(points2, points1, cv2.RANSAC)

        if isinstance(homography, int):
            homography = h
            img1H, img1W = images[image].shape
            img2H, img2W = images[image + 1].shape
            aligned = cv2.warpPerspective(images[image + 1], h, (img1W + img2W, img2H))
            stitchedImage = numpy.copy(aligned)
            stitchedImage[0:img1H, 0:img2W] = images[image]
            panorama = stitchedImage
        else:
            h *= homography
            homography = h
            img1H, img1W = panorama.shape
            img2H, img2W = images[image + 1].shape
            aligned = cv2.warpPerspective(images[image + 1], h, (img1W + img2W, img2H))
            stitchedImage = numpy.copy(aligned)
            stitchedImage[0:img1H, 0:img2W] = images[image + 1]
            panorama = stitchedImage

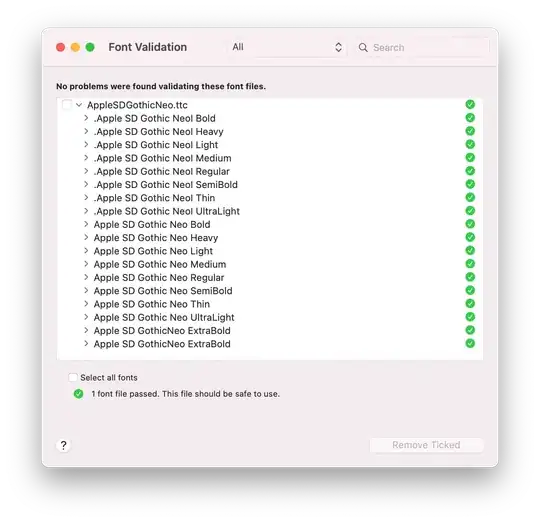

Example of the images I get:

The first image is correct.

The last image has the expected width (n * original width), but it contains only one image; the rest is a black area.
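
One detail in the loop that may be worth checking is `h *= homography`: on NumPy arrays, `*=` multiplies element by element, whereas chaining two homographies is normally done with a matrix product. The sketch below uses only NumPy and two made-up translation homographies (`h_01` and `h_12` are hypothetical values, not computed from the images above) to show how the two operations differ. It illustrates the difference under those assumptions and is not a confirmed fix for the black output.

```python
import numpy as np

# Hypothetical homographies: each one shifts points 100 px to the right.
# h_01 maps image 1 into image 0's frame, h_12 maps image 2 into image 1's frame.
h_01 = np.array([[1.0, 0.0, 100.0],
                 [0.0, 1.0, 0.0],
                 [0.0, 0.0, 1.0]])
h_12 = h_01.copy()

# Element-wise product, which is what `*=` computes on NumPy arrays.
elementwise = h_12 * h_01

# Matrix product: apply h_12 first, then h_01 (image 2 -> image 0's frame).
chained = h_01 @ h_12


def apply_homography(h, x, y):
    """Map the pixel (x, y) through the 3x3 homography h."""
    px, py, pw = h @ np.array([x, y, 1.0])
    return px / pw, py / pw


origin_elementwise = apply_homography(elementwise, 0.0, 0.0)
origin_chained = apply_homography(chained, 0.0, 0.0)
print(origin_elementwise)
print(origin_chained)
```

With these made-up values the element-wise product sends the top-left pixel to x = 10000, far outside a canvas a few images wide, while the matrix product sends it to x = 200, two image shifts to the right.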