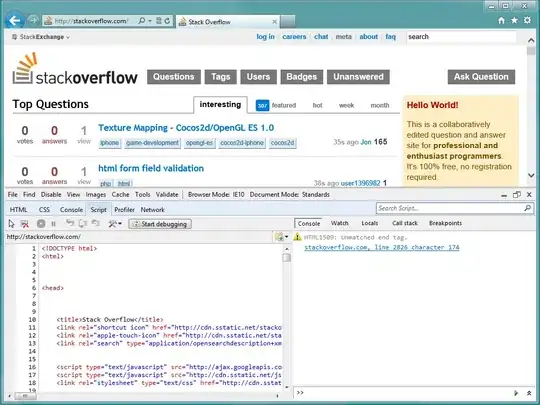

It is always useful to use your browser's "developer tools" to inspect the web page and figure out how to extract the information you need.

A couple of tutorials that explain this, found with a quick search:

https://towardsdatascience.com/tidy-web-scraping-in-r-tutorial-and-resources-ac9f72b4fe47

https://www.scrapingbee.com/blog/web-scraping-r/

For example, on this particular webpage, when you select a new date in the dropdown list, the page sends a GET request to the server, which returns a JSON string with the data for the requested date. The page then updates the table with that data (probably using JavaScript; I did not check this).

So in this case you need to emulate that behavior: capture the JSON response and parse the information in it.

In Chrome, if you look at the Network pane of the developer tools, you will see that the address of the GET request has the form:

https://www.timeanddate.com/scripts/cityajax.php?n=sweden/stockholm&mode=historic&hd=YYYYMMDD&month=M&year=YYYY&json=1

where YYYY is the four-digit year, MM the two-digit (zero-padded) month, DD the two-digit (zero-padded) day of the month, and M the month without a leading zero (one digit for January to September).
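As a rough sketch of that URL pattern, the helper below builds the address from a Date instead of separate year, month and day values. The name build_url and its place argument are hypothetical (not part of the site or of any package), and whether other values of n work the same way as sweden/stockholm is an assumption.

```r
# Hypothetical helper: build the request URL for one or more dates,
# following the pattern shown above. Only base R is used.
build_url <- function(date, place = "sweden/stockholm") {
  date <- as.Date(date)
  sprintf(
    "https://www.timeanddate.com/scripts/cityajax.php?n=%s&mode=historic&hd=%s&month=%d&year=%s&json=1",
    place,
    format(date, "%Y%m%d"),          # hd: YYYYMMDD, zero-padded
    as.integer(format(date, "%m")),  # month: no leading zero
    format(date, "%Y")               # year: four digits
  )
}

# sprintf is vectorized, so a sequence of dates gives one URL per day
urls <- build_url(seq(as.Date("2020-03-07"), by = "day", length.out = 3))
```

Passing a vector of dates is convenient if you later want to loop over several days and fetch each URL in turn.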

So you can have your code send the GET request directly to this address, get the JSON response and parse it accordingly.

    library(rjson)
    library(rvest)
    library(plyr)
    library(dplyr)

    year <- 2020
    month <- 3
    day <- 7

    # create formatted url with desired dates
    url <- sprintf('https://www.timeanddate.com/scripts/cityajax.php?n=sweden/stockholm&mode=historic&hd=%4d%02d%02d&month=%d&year=%4d&json=1', year, month, day, month, year)

    webpage <- read_html(url) %>% html_text()

    # the JSON string is not formatted the way the fromJSON function needs,
    # so I had to parse it manually

    # split string on each row
    x <- strsplit(webpage, "\\{c:")[[1]]

    # remove first element (garbage)
    x <- x[2:length(x)]

    # clean last 2 characters in each row
    x <- sapply(x, FUN=function(xx){substr(xx[1], 1, nchar(xx[1])-2)}, USE.NAMES = FALSE)

    # function to get actual data in each row and put it into a dataframe
    parse.row <- function(row.string) {
      # parse columns using '},{' as divider
      a <- strsplit(row.string, '\\},\\{')[[1]]
      # remove some leftover characters from parsing
      a <- gsub('\\[\\{|\\}\\]', '', a)
      # remove what I think is metadata
      a <- gsub('h:', '', gsub('s:.*,', '', a))
      df <- data.frame(time=a[1], temp=a[3], weather=a[4], wind=a[5], humidity=a[7],
                       barometer=a[8])
      return(df)
    }

    # use ldply to run function parse.row for each element of x
    # and combine the results in a single dataframe
    df.final <- ldply(x, parse.row)

Result:

    > head(df.final)
                      time    temp           weather      wind humidity    barometer
    1 "12:20 amSat, Mar 7" "28 °F" "Passing clouds." "No wind"   "100%" "29.80 \\"Hg"
    2           "12:50 am" "28 °F" "Passing clouds." "No wind"   "100%" "29.80 \\"Hg"
    3            "1:20 am" "28 °F" "Passing clouds."   "1 mph"   "100%" "29.80 \\"Hg"
    4            "1:50 am" "30 °F" "Passing clouds."   "2 mph"   "100%" "29.80 \\"Hg"
    5            "2:20 am" "30 °F" "Passing clouds."   "1 mph"   "100%" "29.80 \\"Hg"
    6            "2:50 am" "30 °F"     "Low clouds." "No wind"   "100%" "29.80 \\"Hg"

I left everything as strings in the data frame, but you can convert the columns to numeric or dates if you need.
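A minimal sketch of such a conversion, assuming the columns look like the rows printed above (each value still wrapped in literal double quotes). The data frame df.sample is a small stand-in for df.final, and to_number is a hypothetical helper, not something from the packages loaded earlier.

```r
# Small stand-in for df.final, modeled on the rows shown above
# (each value still carries the literal double quotes from the response).
df.sample <- data.frame(
  temp      = c("\"28 \u00b0F\"", "\"30 \u00b0F\""),
  wind      = c("\"No wind\"", "\"2 mph\""),
  humidity  = c("\"100%\"", "\"100%\""),
  barometer = c("\"29.80 \\\"Hg\"", "\"29.80 \\\"Hg\""),
  stringsAsFactors = FALSE
)

# Hypothetical helper: keep only digits, sign and decimal point, then convert.
# Strings without any digits (e.g. "No wind") become NA.
to_number <- function(x) as.numeric(gsub("[^0-9.-]", "", x))

df.clean <- data.frame(
  temp_f       = to_number(df.sample$temp),
  # "No wind" has no digits, so map it to 0 explicitly
  wind_mph     = ifelse(grepl("No wind", df.sample$wind), 0, to_number(df.sample$wind)),
  humidity_pct = to_number(df.sample$humidity),
  barometer_in = to_number(df.sample$barometer)
)
```

The time column needs more care, since the first row of each day also carries the date ("Sat, Mar 7"); one option is to extract the clock time with a regular expression and combine it with the requested date before calling as.POSIXct.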