I wrote a script to validate and clean a complex DataFrame, with calls to a database, and divided it into modules. The modules frequently import from one another to use functions and variables that are spread across different files and directories.

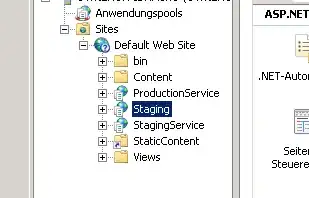

Please see image to see how files are distributed across directories:

Directory structure

An example would look like this:

    # Module to clean addresses: address_cleaner.py
    def address_cleaner(dataframe):
        ...
        return dataframe

    # Pipeline to run all cleaning functions in order: pipeline.py
    from file1 import function1
    ...

    def pipeline():
        df = function1()
        ...function2(df)
        ...function3(df)
        ...
        return None

    # Executable file where I read environment variables and run the pipeline: executable.py
    from pipeline import pipeline
    import os
    ...
    pipeline()
    ...

When I run this on Unix:

    % cd myproject
    % python executable.py

I get the following error for one of the imports, which I use to avoid hardcoding the environment variable names as strings:

    File "path/to/executable.py", line 1, in <module>
      from environment_constants import SSH_USERNAME, SSH_PASSWORD, PROD_USERNAME, PROD_PASSWORD
    ModuleNotFoundError: No module named 'environment_constants'

When I run executable.py on Unix, which calls the pipeline shown above, I get a ModuleNotFoundError. It seems that none of the imports between files can find each other, especially when the files are in different directories. All of these directories belong to the same parent directory, "CD-cleaner".
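To make the failure concrete, here is a minimal sketch that reproduces the same kind of error in a throwaway directory. The layout is an assumption, since the real one is only visible in the image: a hypothetical `constants/` folder holds `environment_constants.py`, and `executable.py` sits one level above it. The helper names `build_demo_project` and `run_script` are made up for illustration. The sketch relies on the fact that, when a script is run directly, Python adds the script's own directory to `sys.path` but not its subdirectories, and it shows that putting the folder on `PYTHONPATH` lets the import resolve.

```python
import os
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Optional


def build_demo_project(root: Path) -> Path:
    """Create a hypothetical two-directory layout and return the entry script."""
    constants_dir = root / "constants"  # assumed folder name, not from the post
    constants_dir.mkdir()
    (constants_dir / "environment_constants.py").write_text(
        'SSH_USERNAME = "SSH_USERNAME"\n'
    )
    script = root / "executable.py"
    script.write_text(
        "from environment_constants import SSH_USERNAME\n"
        "print(SSH_USERNAME)\n"
    )
    return script


def run_script(script: Path, extra_path: Optional[str] = None) -> subprocess.CompletedProcess:
    """Run the script in a fresh interpreter, optionally setting PYTHONPATH."""
    env = dict(os.environ)
    env.pop("PYTHONPATH", None)  # start from a clean module search path
    if extra_path is not None:
        env["PYTHONPATH"] = extra_path
    return subprocess.run(
        [sys.executable, str(script)],
        cwd=script.parent,
        env=env,
        capture_output=True,
        text=True,
    )


with tempfile.TemporaryDirectory() as tmp:
    script = build_demo_project(Path(tmp))
    # Only the script's own directory is searched, so the import fails.
    failing = run_script(script)
    # With the subdirectory on PYTHONPATH, the same import succeeds.
    working = run_script(script, extra_path=str(Path(tmp) / "constants"))

print("failed without PYTHONPATH:", failing.returncode != 0)
print("output with PYTHONPATH:", working.stdout.strip())
```

Setting `PYTHONPATH` is only one way to make the folder visible and is used here because it keeps the demonstration short. Whether it is the right approach for the real project depends on the actual directory layout.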

Is there a way to make these files import from each other even if they are in different folders of the project?

Thanks in advance.