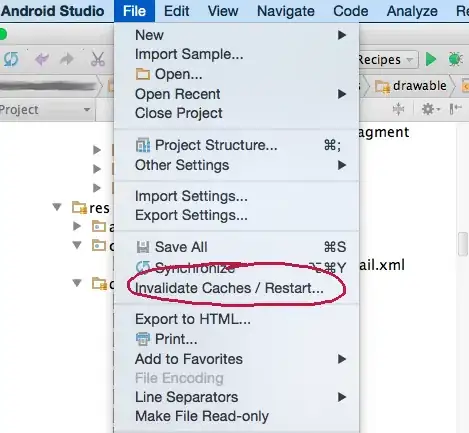

I took an hour to whip up a basic sample for you. It's in C# using my own audio I/O library, XT-Audio (so, plug intended), whereas doing the same with raw WASAPI in C++ would probably take me half a day. Anyway, I believe this comes really close to what you're looking for. As you can see below, this app has the world's most awesome GUI:

*[Screenshot of the sample app's window with its Start and Stop buttons] (source: github.io)*

As soon as you press Start, the app starts translating keyboard input to audio. Press and hold the C, D, E, F and G keys to generate musical notes; the app also handles multiple overlapping notes (chords). I chose WASAPI shared mode as the backend because it supports floating-point audio, but this works just as well in exclusive mode if you convert the audio to a format the device accepts there, such as 16-bit integer PCM.
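
Since your own code is C++, here is roughly what the float-to-16-bit conversion for exclusive mode could look like. This is a minimal sketch, assuming interleaved float samples in the nominal [-1, 1] range and a device that accepted a 16-bit format; `FloatToPcm16` is a hypothetical helper, not a WASAPI function.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper (not a WASAPI API): convert interleaved float samples
// in the nominal [-1, 1] range to interleaved signed 16-bit PCM.
std::vector<std::int16_t> FloatToPcm16(const std::vector<float>& in) {
    std::vector<std::int16_t> out(in.size());
    for (std::size_t i = 0; i < in.size(); ++i) {
        // Clamp first so an overdriven mix clips instead of wrapping around.
        float s = std::clamp(in[i], -1.0f, 1.0f);
        // Scale by 32767 so +1.0 and -1.0 map symmetrically.
        out[i] = static_cast<std::int16_t>(std::lround(s * 32767.0f));
    }
    assert(out.size() == in.size());
    return out;
}
```

For example, `FloatToPcm16({0.0f, 0.5f, 2.0f})` yields 0, 16384 and 32767; the out-of-range 2.0 is clipped rather than overflowing.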

One difference between this library and raw WASAPI is that the library manages the audio thread, and the application's audio callback is invoked periodically to synthesize audio data. This translates easily back to native WASAPI in C++, though: on a background thread, call IAudioRenderClient::GetBuffer/ReleaseBuffer in a loop and do your processing between those calls. In shared mode, use IAudioClient::GetCurrentPadding to find out how many frames you can write, and wait for the device between iterations (for example on the event handle registered with IAudioClient::SetEventHandle) instead of spinning.
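
The shape of that loop can be sketched in portable C++ so it runs without Windows. `FakeEndpoint` is a hypothetical stand-in: its members only mimic the call pattern of `IAudioClient::GetCurrentPadding` and `IAudioRenderClient::GetBuffer`/`ReleaseBuffer` (no COM, no HRESULTs), and `Consume` fakes the device playing one period, which in real code is where the thread would block on the buffer event.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <vector>

// Hypothetical stand-in for a shared-mode render endpoint. Only the call
// pattern mirrors WASAPI: no COM, no HRESULTs, no real device.
class FakeEndpoint {
public:
    FakeEndpoint(std::uint32_t bufferFrames, std::uint32_t channels)
        : bufferFrames_(bufferFrames), scratch_(bufferFrames * channels) {}

    std::uint32_t BufferFrames() const { return bufferFrames_; }

    // Frames queued but not yet played (cf. IAudioClient::GetCurrentPadding).
    std::uint32_t GetCurrentPadding() const { return padding_; }

    // Writable space for `frames` frames (cf. IAudioRenderClient::GetBuffer).
    // A real endpoint returns the next free region of its ring buffer;
    // this fake always returns the same scratch area.
    float* GetBuffer(std::uint32_t frames) {
        assert(frames <= bufferFrames_ - padding_);
        return scratch_.data();
    }

    // Queue the frames just written (cf. IAudioRenderClient::ReleaseBuffer).
    void ReleaseBuffer(std::uint32_t frames) {
        padding_ += frames;
        totalWritten_ += frames;
    }

    // Simulate the device playing up to `frames` queued frames.
    void Consume(std::uint32_t frames) { padding_ -= (frames < padding_ ? frames : padding_); }

    std::uint64_t TotalWritten() const { return totalWritten_; }

private:
    std::uint32_t bufferFrames_;
    std::vector<float> scratch_;
    std::uint32_t padding_ = 0;
    std::uint64_t totalWritten_ = 0;
};

// Render loop: once per period, fill whatever space is free.
// `render(data, frames)` writes interleaved samples, like the C# OnBuffer.
void RenderLoop(FakeEndpoint& ep, std::uint32_t periodFrames, int periods,
                const std::function<void(float*, std::uint32_t)>& render) {
    for (int i = 0; i < periods; ++i) {
        std::uint32_t available = ep.BufferFrames() - ep.GetCurrentPadding();
        if (available > 0) {
            float* data = ep.GetBuffer(available);
            render(data, available);  // synthesize between GetBuffer and ReleaseBuffer
            ep.ReleaseBuffer(available);
        }
        // Real code would block here, e.g. WaitForSingleObject on the event
        // handle registered with IAudioClient::SetEventHandle.
        ep.Consume(periodFrames);
    }
}
```

With a 480-frame buffer drained 120 frames per period, the first pass fills the whole buffer and every later pass tops up exactly the 120 frames the device played.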

Anyway, the key part is this: the app uses only two threads, one for the UI (managed by WinForms) and one for audio (managed by the audio library), and yet it can play multiple musical notes simultaneously, which I believe is at the heart of your question.
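
In C++ the same two-thread arrangement needs little more than a set of atomic flags: the UI thread writes them on key down/up, and the single audio thread reads all of them while mixing, so chords come for free and no thread per note is needed. A minimal sketch, assuming standard C++17 threads stand in for the Win32 UI thread and the WASAPI render thread; `Notes`, `OnKey` and `AudioLoop` are hypothetical names.

```cpp
#include <array>
#include <atomic>
#include <cassert>
#include <thread>

// Hypothetical C++ counterpart of the C# sample's _notesActive array.
// std::atomic<bool> gives well-defined visibility between the UI thread
// (writer) and the audio thread (reader) without locks.
struct Notes {
    std::array<std::atomic<bool>, 5> active{};  // C, D, E, F, G

    // UI thread: map a key press/release to a note flag.
    void OnKey(char key, bool down) {
        const char keys[] = {'c', 'd', 'e', 'f', 'g'};
        for (int i = 0; i < 5; ++i)
            if (keys[i] == key) active[i].store(down, std::memory_order_relaxed);
    }

    // Audio thread: how many notes go into the next mixed sample.
    int CountActive() const {
        int n = 0;
        for (const auto& a : active) n += a.load(std::memory_order_relaxed) ? 1 : 0;
        return n;
    }
};

// Audio thread body: keep "rendering" until asked to stop, recording the
// largest chord it mixed. A real loop would synthesize samples here.
void AudioLoop(const Notes& notes, std::atomic<bool>& stop, std::atomic<int>& maxChord) {
    while (!stop.load()) {
        int n = notes.CountActive();
        if (n > maxChord.load()) maxChord.store(n);
        std::this_thread::yield();
    }
}
```

For example, calling `OnKey('c', true)`, `OnKey('e', true)` and `OnKey('g', true)` from one thread while `AudioLoop` runs on another lets the audio side see a three-note chord.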

I uploaded the full Visual Studio solution and binaries here: WasapiSynthSample. For completeness, I'll post the interesting parts of the code below.

```csharp
using System;
using System.Threading;
using System.Windows.Forms;
using Xt;

namespace WasapiSynthSample
{
    public partial class Program : Form
    {
        // sampling rate
        const int Rate = 48000;
        // stereo
        const int Channels = 2;
        // default format for WASAPI shared mode
        const XtSample Sample = XtSample.Float32;
        // C, D, E, F, G
        static readonly float[] NoteFrequencies = { 523.25f, 587.33f, 659.25f, 698.46f, 783.99f };

        [STAThread]
        static void Main()
        {
            // initialize audio library
            using (var platform = XtAudio.Init(null, IntPtr.Zero, null))
            {
                Application.EnableVisualStyles();
                Application.SetCompatibleTextRenderingDefault(false);
                Application.ThreadException += OnApplicationThreadException;
                AppDomain.CurrentDomain.UnhandledException += OnCurrentDomainUnhandledException;
                Application.Run(new Program(platform));
            }
        }

        // pop a messagebox on any error
        static void OnApplicationThreadException(object sender, ThreadExceptionEventArgs e)
            => OnError(e.Exception);

        static void OnCurrentDomainUnhandledException(object sender, UnhandledExceptionEventArgs e)
            => OnError((Exception)e.ExceptionObject);

        static void OnError(Exception e)
        {
            var text = e.ToString();
            if (e is XtException xte) text = XtAudio.GetErrorInfo(xte.GetError()).ToString();
            MessageBox.Show(text);
        }

        XtStream _stream;
        readonly XtPlatform _platform;
        // note phases, one per note, kept in [0, 1)
        readonly float[] _phases = new float[5];
        // tracks key down/up (written on the UI thread, read on the audio thread)
        readonly bool[] _notesActive = new bool[5];

        public Program(XtPlatform platform)
        {
            _platform = platform;
            InitializeComponent();
            // deliver key events to the form even when a button has focus
            KeyPreview = true;
        }

        // activate note
        protected override void OnKeyDown(KeyEventArgs e)
        {
            base.OnKeyDown(e);
            if (e.KeyCode == Keys.C) _notesActive[0] = true;
            if (e.KeyCode == Keys.D) _notesActive[1] = true;
            if (e.KeyCode == Keys.E) _notesActive[2] = true;
            if (e.KeyCode == Keys.F) _notesActive[3] = true;
            if (e.KeyCode == Keys.G) _notesActive[4] = true;
        }

        // deactivate note
        protected override void OnKeyUp(KeyEventArgs e)
        {
            base.OnKeyUp(e);
            if (e.KeyCode == Keys.C) _notesActive[0] = false;
            if (e.KeyCode == Keys.D) _notesActive[1] = false;
            if (e.KeyCode == Keys.E) _notesActive[2] = false;
            if (e.KeyCode == Keys.F) _notesActive[3] = false;
            if (e.KeyCode == Keys.G) _notesActive[4] = false;
        }

        // stop stream
        void OnStop(object sender, EventArgs e)
        {
            _stream?.Stop();
            _stream?.Dispose();
            _stream = null;
            _start.Enabled = true;
            _stop.Enabled = false;
        }

        // start stream
        void OnStart(object sender, EventArgs e)
        {
            var service = _platform.GetService(XtSystem.WASAPI);
            var id = service.GetDefaultDeviceId(true);
            using (var device = service.OpenDevice(id))
            {
                var mix = new XtMix(Rate, Sample);
                var channels = new XtChannels(0, 0, Channels, 0);
                var format = new XtFormat(in mix, in channels);
                var buffer = device.GetBufferSize(in format).current;
                var streamParams = new XtStreamParams(true, OnBuffer, null, null);
                var deviceParams = new XtDeviceStreamParams(in streamParams, in format, buffer);
                _stream = device.OpenStream(in deviceParams, null);
                _stream.Start();
                _start.Enabled = false;
                _stop.Enabled = true;
            }
        }

        // this gets called on the audio thread by the audio library,
        // but could just as well be your C++ code managing its own threads
        // (unsafe: the project needs "Allow unsafe code" / AllowUnsafeBlocks)
        unsafe int OnBuffer(XtStream stream, in XtBuffer buffer, object user)
        {
            // process audio buffer of N frames
            for (int f = 0; f < buffer.frames; f++)
            {
                // compose current sample of all currently active notes
                float sample = 0.0f;
                for (int n = 0; n < NoteFrequencies.Length; n++)
                {
                    if (_notesActive[n])
                    {
                        // advance the phase and wrap it back into [0, 1) without losing the remainder
                        _phases[n] += NoteFrequencies[n] / Rate;
                        if (_phases[n] >= 1.0f) _phases[n] -= 1.0f;
                        float noteSample = (float)Math.Sin(2.0 * _phases[n] * Math.PI);
                        // scale by the note count so even a full chord cannot clip
                        sample += noteSample / NoteFrequencies.Length;
                    }
                }

                // write current sample to every channel of the interleaved output buffer
                for (int c = 0; c < Channels; c++)
                    ((float*)buffer.output)[f * Channels + c] = sample;
            }
            return 0;
        }
    }
}
```
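
On the C++ side, the body of `OnBuffer` ports almost line for line, and you would call it between `GetBuffer` and `ReleaseBuffer`. A sketch, assuming 32-bit float interleaved stereo output as in shared mode and the same five note frequencies; `Synth` and `Render` are illustrative names, and `std::atomic<bool>` replaces the C# `bool[]` so the UI thread's writes are safe to read from the audio thread. Each phase wraps by subtracting 1, which keeps the oscillator continuous across the wrap.

```cpp
#include <array>
#include <atomic>
#include <cassert>
#include <cmath>
#include <cstddef>

// Illustrative C++ port of the C# OnBuffer callback.
struct Synth {
    static constexpr int Rate = 48000;
    static constexpr int Channels = 2;
    static constexpr std::array<float, 5> Freqs = {523.25f, 587.33f, 659.25f, 698.46f, 783.99f};

    std::array<std::atomic<bool>, 5> active{};  // written by the UI thread
    std::array<float, 5> phases{};              // owned by the audio thread, in [0, 1)

    // Fill `frames` interleaved frames of `out`, mixing every active note.
    void Render(float* out, std::size_t frames) {
        assert(out != nullptr);
        const double twoPi = 2.0 * std::acos(-1.0);
        for (std::size_t f = 0; f < frames; ++f) {
            float sample = 0.0f;
            for (std::size_t n = 0; n < Freqs.size(); ++n) {
                if (!active[n].load(std::memory_order_relaxed)) continue;
                // Advance the phase, wrap into [0, 1), then sample the sine.
                phases[n] += Freqs[n] / Rate;
                if (phases[n] >= 1.0f) phases[n] -= 1.0f;
                // Divide by the note count so a full chord stays within [-1, 1].
                sample += static_cast<float>(std::sin(twoPi * phases[n])) / Freqs.size();
            }
            // Same sample on every channel of the interleaved frame.
            for (int c = 0; c < Channels; ++c) out[f * Channels + c] = sample;
        }
    }
};
```

For example, with only `active[0]` set, the first frame of both channels holds sin(2π · 523.25 / 48000) / 5, and with no note held `Render` writes silence.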