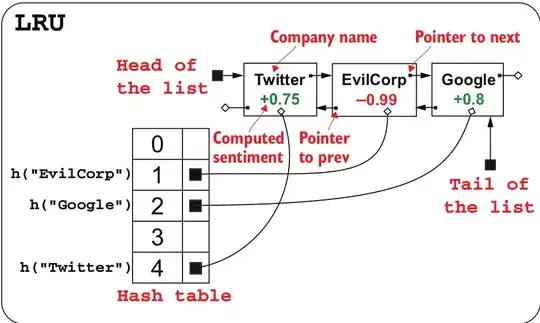

This answer was the key to solving this problem.

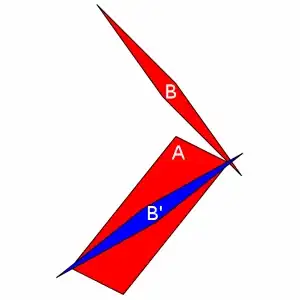

Coords:

[[(38, 11), (251, 364)], [(254, 62), (592, 266)], [(254, 312), (592, 518)], [(46, 456), (247, 797)], [(346, 557), (526, 797)]]

import numpy as np

import matplotlib.pyplot as plt

import cv2

import itertools

#====================================================

img = cv2.imread('input.jpg', 0)

blur = cv2.blur(img,(3,3))

blur[blur>225] = 0

sobelx = cv2.Sobel(blur,cv2.CV_64F,1,0,ksize=5)

sobely = cv2.Sobel(blur,cv2.CV_64F,0,1,ksize=5)

sobel = np.sqrt( sobelx**2 + sobely**2)

sobel = (255 * sobel)/(sobel.max() - sobel.min())

sobel = sobel.astype(np.uint8)

sobel[sobel<20] = 0

sobel[sobel>=20] = 255

#====================================================

_,thresh = cv2.threshold(blur,127,255,cv2.THRESH_BINARY_INV)

thresh = thresh + sobel

median = cv2.medianBlur(thresh,3)

gray_scale = median.copy()

image = np.stack([img, img, img], axis=2)

img_bin = cv2.Canny(gray_scale,50,110)

dil_kernel = np.ones((3,3), np.uint8)

img_bin=cv2.dilate(img_bin,dil_kernel,iterations=1)

line_min_width = 7

kernal_h = np.ones((2,line_min_width), np.uint8)

img_bin_h = cv2.morphologyEx(img_bin, cv2.MORPH_OPEN, kernal_h)

kernal_v = np.ones((line_min_width,1), np.uint8)

img_bin_v = cv2.morphologyEx(img_bin, cv2.MORPH_OPEN, kernal_v)

img_bin_final=img_bin_h|img_bin_v

final_kernel = np.ones((3,3), np.uint8)

img_bin_final=cv2.dilate(img_bin_final,final_kernel,iterations=1)

_, _, stats, _ = cv2.connectedComponentsWithStats(~img_bin_final, connectivity=8, ltype=cv2.CV_32S)

coords = []

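# labels 0 and 1 are the background and a residue connected component, which we do not require

for x,y,w,h,area in stats[2:]:

    if area>15000:

        # store plain Python ints: they print cleanly and are accepted by cv2.rectangle
        coords.append([(int(x),int(y)),(int(x+w),int(y+h))])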

def bb_intersection(coords, boxA, boxB):

    # determine the (x, y)-coordinates of the intersection rectangle

    xA = max(boxA[0][0], boxB[0][0])

    yA = max(boxA[0][1], boxB[0][1])

    xB = min(boxA[1][0], boxB[1][0])

    yB = min(boxA[1][1], boxB[1][1])

    # compute the area of intersection rectangle

    interArea = max(0, xB - xA + 1) * max(0, yB - yA + 1)

    # compute the area of both rectangles

    boxAArea = (boxA[1][0] - boxA[0][0] + 1) * (boxA[1][1] - boxA[0][1] + 1)

    boxBArea = (boxB[1][0] - boxB[0][0] + 1) * (boxB[1][1] - boxB[0][1] + 1)

    # if one box lies entirely inside the other, drop the inner box
    # (the membership check avoids a ValueError when a box was already removed)

    if interArea == boxAArea and boxA in coords:

        coords.remove(boxA)

    elif interArea == boxBArea and boxB in coords:

        coords.remove(boxB)

# compare every pair of boxes and remove the ones contained in another box

for boxa, boxb in itertools.combinations(coords, 2):

    bb_intersection(coords, boxa, boxb)

for coord in coords:

    cv2.rectangle(image,coord[0],coord[1],(0,255,0),1)

print(coords)

plt.imshow(image)

plt.title("There are {} images".format(len(coords)))

plt.axis('off')

plt.show()
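The nested-box filtering done by `bb_intersection` can also be written as a pure function that returns a new list instead of removing items from `coords` in place. The following is a minimal sketch, not the code used to produce the result above; `contains` and `remove_nested_boxes` are hypothetical helper names, and boxes are assumed to use the same `[(x1, y1), (x2, y2)]` layout as `coords`.

```python
def contains(outer, inner):
    """Return True if `inner` lies entirely inside `outer` (edges may touch)."""
    (ox1, oy1), (ox2, oy2) = outer
    (ix1, iy1), (ix2, iy2) = inner
    return ox1 <= ix1 and oy1 <= iy1 and ix2 <= ox2 and iy2 <= oy2


def remove_nested_boxes(boxes):
    """Return a new list without the boxes that sit inside another box.

    Exact duplicates are collapsed to their first occurrence.
    """
    kept = []
    for i, box in enumerate(boxes):
        nested = any(
            contains(other, box) and (other != box or j < i)
            for j, other in enumerate(boxes)
            if j != i
        )
        if not nested:
            kept.append(box)
    return kept


# made-up example boxes (not taken from the image)
boxes = [
    [(0, 0), (100, 100)],
    [(10, 10), (50, 50)],      # inside the first box
    [(200, 200), (300, 300)],
    [(0, 0), (100, 100)],      # duplicate of the first box
]
print(remove_nested_boxes(boxes))
```

Because the input list is never modified, this version does not depend on the order in which pairs are visited.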

Edit:

This answer isn't a general solution; the parameters have to be tuned for each input. For the original image, replace the first block of the code (from `cv2.imread` through the Sobel thresholding) with the following, and the code will work:

img = cv2.imread('input.tif', 0)

img = cv2.resize(img, (605, 830))

blur = img.copy()

blur[blur>225] = 0

sobelx = cv2.Sobel(blur,cv2.CV_64F,1,0,ksize=3)

sobely = cv2.Sobel(blur,cv2.CV_64F,0,1,ksize=3)

sobel = np.sqrt( sobelx**2 + sobely**2)

sobel = (255 * sobel)/(sobel.max() - sobel.min())

sobel = sobel.astype(np.uint8)

sobel[sobel<40] = 0

sobel[sobel>=40] = 255

Coords:

[[(31, 16), (240, 364)], [(253, 56), (600, 265)], [(254, 309), (605, 520)], [(40, 456), (248, 803)], [(347, 557), (534, 803)]]
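To see how much the tuning moved the boxes, the two coordinate lists can be compared pairwise with intersection over union (IoU). This is only a rough sketch: `box_area` and `iou` are hypothetical helpers, the boxes are assumed to be listed in the same order in both runs, and both lists are assumed to refer to the same pixel grid (the second run resizes to 605x830, while the size of `input.jpg` is not stated).

```python
def box_area(box):
    """Area of a [(x1, y1), (x2, y2)] box, using the same +1 convention as bb_intersection."""
    (x1, y1), (x2, y2) = box
    return (x2 - x1 + 1) * (y2 - y1 + 1)


def iou(box_a, box_b):
    """Intersection over union of two boxes (0.0 when they do not overlap)."""
    xa = max(box_a[0][0], box_b[0][0])
    ya = max(box_a[0][1], box_b[0][1])
    xb = min(box_a[1][0], box_b[1][0])
    yb = min(box_a[1][1], box_b[1][1])
    inter = max(0, xb - xa + 1) * max(0, yb - ya + 1)
    union = box_area(box_a) + box_area(box_b) - inter
    return inter / union


# coordinates printed by the first run (input.jpg)
first_run = [[(38, 11), (251, 364)], [(254, 62), (592, 266)], [(254, 312), (592, 518)],
             [(46, 456), (247, 797)], [(346, 557), (526, 797)]]

# coordinates printed by the second run (input.tif, resized)
second_run = [[(31, 16), (240, 364)], [(253, 56), (600, 265)], [(254, 309), (605, 520)],
              [(40, 456), (248, 803)], [(347, 557), (534, 803)]]

for a, b in zip(first_run, second_run):
    print(a, b, round(iou(a, b), 3))
```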