According to this D435i infosheet

RGB FOV(1920x1080): 64° × 41° × 77° (±3°) // HxVxD what to heck is D???

depth FOV(1280x720): 86° × 57° (±3°)

min depth(1280x720): 26cm

max depth: 3m

However I see no info about the depth frame if it is post processed with linear output or with perspective. You can infer it from images if the objects get smaller with distance it is still in perspective I do not know but will assume its the case.

your image is 848x480 so I will assume its just linearly scaled by 1/1.5 so the FOV is the same.

linearize depth into regular grid sampled points/mesh

so each pixel must be converted into 3D position relative to the camera. So simply cast ray from the camera focal point going through pixel and stop at its distance. The resulting position is the 3D position we want. Doing this for each point will result in 2D grid of connected points (surface mesh). However we do not know if the depth is euclid or perpendicular distance (cos corrected) so we need to try both combinations and chose the one which has better result (building are boxes and not pyramids...)

From the info I got at hand This is the best I could get from your input image:

so I took all the pixels from your image, converted x,y position into spherical longitude,latitude angles (linear interpolation from FOV of depth camera and image resolution) then converted pixel intensity into depth and converted that to Cartesian.

// depth -> PCL

for (y=0;y<ys;y++)

{

pd=(int*)depth->ScanLine[y]; // pointer to y-th scan line in depth image

b=depthFOVy*(((double(y)-depthy0)/double(ys-1))-0.5); // latitude

for (x=x3=0;x<xs;x++,x3+=3)

{

a=depthFOVx*(((double(x)-depthx0)/double(xs-1))-0.5); // longitude

r=pd[x]&255; // RGB from drepth (just single channel

r/=255.0; // linear scale

if (int(depthcfg&_DepthImage_invert_z)) r=1.0-r;

r=depthz0+(r*(depthz1-depthz0)); // range <z0,z1>

// spherical -> cartesian

p[0]=cos(a)*cos(b); // x

p[1]=sin(a)*cos(b); // y

p[2]= sin(b); // z

if (int(depthcfg&_DepthImage_cos_correct_z)) r/=p[0];

pnt[y][x3+0]=r*p[1];

pnt[y][x3+1]=r*p[2];

pnt[y][x3+2]=r*p[0];

}

}

where pnt[][] is 2D array of 3D points stored as consequent x,y,zand:

pcl.depthz0=0.8;

pcl.depthz1=1.00;

pcl.depthcfg=_DepthImage_invert_z|_DepthImage_cos_correct_z;

The image resolution is xs,ys.

for more info see:

compute normal for each point (surface) from its neighbors

simple cross product will do, however its important to use correct order of operands so the resulting normals points the same direction and not opposing ... otherwise we would need to deal with it later by using abs of dot result.

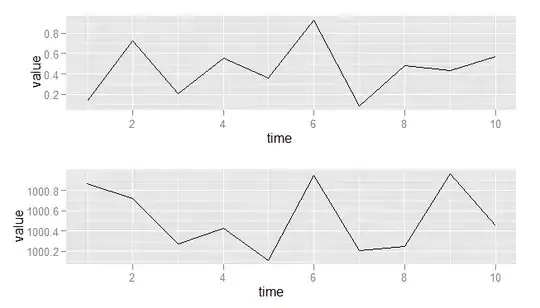

Do a histogram on the normals (aligned to limited set of directions)

Histogram is relation between some value of variable and its probability or occurrence. For example image histogram tells us how many pixels are colored with each color (range/bins). For our purposes flat areas (planes) should have the same normal (in your depth image is too much noise but I assume your gradient function that converts the depth into color is weirdly scaled and non linear emphasizing the noise as standard depth cameras does not have such big noises) so normals of a flat zones should be a very occurrent hence it should be represented by peaks in histogram. Firs we should convert 3D normal into single integer value (for simplifying the code) and also limit possible directions to only some fixed ones (to rule out noise and compress the output data size).

The normalized normal is 3D vector with coordinates in range <-1,+1> so we can convert that range to unsigned integer number of some bitwidth and stack up 3 of those into single integer. For example 5bit per coordinate:

normal = (nx,ny,nz)

nx = 31.0*(nx+1.0)*0.5; // <-1,+1> -> <0,31>

ny = 31.0*(nx+1.0)*0.5; // <-1,+1> -> <0,31>

nz = 31.0*(nx+1.0)*0.5; // <-1,+1> -> <0,31>

int ni = int(nx) + int(ny)<<5 + int(nz)<<10

so any direction will be converted to integer <0,2^15) so <0,32767> which is nicely manageable. So simply create some array int his[32768] and reset all its values to zero. Then for each pixel convert its normal to such integer ni and increment its counter his[ni]++; beware if you got big resolution of image you need to take in mind possible overflow of counters. So for 32 bit int the reslution must be less than 2^31 pixels... otherwise you use bigger bitdepth or add condition to avoid incrementing whet max value is reached already.

remove points with very low occurrence of their normal

this will allow us to ignore parts of image which are small surfaces and or are not flat enough. We can set those points to some specific normal like (0,0,0) or have a separate array with flags for this.

find big enough continuous area with the same normals

You can brute force search a box (size of your drone + some margin) in the data (so 2 nested for loops for position and another 2 for the test) and if all points have the same normal you found possible landing zone.

normal n1,n2 s are the "same" if:

dot(n1,n2)/(|n1|.|n2|) >= cos(margin)

where margin is allowed angular difference between normal in deg or rad depends on your cos implementation.

[Edit1] Your data is too noisy for normal approach

here image (after smoothing of data to reduce noise) showing the occurrence of individual normal directions (with this gradient) so blue is lowest occurrence, red is highest, green is in between...

As you can see the most occurrence has pixels out of range or invalid values (hard to say) and the top of boxes that where measured correctly. However most of the surface of your view has weird very big noise (in both amplitude and radius ... those blobs) which I never saw on depth images before. My bet is your conversion from raw depth to "color" gradient is non linear and emphasize noise (that is most likely also the reason why I had so hard time to reconstruct your scene back to 3D) + JPEG lossy compression artifacts.

So unless you provide clear equation for conversion between color of pixel and depth this is a no go. So instead you need different slower approach. I would do a window "brute force" search instead:

define a window

So your drone has some size so we need some rectangle of defined size (slightly bigger than your drone area projected on ground, how much depends on the landing trajectory and flight control stability & precision ...)

So we need some rectangle of size wx * wy in meters (or what ever units you reconstruct the scene with).

test "all" possible positions of window

so simply start at top left corner test if window of our pointcloud is fla enough and if yes you found your solution, if not test position slightly moved to the right ... until end of pointcloud reached. Then start again from left but moved slightly to the bottom. So this will be just 2 nested for loops incrementing by some value smaller than window size (does not need to be just pixel)

for each window location detect if the area is flat

so obtain avg position of each corner of window. That will define a plane so simply test all points in the window, by computing their perpendicular distance to the plane. if bigger than some threshold the area is not flat enough. If all points where OK then you found your landing zone.

Here a preview:

Of result of this approach. The red means invalid color (black on your original image) and green is the first found landing zone. I modified the code for the normal approach to do this so there is quite some code remnant that is not necessary anymore like color, normals etc ... You only need the pointcloud point positions... Here the relevant C++/OpenGL code for this:

First simple depth image to pointcloud class:

const int _DepthImage_invert_z =0x00000001; // brighter colros are closer

const int _DepthImage_cos_correct_z =0x00000002; // depth is perpendicular distance instead of euclid

const int _DepthImage_smooth =0x00000004; // average position to reduce noise

class DepthPoint

{

public:

double pos[3]; // position (x,y,z)

double nor[3]; // normal vector (x,y,z)

double col[3]; // color (r,g,b)

DepthPoint(){};

DepthPoint(DepthPoint& a) { *this=a; }

~DepthPoint(){};

DepthPoint* operator = (const DepthPoint *a) { *this=*a; return this; }

//DepthPoint* operator = (const DepthPoint &a) { ...copy... return this; }

};

class DepthImage

{

public:

// resoluton

int xs,ys; // resolution

// point cloud ys x xs

DepthPoint **pnt; // pnt[y][x] point for each pixel(x,y) of image

// depth camera properties

double depthFOVx,depthFOVy, // [rad] FOV angles

depthx0,depthy0, // [pixels] FOV offset

depthz0,depthz1; // [m] intensity <0,255> -> distance <z0,z1>

int depthcfg; // configuration flags

double error; // invalid depth

// color camera properties

double colorFOVx,colorFOVy, // [rad] FOV angles

colorx0,colory0; // [pixels] FOV offset

int colorcfg; // configuration flags

DepthImage()

{

pnt=NULL; _free();

depthFOVx=86.0*deg; colorFOVx=86.0*deg;

depthFOVy=57.0*deg; colorFOVy=57.0*deg;

depthx0=0.0; colorx0=0.0;

depthy0=0.0; colory0=0.0;

depthz0=0.0;

depthz1=6.0;

depthcfg=0; colorcfg=0;

}

DepthImage(DepthImage& a) { *this=a; }

~DepthImage() { _free(); }

DepthImage* operator = (const DepthImage *a) { *this=*a; return this; }

//DepthImage* operator = (const DepthImage &a) { ...copy... return this; }

void _free()

{

if (pnt)

{

if (pnt[0]) delete[] pnt[0];

delete[] pnt;

}

pnt=NULL; xs=ys=0;

}

void load(AnsiString _depth,AnsiString _color)

{

_free();

Graphics::TBitmap *depth,*color;

int i,x,y,*pd,*pc;

double p[3],q[3],a,b,r;

// load depth image

depth=new Graphics::TBitmap;

picture_load(depth,_depth,NULL);

depth->HandleType=bmDIB;

depth->PixelFormat=pf32bit;

xs=depth->Width;

ys=depth->Height;

// allocate PCL

pnt =new DepthPoint*[ ys];

pnt[0]=new DepthPoint [xs*ys];

for (y=0;y<ys;y++) pnt[y]=pnt[0]+(y*xs);

// depth -> PCL

error=depthz1*0.99;

for (y=0;y<ys;y++)

{

pd=(int*)depth->ScanLine[y]; // pointer to y-th scan line in depth image

b=depthFOVy*(((double(y)-depthy0)/double(ys-1))-0.5); // latitude

for (x=0;x<xs;x++)

{

a=depthFOVx*(((double(x)-depthx0)/double(xs-1))-0.5); // longitude

r=pd[x]&255; // RGB from drepth (just single channel

r/=255.0; // linear scale

if (int(depthcfg&_DepthImage_invert_z)) r=1.0-r;

r=depthz0+(r*(depthz1-depthz0)); // range <z0,z1>

// spherical -> cartesian

p[0]=cos(a)*cos(b); // x

p[1]=sin(a)*cos(b); // y

p[2]= sin(b); // z

if (int(depthcfg&_DepthImage_cos_correct_z)) r/=p[0];

// scale and reorder coordinates to match my view

pnt[y][x].pos[0]=r*p[1];

pnt[y][x].pos[1]=r*p[2];

pnt[y][x].pos[2]=r*p[0];

}

}

// smooth positions a bit to reduce noise (FIR averaging smooth/blur filter)

if (int(depthcfg&_DepthImage_smooth))

for (i=0;i<10;i++)

{

for (y=1;y<ys;y++)

for (x=1;x<xs;x++)

{

vector_mul(p,pnt[y][x].pos,3.0);

vector_add(p,p,pnt[y][x-1].pos);

vector_add(p,p,pnt[y-1][x].pos);

vector_mul(pnt[y][x].pos,p,0.2);

}

for (y=ys-1;y>0;y--)

for (x=xs-1;x>0;x--)

{

vector_mul(p,pnt[y-1][x-1].pos,3.0);

vector_add(p,p,pnt[y-1][x].pos);

vector_add(p,p,pnt[y][x-1].pos);

vector_mul(pnt[y][x].pos,p,0.2);

}

}

// compute normals

for (y=1;y<ys;y++)

for (x=1;x<xs;x++)

{

vector_sub(p,pnt[y][x].pos,pnt[y][x-1].pos);// p = pnt[y][x] - pnt[y][x-1]

vector_sub(q,pnt[y][x].pos,pnt[y-1][x].pos);// q = pnt[y][x] - pnt[y-1][x]

vector_mul(p,q,p); // p = cross(q,p)

vector_one(pnt[y][x].nor,p); // nor[y][x] = p/|p|

}

// copy missing edge normals

for (y=1;y<ys;y++) vector_copy(pnt[y][0].nor,pnt[y][1].nor);

for (x=0;x<xs;x++) vector_copy(pnt[0][x].nor,pnt[1][x].nor);

// set color

for (y=0;y<ys;y++)

for (x=0;x<xs;x++)

{

DepthPoint *p=&pnt[y][x];

vector_ld(p->col,0.5,0.5,0.5);

if (p->pos[2]>=error) vector_ld(p->col,1.0,0.0,0.0); // invalid depths as red

}

delete depth;

}

bool find_flat_rect(double* &p0,double* &p1,double* &p2,double* &p3,double wx,double wy,double dmax)

{

int i,x,y,x0,y0,x1,y1,dx=1,dy=1;

double d,p[3],n[3];

// whole image

for (y0=0;y0<ys;y0+=dx)

for (x0=0;x0<xs;x0+=dy)

{

dx=1; dy=1;

// first coorner

p0=pnt[y0][x0].pos; if (p0[2]>=error) continue;

// find next coorner wx distant to p0

for (x1=x0+1;x1<xs;x1++)

{

p1=pnt[y0][x1].pos; if (p1[2]>=error) continue;

vector_sub(p,p1,p0); // p = p1-p0

d=vector_len(p); // d = |p|

if (d>=wx) break;

} if (x1>=xs){ x0=xs; continue; }

// find next coorner wy distant to p0

for (y1=y0+1;y1<ys;y1++)

{

p3=pnt[y1][x0].pos; if (p3[2]>=error) continue;

vector_sub(p,p3,p0); // p = p3-p0

d=vector_len(p); // d = |p|

if (d>=wy) break;

} if (y1>=ys){ p0=p1=p2=p3=NULL; return false; }

// diagonal coorner

p2=pnt[y1][x1].pos;

// window position increments are 1/4 of window size

dx=1+((x1-x0)>>2);

dy=1+((y1-y0)>>2);

// plane normal

vector_sub(p,p1,p0); // p = p1-p0

vector_sub(n,p3,p0); // n = p3-p0

vector_mul(n,n,p); // n = cross(n,p)

vector_mul(n,n); // n = n/|n|

// test distances to plane

i=1;

for (y=y0;y<=y1;y++)

for (x=x0;x<=x1;x++)

{

if (pnt[y][x].pos[2]>=error) continue;

vector_sub(p,pnt[y][x].pos,p0); // p = pnt[y][x] - p0

d=fabs(vector_mul(p,n)); // d = |dot(p,n)| ... perpendicular distance

if (d>dmax){ i=0; x=x1+1; y=y1+1; break; }

}

if (i) return true;

}

p0=p1=p2=p3=NULL;

return false;

}

void gldraw()

{

int x,y;

DepthPoint *p;

for (y=1;y<ys;y++)

{

glBegin(GL_QUAD_STRIP);

for (x=0;x<xs;x++)

{

p=&pnt[y-1][x];

glColor3dv(p->col);

glNormal3dv(p->nor);

glVertex3dv(p->pos);

p=&pnt[y][x];

glColor3dv(p->col);

glNormal3dv(p->nor);

glVertex3dv(p->pos);

}

glEnd();

}

glColor3f(1.0,1.0,1.0);

}

};

//---------------------------------------------------------------------------

And usage:

DepthImage pcl; // pointcloud

double *q0,*q1,*q2,*q3; // pointers to landing zone rectangle points

pcl.depthz0=0.8; // some constants for reconstruction

pcl.depthz1=1.00;

pcl.depthcfg=

_DepthImage_invert_z

|_DepthImage_cos_correct_z

;

pcl.load("depth.jpg",""); // this creates the pointcloud from image

pcl.find_flat_rect(q0,q1,q2,q3,0.40,0.25,0.005); // this finds a rectangle of 0.4x0.25 m size and 0.005m deviation from flat surface (return true if found)

// the q0,q1,q2,q3 are pointers to 3D points of the found rectangle or NULL if no such has been found

The image loader is from mine OpenGL engine (partially based on VCL and PNGDelphi) so you need to use whatever you got in your environment.

The only important stuff (apprat fromthe 3D reconstruction) is the function find_flat_rect which works exactly as described in this approach.

The only thing left to add is a test that landing zone is not too big slope (by dot product between plane normal n and down vector (from accelerometer or gyros). In case your drone is always in horizontal orientation you can use z axis instead.

Here the function that converts 3D point into 2D depth image cooridnates:

void DepthImage::project(double &x,double &y,double* p) // p[3] -> (x,y) ... 3D to 2D perspective projection

{

x=atan2(p[0],p[2])/depthFOVx;

y=atan2(p[1],p[2])/depthFOVy;

if (int(depthcfg&_DepthImage_cos_correct_z))

y*=(double(ys)*depthFOVx)/(depthFOVy*double(xs));

x=(x+0.5)*xs;

y=(y+0.5)*ys;

}

However due to not perfect 3D reconstruction (due unknown depth encoding used) is this not very accurate so the result is slightly distorted. Beware results might be slightly out of image bounds so you need to to limit it to <0,xs) and <0,ys) if you want to use it for pixel access ...