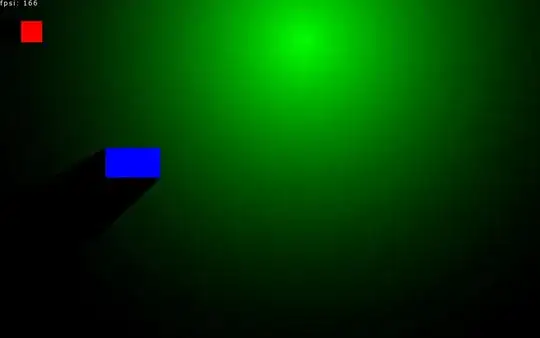

I am trying to plot accuracy and loss curves with Matplotlib, but no curves are drawn: only the empty axes appear, and the x-axis starts at a negative value instead of 0. Why?

Code:

    import tensorflow as tf
    import matplotlib.pyplot as plt

    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

    max_accuracy = 0.70

    # Train one epoch at a time and keep the best model seen so far
    for i in range(10):
        print("Epoch no", i + 1)
        history = model.fit(train_data, train_labels, epochs=1, batch_size=32,
                            verbose=1, validation_data=(test_data, test_labels))
        if history.history['val_accuracy'][0] > max_accuracy:
            print("New Best model found above")
            max_accuracy = history.history['val_accuracy'][0]
            model.save('CNN-logo.h5')

    # Reload the best saved model and evaluate it
    model = tf.keras.models.load_model('CNN-logo.h5')

    [train_loss, train_accuracy] = model.evaluate(train_data, train_labels)
    print("Evaluation result on Train Data : Loss = {}, accuracy = {}".format(train_loss, train_accuracy))

    [test_loss, test_acc] = model.evaluate(test_data, test_labels)
    print("Evaluation result on Test Data : Loss = {}, accuracy = {}".format(test_loss, test_acc))

    # Plot the loss curves
    plt.figure(figsize=[8, 6])
    plt.plot(history.history['loss'], 'r', linewidth=3.0)
    plt.plot(history.history['val_loss'], 'b', linewidth=3.0)
    plt.legend(['Training loss', 'Validation Loss'], fontsize=18)
    plt.xlabel('Epochs', fontsize=16)
    plt.ylabel('Loss', fontsize=16)
    plt.title('Loss Curves', fontsize=16)
    plt.show()

    # Plot the accuracy curves
    plt.figure(figsize=[8, 6])
    plt.plot(history.history['accuracy'], 'r', linewidth=3.0)
    plt.plot(history.history['val_accuracy'], 'b', linewidth=3.0)
    plt.legend(['Training Accuracy', 'Validation Accuracy'], fontsize=18)
    plt.xlabel('Epochs', fontsize=16)
    plt.ylabel('Accuracy', fontsize=16)
    plt.title('Accuracy Curves', fontsize=16)
    plt.show()
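
For reference, a possible explanation of the symptom: because `model.fit` is called with `epochs=1` inside the loop, `history` is replaced on every iteration, so `history.history['loss']` holds a single value by the time the plots are drawn. A one-point line has no visible segment, and Matplotlib autoscales the x-axis to a small range around 0, which would account for the negative start. The sketch below shows one way to collect the metrics across iterations instead. The names `accumulate_history`, `plot_curves`, `all_history`, and the numbers in `fake_epochs` are made up for illustration; in the real loop each dictionary would be `history.history`.

```python
from collections import defaultdict


def accumulate_history(all_history, epoch_history):
    """Append the metrics from one single-epoch fit() call to the running record."""
    for name, values in epoch_history.items():
        all_history[name].extend(values)
    return all_history


def plot_curves(all_history, train_key, val_key, ylabel):
    """Plot one training/validation metric pair against the epoch number."""
    import matplotlib.pyplot as plt  # imported here so the accumulation part runs without it

    epochs = range(1, len(all_history[train_key]) + 1)  # x-axis starts at epoch 1
    plt.figure(figsize=[8, 6])
    plt.plot(epochs, all_history[train_key], 'r', linewidth=3.0)
    plt.plot(epochs, all_history[val_key], 'b', linewidth=3.0)
    plt.legend(['Training ' + ylabel, 'Validation ' + ylabel], fontsize=18)
    plt.xlabel('Epochs', fontsize=16)
    plt.ylabel(ylabel, fontsize=16)
    plt.title(ylabel + ' Curves', fontsize=16)
    plt.show()


# Illustrative stand-ins for history.history after each one-epoch fit() call.
fake_epochs = [
    {'loss': [1.2], 'val_loss': [1.3], 'accuracy': [0.55], 'val_accuracy': [0.50]},
    {'loss': [0.9], 'val_loss': [1.1], 'accuracy': [0.68], 'val_accuracy': [0.61]},
    {'loss': [0.7], 'val_loss': [1.0], 'accuracy': [0.75], 'val_accuracy': [0.66]},
]

all_history = defaultdict(list)
for epoch_history in fake_epochs:
    accumulate_history(all_history, epoch_history)
```

In the training loop this would amount to calling `accumulate_history(all_history, history.history)` right after each `model.fit(...)`, then `plot_curves(all_history, 'loss', 'val_loss', 'Loss')` and `plot_curves(all_history, 'accuracy', 'val_accuracy', 'Accuracy')` once the loop has finished.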