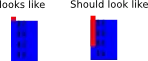

When I run Pytesseract on this image, it returns `7A51k`, but the actual text is `7,451k`.

How can I fix this problem with code instead of providing a clearer source image?

My code:

    import pytesseract
    from PIL import Image

    # Point pytesseract at the local Tesseract executable
    pytesseract.pytesseract.tesseract_cmd = 'D:\\App\\Tesseract-OCR\\tesseract'

    img = Image.open("captured\\amount.png")

    # --psm 10 tells Tesseract to treat the image as a single character
    string = pytesseract.image_to_string(image=img, config="--psm 10")
    print(string)
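
One direction that may be worth trying, offered as a minimal sketch rather than a confirmed fix: `--psm 10` treats the image as a single character, while `--psm 7` treats it as a single line of text, which seems closer to what this image contains. Tesseract's `tessedit_char_whitelist` variable can also restrict the output to the characters an amount could contain, so `A` is no longer a candidate. The helper names `build_config` and `read_amount`, the whitelist `0123456789,.k`, and the 3x upscale factor are all assumptions made for illustration. The whitelist is reportedly ignored by the LSTM engine in Tesseract 4.0, so results may depend on the installed version.

```python
def build_config(whitelist="0123456789,.k", psm=7):
    """Build a Tesseract config string that limits the recognised characters.

    The default whitelist is an assumption: digits, comma, dot and 'k'.
    """
    return f"--psm {psm} -c tessedit_char_whitelist={whitelist}"


def read_amount(path, scale=3):
    """Grayscale and upscale the image, then OCR it as a single text line.

    The scale factor is a guess; small screen captures often OCR better
    when enlarged, but the best value has to be found by experiment.
    """
    # Imported here so build_config can be used without Pillow or
    # Tesseract installed.
    from PIL import Image, ImageOps
    import pytesseract

    # If Tesseract is not on PATH, set pytesseract.pytesseract.tesseract_cmd
    # before calling this function, as in the original script.
    img = Image.open(path)
    gray = ImageOps.grayscale(img)
    big = gray.resize((gray.width * scale, gray.height * scale), Image.LANCZOS)
    return pytesseract.image_to_string(big, config=build_config()).strip()
```

Calling `read_amount("captured\\amount.png")` would then replace the `image_to_string` call above. Whether this recovers `7,451k` for this particular image is untested.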