Here is my code. I've scraped the data from a website, but it just returns one long list.

How do I get the data to fall under the correct headings? I'm getting the following error message:

`ValueError: 8 columns passed, passed data had 2648 columns`
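This error means pandas was given 8 column names, but each row it received is 2648 values wide. Suppose the long list holds one cell's text per entry, read left to right and top to bottom, with no cells dropped. In that case it can be regrouped into rows of 8 (2648 / 8 = 331 rows). Below is a minimal sketch of that regrouping in plain Python. `chunk_rows` is a hypothetical helper, and the `r0c0`-style values are placeholders, not scraped data. The helper refuses to regroup when the count is not a multiple of the row width. Filtering out empty cells (as `if tr.text.strip()` does) would shift every later value under the wrong heading.

```python
from typing import List, Sequence


def chunk_rows(cells: Sequence[str], width: int) -> List[List[str]]:
    """Hypothetical helper: regroup a flat, row-major list of cells into rows."""
    if width <= 0 or len(cells) % width != 0:
        # A remainder means cells were dropped (or extra ones added), so the
        # regrouped values would no longer line up with the headings.
        raise ValueError(f"{len(cells)} cells cannot be split into rows of {width}")
    return [list(cells[i:i + width]) for i in range(0, len(cells), width)]


headers = ["Ticker", "Company", "Industry", "Current Price",
           "Intrinsic Value", "IV to CP ratio", "Dividend", "Dividend Yield"]

# Placeholder data standing in for the scraped list: 3 rows x 8 cells.
flat = [f"r{r}c{c}" for r in range(3) for c in range(len(headers))]

rows = chunk_rows(flat, len(headers))
print(len(rows))               # 3
print(rows[1][0], rows[1][7])  # r1c0 r1c7
```

The resulting `rows` can then be passed to `pd.DataFrame(rows, columns=headers)`.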

Any help is greatly appreciated.

```python
import time

from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from webdriver_manager.chrome import ChromeDriverManager
from bs4 import BeautifulSoup
import pandas as pd

url = "https://www.loudnumber.com/screeners/cashflow"

# Selenium 4 takes the driver path through a Service object
driver = webdriver.Chrome(service=Service(ChromeDriverManager().install()))
driver.get(url)
time.sleep(5)  # give the page's JavaScript time to render the table

html = driver.page_source
soup = BeautifulSoup(html, 'html.parser')
table = soup.find('table')

l = []
for tr in table:
    td = table.find_all('td')  # cols
    rows = [table.text.strip() for tr in td if tr.text.strip()]
    if rows:
        l.append(rows)

driver.quit()
```

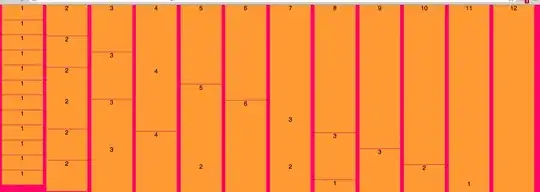

```python
df = pd.DataFrame(l, columns=["Ticker", "Company", "Industry", "Current Price",
                              "Intrinsic Value", "IV to CP ratio",
                              "Dividend", "Dividend Yield"])
print(df)
```
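The scraping loop most likely produces the long list for two reasons. It calls `table.find_all('td')` on the whole table on every pass, and the list comprehension collects `table.text` rather than each cell's own text. So every appended list has one entry per non-empty `td` in the entire table. Below is a minimal sketch of a row-by-row alternative. It assumes that the screener's data rows are `<tr>` elements made of `<td>` cells, that the header row uses `<th>`, and that the cell order matches the 8 headings. The site's actual markup has not been checked here. `table_rows` and the sample HTML are hypothetical.

```python
from typing import List

from bs4 import BeautifulSoup


def table_rows(html: str) -> List[List[str]]:
    """Hypothetical helper: one list of cell texts per <tr> that has <td> cells."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table")
    if table is None:
        raise ValueError("no <table> found in the HTML")
    rows = []
    for tr in table.find_all("tr"):
        # Search inside this row only, and keep empty cells as "" so every
        # value stays under its own heading.
        cells = [td.get_text(strip=True) for td in tr.find_all("td")]
        if cells:  # a header row made of <th> cells yields no <td> cells
            rows.append(cells)
    return rows


# Hypothetical sample markup, not taken from the site.
sample = """
<table>
  <thead><tr><th>Ticker</th><th>Company</th></tr></thead>
  <tbody>
    <tr><td>r0c0</td><td>r0c1</td></tr>
    <tr><td>r1c0</td><td></td></tr>
  </tbody>
</table>
"""

print(table_rows(sample))  # [['r0c0', 'r0c1'], ['r1c0', '']]
```

In the script above, `rows = table_rows(driver.page_source)` would take the place of the `for tr in table` loop. `pd.DataFrame(rows, columns=[...])` would then receive one list per table row instead of one list holding every cell.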