I get this cropped image from my PDF:

After preprocessing, this is how I feed it to Tesseract OCR:

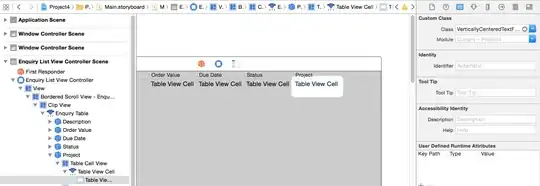

    text = pytesseract.image_to_string(img, lang='eng')

But the OCR'ed text is empty.

Edit:

I load the full page image and crop it to this region. Once it is cropped, I apply a sharpening filter to it and then a median blur to remove salt-and-pepper noise:

    import cv2
    import easyocr
    import numpy as np
    import pytesseract
    from pdf2image import convert_from_path

    pages = convert_from_path("../data/2.pdf", fmt='JPEG',
                              poppler_path=r"D:\poppler-0.68.0\bin")
    reader = easyocr.Reader(['en'])  # need to run only once to load model into memory

    for page in pages:
        page.save('image.jpg', 'JPEG')
        image = cv2.imread('image.jpg')
        img = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
        # cord holds the bounding box as (x_min, x_max, y_min, y_max)
        img = img[cord[2]:cord[3], cord[0]:cord[1]]
        # 3x3 sharpening kernel
        kernel = np.array([[-1,-1,-1], [-1,9,-1], [-1,-1,-1]])
        img = cv2.filter2D(img, -1, kernel)
        # median blur to remove salt-and-pepper noise
        img = cv2.medianBlur(img, 3)
        text = pytesseract.image_to_string(img)
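For reference, here is a small NumPy-only sketch of what I understand the sharpening kernel to do. `filter3x3` is a hypothetical stand-in for `cv2.filter2D`, not the OpenCV implementation, and it assumes edge-replicated borders, which differs from OpenCV's default border mode, so border pixels may not match exactly.

```python
import numpy as np

# Same kernel as in my code: the weights sum to 1.
kernel = np.array([[-1, -1, -1], [-1, 9, -1], [-1, -1, -1]])


def filter3x3(img, kernel):
    """Correlate a 2-D uint8 image with a 3x3 kernel, saturating to 0..255.

    Hypothetical helper for illustration only; borders are edge-replicated.
    """
    h, w = img.shape
    padded = np.pad(img.astype(np.int64), 1, mode="edge")
    out = np.zeros((h, w), dtype=np.int64)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return np.clip(out, 0, 255).astype(np.uint8)


# A flat patch and a patch with a soft vertical edge (100 -> 140).
flat = np.full((5, 5), 120, dtype=np.uint8)
step = np.full((5, 6), 100, dtype=np.uint8)
step[:, 3:] = 140

flat_row = filter3x3(flat, kernel)[2].tolist()
step_row = filter3x3(step, kernel)[2].tolist()

print(int(kernel.sum()))  # 1
print(flat_row)           # [120, 120, 120, 120, 120]
print(step_row)           # [100, 100, 0, 255, 140, 140]
```

Flat areas are left unchanged because the weights sum to 1, while a mild 100 to 140 edge is pushed all the way to 0 and 255. So on thin text strokes this filter may overshoot quite a lot before the median blur runs.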

This image is part of a PDF. The PDF is converted to JPEG, loaded again, and then this section is cropped out using bounding-box (BB) coordinates.
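To make the cropping step explicit, here is a minimal sketch of it as a helper. It assumes `cord` is ordered `(x_min, x_max, y_min, y_max)`, which is what the slicing `img[cord[2]:cord[3], cord[0]:cord[1]]` implies. The name `crop_to_box` is hypothetical, and the synthetic array only stands in for a real page.

```python
import numpy as np


def crop_to_box(img, cord):
    """Crop an image array to a bounding box.

    Assumption: cord = (x_min, x_max, y_min, y_max) in pixels.
    """
    x_min, x_max, y_min, y_max = cord
    height, width = img.shape[:2]
    # Clamp the box to the image bounds.
    x_min, x_max = max(0, x_min), min(width, x_max)
    y_min, y_max = max(0, y_min), min(height, y_max)
    # Fail loudly instead of passing an empty image on to OCR.
    if x_min >= x_max or y_min >= y_max:
        raise ValueError(f"empty crop for box {cord} on a {width}x{height} image")
    # NumPy indexes rows (y) first, then columns (x).
    return img[y_min:y_max, x_min:x_max]


# Synthetic "page": 20 rows by 30 columns.
page = np.arange(20 * 30, dtype=np.uint16).reshape(20, 30)
crop = crop_to_box(page, (5, 15, 2, 8))
print(crop.shape)  # (6, 10)
```

The check for an empty crop is there because a box given in the wrong order or in a different resolution than the rendered page would produce an empty or wrong region without any error.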

Edit:

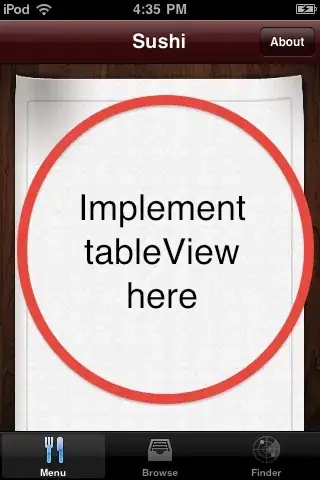

Using the example below this is the output after preprocessing:

But the OCR'ed text it prints is still wrong:

    AQ@O FCI