I'm creating an external table from a CSV file stored in Azure Data Lake Storage and populating the table using PolyBase in SQL Server.

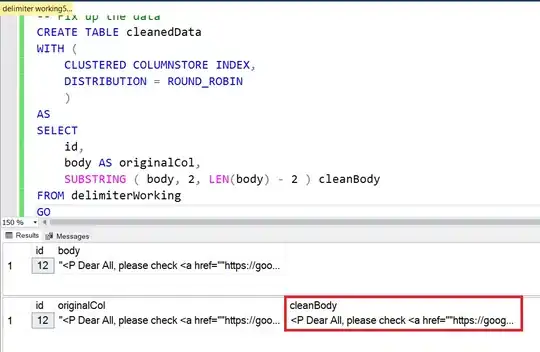

However, I ran into the error below. I suspect the cause is that one particular column contains double quotes inside the string values, while the string delimiter in PolyBase has also been specified as a double quote (STRING_DELIMITER = '"').

> HdfsBridge::recordReaderFillBuffer - Unexpected error encountered filling record reader buffer: HadoopExecutionException: Could not find a delimiter after string delimiter
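To make the suspected cause concrete, here is a minimal Python sketch that flags quote characters sitting inside a quoted field without a field separator right after them, which is the situation the error message seems to describe. This is not PolyBase's actual parsing logic. The function name `find_stray_quotes`, the comma separator, and the sample row are all hypothetical and only serve as an illustration.

```python
def find_stray_quotes(line, field_sep=",", quote='"'):
    """Return the positions of quote characters that appear inside a quoted
    field and are not followed by the field separator or the end of the line.

    Simplified illustration only: it does not handle doubled-quote escaping
    or fields that span multiple lines.
    """
    line = line.rstrip("\r\n")
    stray = []
    in_quotes = False
    for i, ch in enumerate(line):
        if ch != quote:
            continue
        if not in_quotes:
            # A quote opens a field only at the very start of that field.
            if i == 0 or line[i - 1] == field_sep:
                in_quotes = True
        else:
            nxt = line[i + 1] if i + 1 < len(line) else ""
            if nxt == "" or nxt == field_sep:
                # Proper closing quote: the separator (or end of line) follows.
                in_quotes = False
            else:
                # Quote in the middle of the value with no separator after it.
                stray.append(i)
    return stray


# Hypothetical sample rows, not taken from the real file.
print(find_stray_quotes('1,"He said "hello" to me",2'))
print(find_stray_quotes('1,"hello",2'))
```

Running something like this over the source file before loading it would show which rows contain embedded quotes, assuming the file is comma-separated and uses `"` as the string delimiter.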

Example:

I have researched this quite extensively and found that the issue has been around for years, but I have yet to see a solution.

Any help will be appreciated.