I have a huge dataset of CSV files, around 200 GB in total, and I don't know the total number of records in it. I'm using `make_csv_dataset` to create a `PrefetchDataset`.
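If an exact record count is needed, one option is a single pass over the files with Python's standard `csv` module. This is a minimal sketch that assumes every file has exactly one header row and is UTF-8 encoded. `count_csv_records` and the glob pattern are hypothetical names, not part of TensorFlow:

```python
import csv
import glob


def count_csv_records(file_pattern, has_header=True):
    """Count data rows across all CSV files matching a glob pattern.

    Hypothetical helper. csv.reader is used instead of counting raw lines so
    that a quoted field containing a newline still counts as one record.
    This is a full pass over the data, so on ~200 GB it is slow, but the
    result can be computed once and cached.
    """
    total = 0
    for path in glob.glob(file_pattern):
        with open(path, newline="", encoding="utf-8") as f:
            rows = sum(1 for _ in csv.reader(f))
        # Assumption: exactly one header row per file.
        total += rows - 1 if has_header and rows > 0 else rows
    return total
```

For example, `count_csv_records("data/*.csv")` (hypothetical path) returns the number of data rows across all matching files.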

The problem is that TensorFlow complains that I have to specify `steps_per_epoch` and `validation_steps` for an infinite dataset.

How can I specify `steps_per_epoch` and `validation_steps`?

Can I pass these parameters as a percentage of the total dataset size?

Can I avoid these parameters altogether, since I want the whole dataset to be iterated in each epoch?
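Keras `Model.fit` documents `steps_per_epoch` and `validation_steps` as integer numbers of batches, not percentages, so a fraction has to be converted using a known (or estimated) record count. A minimal sketch with a hypothetical helper `steps_for_fraction`:

```python
import math


def steps_for_fraction(total_records, batch_size, fraction=1.0):
    """Hypothetical helper: number of batches covering `fraction` of the data.

    ceil() includes the final partial batch, so fraction=1.0 covers every record.
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    return math.ceil(total_records * fraction / batch_size)
```

For example, `steps_for_fraction(1000, 32)` returns 32 and `steps_for_fraction(1000, 32, 0.8)` returns 25. This still depends on a record count, which is the part that is unknown here.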
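`make_csv_dataset` defaults to `num_epochs=None`, which repeats the data indefinitely; that is what makes the dataset infinite. Passing `num_epochs=1` should give a finite dataset that `model.fit` can iterate until it is exhausted in each epoch, without `steps_per_epoch`. A minimal sketch, assuming all feature columns are numeric; `make_finite_csv_dataset`, `count_rows`, and the label column name are hypothetical:

```python
import tensorflow as tf


def make_finite_csv_dataset(file_pattern, batch_size, label_name, shuffle=False):
    # Hypothetical helper. num_epochs=1 makes one pass over the files instead
    # of the default num_epochs=None, which repeats forever.
    ds = tf.data.experimental.make_csv_dataset(
        file_pattern,
        batch_size=batch_size,
        label_name=label_name,
        num_epochs=1,
        shuffle=shuffle,  # usually True for training; False keeps this sketch deterministic
    )
    # Assumption: every feature column is numeric. Stack them into one
    # float32 matrix so a plain Dense model can consume the batches.
    return ds.map(
        lambda features, label: (
            tf.stack([tf.cast(v, tf.float32) for v in features.values()], axis=-1),
            label,
        )
    )


def count_rows(dataset):
    # One full pass over a finite dataset (this would never end on a repeating one).
    return sum(int(tf.shape(x)[0]) for x, _ in dataset)
```

With datasets built this way from, say, hypothetical `train/*.csv` and `val/*.csv` patterns, a call like `model.fit(train_ds, validation_data=val_ds, epochs=5)` should run without `steps_per_epoch` or `validation_steps`.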

I think this SO thread answers the case where the total number of records is known in advance.

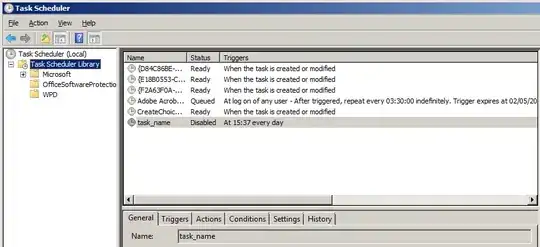

Here is a screenshot from the documentation, but I don't understand it properly.

What does the last line mean?