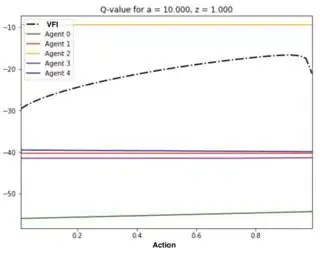

I have faced this problem. Changing the model, the network architecture, the number of episodes, and the other hyperparameters (such as the learning rate) to fit your environment may solve it. However, DDPG still does not perform well in some environments, so I switched to A2C, which gave me better results.

These are the DDPG model and parameters with which I got better results:

```python
import os
from collections import deque

import numpy as np
import tensorflow as tf  # TensorFlow 1.x API (tf.placeholder, tf.layers, tf.contrib)

import var  # the author's own config module (opt, learning_rate, n_vehicle, n_neighbor, ...)

label = 'DDPG_model'

# Initializers: Xavier for hidden kernels, small uniform for output kernels, small positive biases
rand_unif = tf.keras.initializers.RandomUniform(minval=-3e-3, maxval=3e-3)
winit = tf.contrib.layers.xavier_initializer()
binit = tf.constant_initializer(0.01)


class CriticNetwork(object):

    def __init__(self, sess, s_dim, a_dim, learning_rate=1e-3, tau=1e-3, gamma=0.995, hidden_unit_size=64):
        self.sess = sess
        self.s_dim = s_dim
        self.a_dim = a_dim
        self.hidden_unit_size = hidden_unit_size
        self.learning_rate = learning_rate
        self.tau = tau
        self.gamma = gamma
        self.seed = 0

        # Create the critic network
        self.inputs, self.action, self.out = self.build_critic_nn(scope='critic')
        self.network_params = tf.get_collection(tf.GraphKeys.GLOBAL_VARIABLES, scope='critic')

        # Target network
        self.target_inputs, self.target_action, self.target_out = self.build_critic_nn(scope='target_critic')
        self.target_network_params = tf.get_collection(tf.GraphKeys.GLOBAL_VARIABLES, scope='target_critic')

        # Soft update of the target network: theta' <- tau * theta + (1 - tau) * theta'
        self.update_target_network_params = [
            self.target_network_params[i].assign(
                tf.multiply(self.network_params[i], self.tau)
                + tf.multiply(self.target_network_params[i], 1. - self.tau))
            for i in range(len(self.target_network_params))]

        # Network target (y_i)
        self.predicted_q_value = tf.placeholder(tf.float32, [None, 1])

        # Loss (huber_loss takes labels first, then predictions) and optimization op
        self.loss = tf.losses.huber_loss(self.predicted_q_value, self.out, delta=0.5)
        if var.opt == 2:
            self.optimize = tf.train.RMSPropOptimizer(learning_rate=var.learning_rate, momentum=0.95,
                                                      epsilon=0.01).minimize(self.loss)
        elif var.opt == 0:
            self.optimize = tf.train.GradientDescentOptimizer(learning_rate=var.learning_rate).minimize(self.loss)
        else:
            self.optimize = tf.train.AdamOptimizer(self.learning_rate).minimize(self.loss)

        # Gradient of the critic output w.r.t. the action. For each action in the minibatch,
        # tf.gradients sums the gradients of every critic output w.r.t. that action; since each
        # output depends on only one action, the result is dQ(s_i, a_i)/da_i.
        self.action_grads = tf.gradients(self.out, self.action)

    def build_critic_nn(self, scope='network'):
        with tf.variable_scope(scope, reuse=tf.AUTO_REUSE):
            state = tf.placeholder(name='c_states', dtype=tf.float32, shape=[None, self.s_dim])
            action = tf.placeholder(name='c_action', dtype=tf.float32, shape=[None, self.a_dim])
            net = tf.concat([state, action], 1)
            # Non-zero initializers: all-zero kernels make every unit identical and give zero
            # gradients, so only the output bias could ever learn.
            net1 = tf.layers.dense(inputs=net, units=1000, activation="linear",
                                   kernel_initializer=winit, bias_initializer=binit, name='anet1')
            net2 = tf.layers.dense(inputs=net1, units=520, activation="relu",
                                   kernel_initializer=winit, bias_initializer=binit, name='anet2')
            net3 = tf.layers.dense(inputs=net2, units=220, activation="linear",
                                   kernel_initializer=winit, bias_initializer=binit, name='anet3')
            out = tf.layers.dense(inputs=net3, units=1, kernel_initializer=rand_unif, name='anet_out')
            # softsign bounds Q to (-1, 1), so returns must be scaled into that range
            out = tf.nn.softsign(out)
        return state, action, out

    def train(self, inputs, action, predicted_q_value):
        return self.sess.run([self.out, self.optimize], feed_dict={
            self.inputs: inputs, self.action: action, self.predicted_q_value: predicted_q_value})

    def predict(self, inputs, action):
        return self.sess.run(self.out, feed_dict={self.inputs: inputs, self.action: action})

    def predict_target(self, inputs, action):
        return self.sess.run(self.target_out, feed_dict={self.target_inputs: inputs, self.target_action: action})

    def action_gradients(self, inputs, actions):
        return self.sess.run(self.action_grads, feed_dict={self.inputs: inputs, self.action: actions})

    def update_target_network(self):
        self.sess.run(self.update_target_network_params)

    def return_loss(self, predict, inputs, action):
        return self.sess.run(self.loss, feed_dict={
            self.predicted_q_value: predict, self.inputs: inputs, self.action: action})


class ActorNetwork(object):

    def __init__(self, sess, s_dim, a_dim, lr=1e-4, tau=1e-3, batch_size=64, action_bound=1):
        self.sess = sess
        self.s_dim = s_dim
        self.a_dim = a_dim
        self.act_min = 0
        self.act_max = 51
        self.hdim = 64
        self.lr = lr
        self.tau = tau  # parameter for the soft update
        self.batch_size = batch_size
        self.seed = 0
        self.action_bound = action_bound

        # Actor network
        self.inputs, self.out, self.scaled_out = self.create_actor_network(scope='actor')
        self.network_params = tf.get_collection(tf.GraphKeys.GLOBAL_VARIABLES, scope='actor')

        # Target network
        self.target_inputs, self.target_out, self.target_scaled_out = self.create_actor_network(scope='target_actor')
        self.target_network_params = tf.get_collection(tf.GraphKeys.GLOBAL_VARIABLES, scope='target_actor')

        # Soft update of the target network
        self.update_target_network_params = [
            self.target_network_params[i].assign(
                tf.multiply(self.network_params[i], self.tau)
                + tf.multiply(self.target_network_params[i], 1. - self.tau))
            for i in range(len(self.target_network_params))]

        # dQ/da, provided by the critic network
        self.action_gradient = tf.placeholder(tf.float32, [None, self.a_dim])

        # Chain rule: grad of -Q w.r.t. actor weights = -dQ/da * da/dtheta, summed over the batch
        self.unnormalized_actor_gradients = tf.gradients(self.out, self.network_params, -self.action_gradient)
        # Average over the minibatch
        self.actor_gradients = [g / self.batch_size for g in self.unnormalized_actor_gradients]

        # The gradients are already those of -Q, so a positive learning rate ascends Q
        if var.opt == 2:
            self.optimize = tf.train.RMSPropOptimizer(learning_rate=var.learning_rate, momentum=0.95, epsilon=0.01) \
                .apply_gradients(zip(self.unnormalized_actor_gradients, self.network_params))
        elif var.opt == 0:
            self.optimize = tf.train.GradientDescentOptimizer(learning_rate=var.learning_rate) \
                .apply_gradients(zip(self.unnormalized_actor_gradients, self.network_params))
        else:
            self.optimize = tf.train.AdamOptimizer(self.lr) \
                .apply_gradients(zip(self.actor_gradients, self.network_params))

        self.num_trainable_vars = len(self.network_params) + len(self.target_network_params)

    def create_actor_network(self, scope='network'):
        with tf.variable_scope(scope, reuse=tf.AUTO_REUSE):
            state = tf.placeholder(name='a_states', dtype=tf.float32, shape=[None, self.s_dim])
            net1 = tf.layers.dense(inputs=state, units=1500, activation="linear",
                                   kernel_initializer=winit, bias_initializer=binit, name='anet1')
            net2 = tf.layers.dense(inputs=net1, units=1250, activation="relu",
                                   kernel_initializer=winit, bias_initializer=binit, name='anet2')
            out = tf.layers.dense(inputs=net2, units=self.a_dim, kernel_initializer=rand_unif, name='anet_out')
            out = tf.nn.sigmoid(out)  # actions in (0, 1); use tf.nn.tanh for actions in (-1, 1)
            scaled_out = tf.multiply(out, self.action_bound)
        return state, out, scaled_out

    def train(self, inputs, a_gradient):
        self.sess.run(self.optimize, feed_dict={self.inputs: inputs, self.action_gradient: a_gradient})

    def predict(self, inputs):
        return self.sess.run(self.out, feed_dict={self.inputs: inputs})

    def predict_target(self, inputs):
        return self.sess.run(self.target_out, feed_dict={self.target_inputs: inputs})

    def update_target_network(self):
        self.sess.run(self.update_target_network_params)

    def get_num_trainable_vars(self):
        return self.num_trainable_vars

    def save_models(self, sess, model_path):
        """Save the models under DDPGmodel<n_vehicle>/ next to this file."""
        current_dir = os.path.dirname(os.path.realpath(__file__))
        model_path = os.path.join(current_dir, "DDPGmodel" + str(var.n_vehicle), model_path)
        saver = tf.train.Saver(max_to_keep=var.n_vehicle * var.n_neighbor)
        if not os.path.exists(os.path.dirname(model_path)):
            os.makedirs(os.path.dirname(model_path))
        saver.save(sess, model_path, write_meta_graph=True)

    def save(self):
        print('Training Done. Saving models...')
        model_path = label + '/agent_'
        print(model_path)
        self.save_models(self.sess, model_path)

    def load_models(self, sess, model_path):
        """Restore the models from DDPGmodel<n_vehicle>/ next to this file."""
        dir_ = os.path.dirname(os.path.realpath(__file__))
        saver = tf.train.Saver(max_to_keep=var.n_vehicle * var.n_neighbor)
        model_path = os.path.join(dir_, "DDPGmodel" + str(var.n_vehicle), model_path)
        saver.restore(sess, model_path)

    def load(self, sess):
        print("\nRestoring the model...")
        model_path = label + '/agent_'
        self.load_models(sess, model_path)
```
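The classes above only build the graph; the per-minibatch update is not shown. Assuming the standard DDPG update, the two pieces of arithmetic involved are the critic target y_i fed into `predicted_q_value` and the soft target update performed by `update_target_network_params`. A minimal NumPy sketch of both (`critic_targets` and `soft_update` are hypothetical helpers, not part of the original code):

```python
import numpy as np


def critic_targets(rewards, terminals, target_q, gamma=0.99):
    """Return y_i = r_i + gamma * Q'(s2_i, mu'(s2_i)), without bootstrapping on terminal steps.

    rewards, terminals: shape (batch,)
    target_q: critic.predict_target(s2, actor.predict_target(s2)), shape (batch, 1)
    The result has shape (batch, 1), like the critic's predicted_q_value placeholder.
    """
    r = np.asarray(rewards, dtype=np.float32).reshape(-1, 1)
    not_done = 1.0 - np.asarray(terminals, dtype=np.float32).reshape(-1, 1)
    q_next = np.asarray(target_q, dtype=np.float32).reshape(-1, 1)
    return r + gamma * not_done * q_next


def soft_update(target_params, online_params, tau=1e-3):
    """Return theta' <- tau * theta + (1 - tau) * theta' for each pair of weight arrays."""
    return [tau * w + (1.0 - tau) * w_t for w_t, w in zip(target_params, online_params)]
```

In the usual DDPG loop you would compute `a2 = actor.predict_target(s2)`, then `y = critic_targets(r, t, critic.predict_target(s2, a2), gamma)`, call `critic.train(s, a, y)`, pass `critic.action_gradients(s, actor.predict(s))[0]` to `actor.train`, and finally call `update_target_network()` on both networks.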

Parameters:

```python
import argparse

import var  # the author's own config module

parser = argparse.ArgumentParser(description='provide arguments for DDPG agent')

# agent parameters (type= makes values passed on the command line numeric, not strings)
parser.add_argument('--actor-lr', help='actor network learning rate', type=float, default=var.learning_rateMADDPG)
parser.add_argument('--critic-lr', help='critic network learning rate', type=float, default=var.learning_rateMADDPG_c2)
parser.add_argument('--gamma', help='discount factor for critic updates', type=float, default=0.99)
parser.add_argument('--tau', help='soft target update parameter', type=float, default=0.001)
parser.add_argument('--buffer-size', help='max size of the replay buffer', type=int, default=100000)
parser.add_argument('--minibatch-size', help='size of minibatch for minibatch-SGD', type=int, default=32)

# run parameters
parser.add_argument('--env', help='choose the gym env - tested on {Pendulum-v0}', default='Pendulum-v0')
parser.add_argument('--random-seed', help='random seed for repeatability', type=int, default=1234)
parser.add_argument('--max-episodes', help='max num of episodes to do while training', type=int, default=var.number_eps)
parser.add_argument('--max-episode-len', help='max length of 1 episode', type=int, default=100)
parser.add_argument('--render-env', help='render the gym env', action='store_true')
parser.add_argument('--use-gym-monitor', help='record gym results', action='store_true')
parser.add_argument('--monitor-dir', help='directory for storing gym results', default='./results/gym_ddpg')
parser.add_argument('--summary-dir', help='directory for storing tensorboard info', default='./results/tf_ddpg')
```
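The `--buffer-size` and `--minibatch-size` arguments imply a replay buffer, and the model file imports `deque`, but the buffer itself is not shown. A minimal sketch, assuming uniform sampling of `(s, a, r, terminal, s2)` tuples; `ReplayBuffer` and its methods are hypothetical names, not the author's code:

```python
import random
from collections import deque

import numpy as np


class ReplayBuffer:
    """Fixed-size FIFO buffer of (s, a, r, terminal, s2) transitions (hypothetical helper)."""

    def __init__(self, buffer_size=100000, seed=1234):
        self.buffer = deque(maxlen=buffer_size)  # the oldest transitions are dropped automatically
        self.rng = random.Random(seed)

    def add(self, s, a, r, terminal, s2):
        self.buffer.append((s, a, r, terminal, s2))

    def size(self):
        return len(self.buffer)

    def sample_batch(self, batch_size):
        """Sample uniformly without replacement and stack each field into a NumPy array."""
        if not self.buffer:
            raise ValueError("cannot sample from an empty buffer")
        # If the buffer holds fewer than batch_size transitions, return all of them
        batch = self.rng.sample(list(self.buffer), min(batch_size, len(self.buffer)))
        s, a, r, t, s2 = map(np.array, zip(*batch))
        return s, a, r, t, s2
```

Training usually starts only once `size()` exceeds the minibatch size, so every sampled batch has the full `--minibatch-size` rows.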

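To select the action, use one of these methods:

```python
# Greedy: pick the action with the highest actor output
# action = np.argmax(actions[0])

# Stochastic: sample an action index in proportion to the actor outputs.
# The sigmoid outputs do not sum to 1, so normalize them before using them as probabilities.
probs = actions[0] / np.sum(actions[0])
action = np.random.choice(np.arange(len(actions[0])), p=probs)
```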

Several papers discuss this problem. For example, in [1-5] the authors point out some shortcomings of DDPG and explain why it can fail to converge:

- DDPG is designed for settings with continuous and often high-dimensional action spaces, and the problem becomes much more severe as the number of agents increases.

- The second problem is that DDPG cannot handle variability in the set of agents.

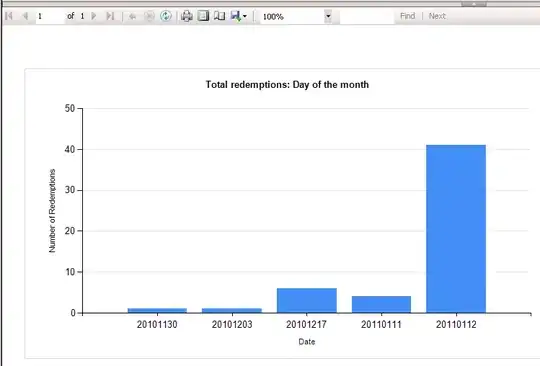

- Real-life platforms, however, are quite dynamic: agents arrive and leave, and their costs vary over time. To be applicable, an allocation algorithm must be able to handle such variability.

- DDPG needs more steps to converge.

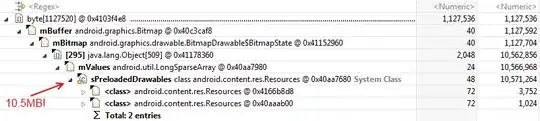

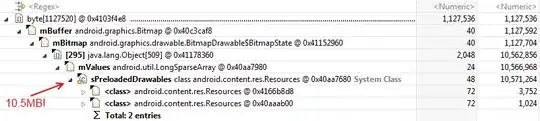

- The results show that algorithms that use replay memory do not perform well in a changing environment because of instability, one of the main problems when observations are fed into the model: the model cannot generalize properly from past experiences, and those experiences are used inefficiently. In addition, these problems get worse because increasing the number of agents expands the state-action space exponentially.
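One direction studied in the references on replay memory ([4], [5]) is to choose which experiences to replay instead of sampling uniformly. As an illustration only, here is a minimal sketch of proportional prioritized sampling in the style of Schaul et al.'s prioritized experience replay, assuming priorities p_i = |TD error| + eps, sampling probabilities P(i) = p_i^alpha / sum_k p_k^alpha, and importance-sampling weights (N * P(i))^-beta normalized to a maximum of 1. `prioritized_sample` is a hypothetical helper, and the sketch omits the sum-tree a real buffer would use:

```python
import numpy as np


def prioritized_sample(td_errors, batch_size, alpha=0.6, beta=0.4, eps=1e-6, rng=None):
    """Sample indices with P(i) proportional to (|td_error_i| + eps) ** alpha.

    Returns (indices, weights); the importance-sampling weights correct for the
    non-uniform sampling and are normalized so that the largest weight is 1.
    """
    rng = np.random.default_rng() if rng is None else rng
    priorities = (np.abs(np.asarray(td_errors, dtype=np.float64)) + eps) ** alpha
    probs = priorities / priorities.sum()
    n = len(probs)
    idx = rng.choice(n, size=batch_size, p=probs)
    weights = (n * probs[idx]) ** (-beta)
    weights /= weights.max()
    return idx, weights
```

The weights would multiply each sample's critic loss, so transitions that are replayed often do not dominate the update.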

1- Cai, Q., Filos-Ratsikas, A., Tang, P., & Zhang, Y. (2018, April). Reinforcement Mechanism Design for e-commerce. In Proceedings of the 2018 World Wide Web Conference (pp. 1339-1348).

2- Iqbal, S., & Sha, F. (2019, May). Actor-attention-critic for multi-agent reinforcement learning. In International Conference on Machine Learning (pp. 2961-2970). PMLR.

3- Foerster, J., Nardelli, N., Farquhar, G., Afouras, T., Torr, P. H., Kohli, P., & Whiteson, S. (2017, August). Stabilising experience replay for deep multi-agent reinforcement learning. In Proceedings of the 34th International Conference on Machine Learning-Volume 70 (pp. 1146-1155). JMLR.org.

4- Hou, Y., & Zhang, Y. (2019). Improving DDPG via Prioritized Experience Replay.

5- Wang, Y., & Zhang, Z. (2019, November). Experience Selection in Multi-agent Deep Reinforcement Learning. In 2019 IEEE 31st International Conference on Tools with Artificial Intelligence (ICTAI) (pp. 864-870). IEEE.