See the reproducible example below:

library(tidyverse) # Provides the %>% pipe

# Generate the data

# with N = 1000 rows and 6 variables,

# and save it locally.

write.table(

x = caret::twoClassSim(

n = 1000,

linearVars = 0

),

file = "data.csv",

row.names = FALSE

)

# Read a random sample of rows; ####

# using data.table::fread and the shell's shuf command

system.time(

dt <- data.table::fread(

"shuf -n 100 data.csv"

)

)

colnames(

dt

)

# There are no column names,

# so we read the first line to extract

# the column names.

tmpColname <- data.table::fread(

"data.csv",

nrows = 0

) %>% colnames()

# And read data;

system.time(

dt <- data.table::fread(

"shuf -n 100 data.csv"

)

)

# Set the column names

print(

colnames(dt) <- tmpColname

)

# Reading all data; #####

# For system time comparison

system.time(

dt <- data.table::fread(

"data.csv"

)

)

My problem:

- How do I extract the column names without reading the data twice, so that I can get rid of the following piece of code when reading a random sample of rows:

# There are no column names,

# so we read the first line to extract

# the column names.

tmpColname <- data.table::fread(

"data.csv",

nrows = 0

) %>% colnames()

# Set the column names

print(

colnames(dt) <- tmpColname

)

- How do I speed up the following piece of code:

# Read a random sample of rows

# using data.table::fread and the shell's shuf command

system.time(

dt <- data.table::fread(

"shuf -n 100 data.csv"

)

)

This takes 0.002 seconds, as opposed to 0.001 seconds for reading the entire data set. I'm aware that the difference is minuscule, but clearly it scales with the number of rows in the data.
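One direction that might address the first point, sketched under assumptions rather than benchmarked: let the shell emit the header line first and shuffle only the remaining lines, so that fread sees the header and the sample in a single call. This assumes a Unix-like system with `head`, `tail` and GNU `shuf` on the PATH, and a data.table version whose `fread` accepts the `cmd` argument. The temporary file and the column names `id` and `x` are made up for illustration.

```r
library(data.table)

# Hypothetical demo file: 1000 rows, columns `id` and `x` (illustrative names)
demo_file <- tempfile(fileext = ".csv")
fwrite(data.table(id = 1:1000, x = rnorm(1000)), demo_file)

# Emit the header line, then a random sample of 100 of the remaining lines.
# shQuote() guards against spaces in the temporary path.
f <- shQuote(demo_file)
cmd <- sprintf("head -n 1 %s; tail -n +2 %s | shuf -n 100", f, f)

# fread runs the command and parses its output, header included
dt_sample <- fread(cmd = cmd)

colnames(dt_sample) # column names come from the header line
nrow(dt_sample)
```

A side effect of this layout is that the header line can no longer be drawn as one of the sampled rows, which a plain `shuf -n 100` over the whole file does not rule out. The shell still scans the file to sample from it, so this is not expected to make the read itself faster.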

What I tried to do:

I changed the nThread argument of fread; however, this didn't really change anything, as seen in the output of microbenchmark:

# Testing loading times using microbenchmark; ####

# Use the same seed for both runs and vary nThread.

# Note: set.seed() affects R's RNG only, not the shell's shuf.

library(microbenchmark)

# Test as a function of nThread

set.seed(1903)

readingTime.shuf <- lapply(

X = 1:32,

FUN = function(x){

benchmark <- microbenchmark(

data.table::fread(

"shuf -n 100 data.csv",

nThread = x

), unit = "ms"

)

# Return the average run time (benchmark$time is in nanoseconds)

mean(benchmark$time)

}

)

set.seed(1903)

readingTime.baseline <- lapply(

X = 1:32,

FUN = function(x){

benchmark <- microbenchmark(

data.table::fread(

"data.csv",

nThread = x

), unit = "ms"

)

# Return the average run time (benchmark$time is in nanoseconds)

mean(benchmark$time)

}

)

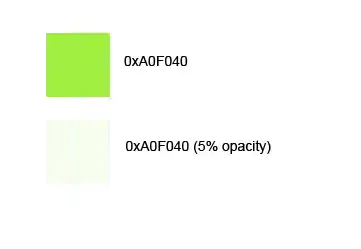

Which yields the following output (Solid line is shuf -n 100 data.csv);
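A note on reading these averages: as far as I understand microbenchmark, the `unit` argument only affects how results are printed and summarised, while the raw `time` column is stored in nanoseconds. A minimal sketch of converting the mean to milliseconds follows; `Sys.sleep(0.01)` is only a stand-in workload, not the fread calls above.

```r
library(microbenchmark)

# Stand-in workload (an assumption for illustration): sleep for about 10 ms
bm <- microbenchmark(Sys.sleep(0.01), times = 10, unit = "ms")

# Raw timings are in nanoseconds; divide by 1e6 to get milliseconds
mean_ms <- mean(bm$time) / 1e6
mean_ms

# summary() reports the statistics in the requested unit
summary(bm, unit = "ms")$mean
```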

After trying r2evans' solutions and running a microbenchmark on a 100 MB data set, it appears that option 2 is even faster than reading the entire data set:

Unit: milliseconds

| expr | min | lq | mean | median | uq | max | neval |
|---|---|---|---|---|---|---|---|
| option 0 | 229.0648 | 272.6154 | 290.8824 | 293.1407 | 314.5152 | 507.2386 | 1000 |
| option 1 | 202.7123 | 235.4226 | 246.9950 | 246.4003 | 259.4866 | 309.8934 | 1000 |
| option 2 | 190.2417 | 220.1596 | 231.3932 | 230.4496 | 243.1495 | 277.7367 | 1000 |
| option 3 | 200.1050 | 236.8651 | 248.5814 | 248.4387 | 260.8436 | 319.0361 | 1000 |

Here option 0 is reading the entire data set.