I have a three-output network with three custom loss functions. During training, Keras reports three loss values, as I would expect, but also an additional value, which I suspect is a combined loss. How is it defined, and what does it represent? I couldn't find anything in the documentation, so any clarification is appreciated.

Also, if it really is a combined loss, does it only serve as an indicator, or does it affect the gradients in any way?

Implementation example:

```python
# my_loss(config) returns a loss function of the form fn(y_true, y_pred)
losses = [my_loss(config1), my_loss(config2), my_loss(config3)]

model.compile(optimizer=optimizer, loss=losses, run_eagerly=False)

model.fit(...)  # training returns 4 loss values: 'loss', 'my_loss1', 'my_loss2' and 'my_loss3'
```

EDIT:

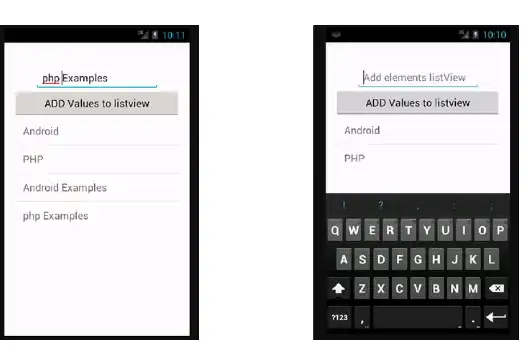

Example training curves for the losses. The sum of my three losses is clearly not equal to the combined loss, and I do not pass any loss weights (`loss_weights`) to the `compile` method.
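A minimal sketch for checking the suspicion numerically, assuming TensorFlow 2.x with `tf.keras`. Everything in it is a hypothetical stand-in rather than the original setup: the `my_loss` factory, the model layout, the loss scales and the L2 `kernel_regularizer`. It recomputes the three per-output losses by hand on one batch and compares them with the `'loss'` value Keras reports, counting any regularization penalties collected in `model.losses` separately. If the reported value matches the per-output sum plus those penalties, that would suggest the gap in the curves comes from terms other than the three custom losses.

```python
import numpy as np
import tensorflow as tf


def my_loss(scale):
    """Hypothetical stand-in for the question's my_loss(config) factory."""
    def loss(y_true, y_pred):
        return scale * tf.reduce_mean(tf.square(y_true - y_pred))
    return loss


# Small three-output model. The L2 kernel_regularizer is an assumption,
# added to show where an "extra" term in the combined loss can come from.
inputs = tf.keras.Input(shape=(4,))
hidden = tf.keras.layers.Dense(
    8, activation="relu", kernel_regularizer=tf.keras.regularizers.l2(0.01)
)(inputs)
outputs = [tf.keras.layers.Dense(1, name=f"out{i}")(hidden) for i in range(3)]
model = tf.keras.Model(inputs, outputs)

losses = [my_loss(1.0), my_loss(2.0), my_loss(3.0)]
model.compile(optimizer="adam", loss=losses)  # no loss_weights, as in the question

rng = np.random.default_rng(0)
x = rng.random((32, 4), dtype=np.float32)
ys = [rng.random((32, 1), dtype=np.float32) for _ in range(3)]

# Combined 'loss' as reported by Keras (a single batch, so no averaging across batches).
reported = model.evaluate(x, ys, batch_size=32, verbose=0, return_dict=True)["loss"]

# Recompute by hand: the three per-output losses, plus regularization penalties.
preds = model(x, training=False)
per_output = [
    float(fn(tf.convert_to_tensor(y), p)) for fn, y, p in zip(losses, ys, preds)
]
regularization = sum(float(r) for r in model.losses)

print("sum of per-output losses:", sum(per_output))
print("regularization terms:    ", regularization)
print("reported combined loss:  ", reported)
```

If the real model has no regularizers and makes no `add_loss` calls, `model.losses` is empty and the sketch would not account for a difference.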