I have a spectrum that I want to subtract a baseline from. The spectrum data are:

1.484043000000000001e+00 1.121043091000000004e+03

1.472555999999999976e+00 1.140899658000000045e+03

1.461239999999999872e+00 1.135047851999999921e+03

1.450093000000000076e+00 1.153286499000000049e+03

1.439112000000000169e+00 1.158624877999999853e+03

1.428292000000000117e+00 1.249718872000000147e+03

1.417629999999999946e+00 1.491854857999999922e+03

1.407121999999999984e+00 2.524922362999999677e+03

1.396767000000000092e+00 4.102439940999999635e+03

1.386559000000000097e+00 4.013319579999999860e+03

1.376497999999999999e+00 3.128252441000000090e+03

1.366578000000000070e+00 2.633181152000000111e+03

1.356797999999999949e+00 2.340077147999999852e+03

1.347154999999999880e+00 2.099404540999999881e+03

1.337645999999999891e+00 2.012083983999999873e+03

1.328268000000000004e+00 2.052154540999999881e+03

1.319018999999999942e+00 2.061067871000000196e+03

1.309895999999999949e+00 2.205770507999999609e+03

1.300896999999999970e+00 2.199266602000000148e+03

1.292019000000000029e+00 2.317792235999999775e+03

1.283260000000000067e+00 2.357031494000000293e+03

1.274618000000000029e+00 2.434981689000000188e+03

1.266089999999999938e+00 2.540746337999999923e+03

1.257675000000000098e+00 2.605709472999999889e+03

1.249370000000000092e+00 2.667244141000000127e+03

1.241172999999999860e+00 2.800522704999999860e+03

I've taken only every 20th data point from the actual data file, but the general shape is preserved.

import matplotlib.pyplot as plt

share = the_above_array

plt.plot(share)

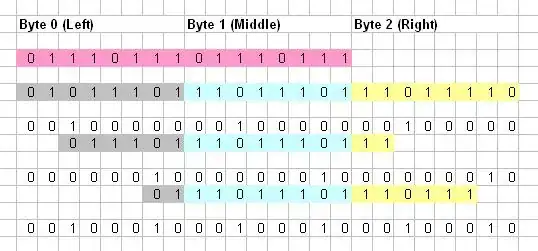

Original_spectrum

There is a clear tail in around the high x values. Assume the tail is an artifact and needs to be removed. I've tried solutions using the ALS algorithm by P. Eilers, a rubberband approach, and the peakutils package, but these end up subtracting the tail and creating a rise around the low x values or not creating a suitable baseline.

ALS algorithim, in this example I am using lam=1E6 and p=0.001; these were the best parameters I was able to manually find:

# ALS approach

from scipy import sparse

from scipy.sparse.linalg import spsolve

def baseline_als(y, lam, p, niter=10):

L = len(y)

D = sparse.csc_matrix(np.diff(np.eye(L), 2))

w = np.ones(L)

for i in range(niter):

W = sparse.spdiags(w, 0, L, L)

Z = W + lam * D.dot(D.transpose())

z = spsolve(Z, w*y)

w = p * (y > z) + (1-p) * (y < z)

return z

baseline = baseline_als(share[:,1], 1E6, 0.001)

baseline_subtracted = share[:,1] - baseline

plt.plot(baseline_subtracted)

Rubberband approach:

# rubberband approach

from scipy.spatial import ConvexHull

def rubberband(x, y):

# Find the convex hull

v = ConvexHull(share).vertices

# Rotate convex hull vertices until they start from the lowest one

v = np.roll(v, v.argmax())

# Leave only the ascending part

v = v[:v.argmax()]

# Create baseline using linear interpolation between vertices

return np.interp(x, x[v], y[v])

baseline_rubber = rubberband(share[:,0], share[:,1])

intensity_rubber = share[:,1] - baseline_rubber

plt.plot(intensity_rubber)

peakutils package:

# peakutils approach

import peakutils

baseline_peakutils = peakutils.baseline(share[:,1])

intensity_peakutils = share[:,1] - baseline_peakutils

plt.plot(intensity_peakutils)

Are there any suggestions, aside from masking the low x value data, for constructing a baseline and subtracting the tail without creating a rise in the low x values?