Q1.

I'm trying to write a custom autograd function in PyTorch, but I'm having trouble implementing the analytical backpropagation for y = x / sum(x, dim=0), where x is a 2-dimensional tensor of size (Height, Width).

Here's my code:

    class MyFunc(torch.autograd.Function):

        @staticmethod
        def forward(ctx, input):
            ctx.save_for_backward(input)
            input = input / torch.sum(input, dim=0)
            return input

        @staticmethod
        def backward(ctx, grad_output):
            input = ctx.saved_tensors[0]
            H, W = input.size()
            sum = torch.sum(input, dim=0)
            grad_input = grad_output * (1/sum - input*1/sum**2)
            return grad_input

I used gradcheck (from torch.autograd) to compare the analytical Jacobian with the numerical one:

    from torch.autograd import gradcheck

    func = MyFunc.apply
    input = torch.randn(3, 3, dtype=torch.double, requires_grad=True)
    test = gradcheck(func, input)

and the check failed.

Could someone please help me get the correct backpropagation result?

Thanks!
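A minimal sketch of one possible fix for the 2-D case. The posted backward multiplies `grad_output` elementwise by `(1/sum - input/sum**2)`, which only covers the diagonal terms of the Jacobian. Since every `y[i, j]` depends on all `x[k, j]` in the same column, the contributions have to be summed over the column: dL/dx[k, j] = g[k, j] / s[j] - sum_i(g[i, j] * x[i, j]) / s[j]**2, with s[j] = sum_i x[i, j]. The class name `NormalizeDim0` is hypothetical, and the test input uses `torch.rand(...) + 0.5` instead of `randn` as an assumption to keep the column sums away from zero.

```python
import torch
from torch.autograd import gradcheck


class NormalizeDim0(torch.autograd.Function):
    """y = x / sum(x, dim=0) for a 2-D tensor x of shape (H, W)."""

    @staticmethod
    def forward(ctx, x):
        s = x.sum(dim=0, keepdim=True)  # column sums, shape (1, W)
        ctx.save_for_backward(x, s)
        return x / s

    @staticmethod
    def backward(ctx, grad_output):
        x, s = ctx.saved_tensors
        # dy[i, j] / dx[k, j] = (delta_ik * s[j] - x[i, j]) / s[j]**2, so
        # dL/dx[k, j] = g[k, j] / s[j] - sum_i(g[i, j] * x[i, j]) / s[j]**2
        weighted = (grad_output * x).sum(dim=0, keepdim=True)
        return grad_output / s - weighted / s ** 2


# Assumption: positive inputs, so the column sums stay well away from zero.
x = torch.rand(3, 3, dtype=torch.double) + 0.5
x.requires_grad_(True)
ok = gradcheck(NormalizeDim0.apply, (x,))
print(ok)
```

With `randn` inputs a column sum can land close to zero, which makes the finite-difference comparison in gradcheck unreliable even when the formula is right.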

Q2.

Thanks for the answers!

Thanks to your help, I was able to implement backpropagation for an (H, W) tensor.

However, when I implemented backpropagation for an (N, H, W) tensor, I ran into a problem. I suspect it is related to how I initialize the new tensor.

Here's my new code:

    import torch
    import torch.nn as nn
    import torch.nn.functional as F

    class MyFunc(torch.autograd.Function):

        @staticmethod
        def forward(ctx, input):
            ctx.save_for_backward(input)
            N = input.size(0)
            for n in range(N):
                input[n] /= torch.sum(input[n], dim=0)
            return input

        @staticmethod
        def backward(ctx, grad_output):
            input = ctx.saved_tensors[0]
            N, H, W = input.size()
            I = torch.eye(H).unsqueeze(-1)
            sum = input.sum(1)
            grad_input = torch.zeros((N,H,W), dtype = torch.double, requires_grad=True)
            for n in range(N):
                grad_input[n] = ((sum[n] * I - input[n]) * grad_output[n] / sum[n]**2).sum(1)
            return grad_input

The gradcheck code is:

    from torch.autograd import gradcheck

    func = MyFunc.apply
    input = torch.rand(2, 2, 2, dtype=torch.double, requires_grad=True)
    test = gradcheck(func, input)
    print(test)

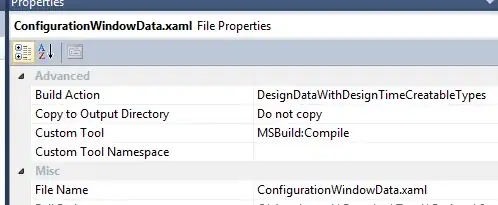

and result is enter image description here

I don't know why the error occurs...

Your help would be very useful for implementing my own convolutional network.

Thanks! Have a nice day.
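A hedged sketch of how the (N, H, W) case might be written without the two patterns that look risky in the code above. I have not confirmed which one triggers the reported error. First, forward divides `input` in place after saving it, so backward may no longer see the original values. Second, backward writes slice by slice into a new tensor created with `requires_grad=True`. The version below returns a new tensor from forward and lets broadcasting handle the batch dimension, so no loop or preallocated tensor is needed. The class name `BatchedNormalize` is hypothetical.

```python
import torch
from torch.autograd import gradcheck


class BatchedNormalize(torch.autograd.Function):
    """y[n] = x[n] / sum(x[n], dim=0) for x of shape (N, H, W)."""

    @staticmethod
    def forward(ctx, x):
        s = x.sum(dim=1, keepdim=True)  # sums over H, shape (N, 1, W)
        ctx.save_for_backward(x, s)
        return x / s  # out-of-place: x itself is left unmodified

    @staticmethod
    def backward(ctx, grad_output):
        x, s = ctx.saved_tensors
        # Same formula as the 2-D case, applied independently per batch item:
        # dL/dx[n, k, w] = g[n, k, w] / s[n, w]
        #                  - sum_i(g[n, i, w] * x[n, i, w]) / s[n, w]**2
        weighted = (grad_output * x).sum(dim=1, keepdim=True)
        return grad_output / s - weighted / s ** 2


# Assumption: positive inputs, so the sums stay well away from zero.
x = torch.rand(2, 2, 2, dtype=torch.double) + 0.5
x.requires_grad_(True)
ok = gradcheck(BatchedNormalize.apply, (x,))
print(ok)
```

The per-sample expression in the original loop, `((sum[n] * I - input[n]) * grad_output[n] / sum[n]**2).sum(1)`, expands to the same formula, so the math there appears sound as long as `input` still holds the unnormalized values.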