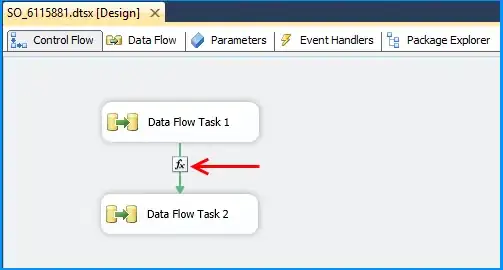

I have an Azure Data Factory copy activity that loads Parquet files into Azure Synapse. The sink is configured as shown below:

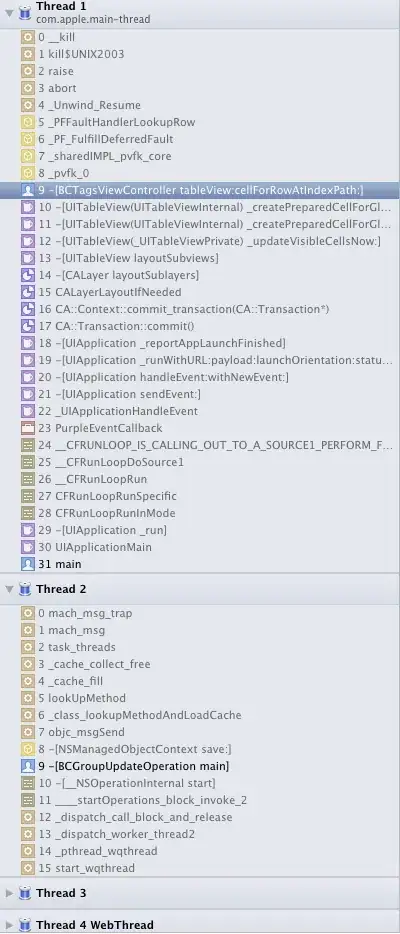

After the load completed, the staging table had a structure like this:

I then create a temp table based on the staging table. This worked fine until today, when newly created staging tables suddenly got nvarchar(max) columns instead of nvarchar(4000):

Creating the temp table now fails with a predictable error: Column 'currency_abbreviation' has a data type that cannot participate in a columnstore index.

Why has the AutoCreate table definition changed, and how can I restore the "normal" behavior without nvarchar(max) columns?