A rather complex (depending on standards) query running on a table with about 1M records. Creating some temporary tables and building arrays and jsonb.

On localhost I get average of 2.5 seconds. On Google SQL I get 17-19 seconds.

Note:

- Other queries, like simple selects, are faster on server than on local. As it should.

- I did run vacuum, rebuilded all index, checked for hanging process. All good.

- I played with resources. Created an instance with 8 VCPU and 16Gb Ram. Almost same result.

- I checked proc, mem, disc. No problem.

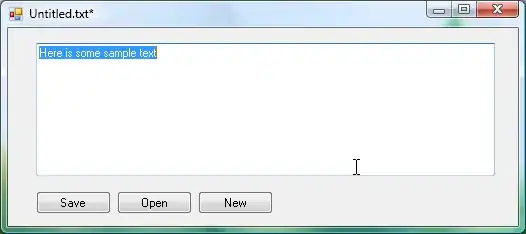

Here are the results from explain analysefor the most slow query. and here for local one

and here for local one

What could possibly be?

Possible duplicate with this but it's not answered, has 2 years and has no tags. I'll try my luck.

L.E. explain analyse verbose in text

Update on public.customers (cost=30.38..207.32 rows=127 width=104) (actual time=13105.151..13105.154 rows=0 loops=1)

Planning Time: 1.852 ms

Execution Time: 13105.306 ms

-> Hash Join (cost=30.38..207.32 rows=127 width=104) (actual time=316.371..13091.937 rows=42 loops=1)

" Output: customers.id, customers.company_id, customers.sap_id, customers.parent_sap_id, jsonb_set(customers.stats, '{consolidatedSales}'::text[], jsonb_build_array(calculateconsolidatedstats(customers.id, customers.sap_id, ((date_part('year'::text, (CURRENT_DATE)::timestamp without time zone) - '2'::double precision))::integer), calculateconsolidatedstats(customers.id, customers.sap_id, ((date_part('year'::text, (CURRENT_DATE)::timestamp without time zone) - '1'::double precision))::integer), calculateconsolidatedstats(customers.id, customers.sap_id, (date_part('year'::text, (CURRENT_DATE)::timestamp without time zone))::integer)), true), customers.tags, customers.account_manager_id, customers.created_by_id, customers.customer_status_id, customers.created_at, customers.updated_at, customers.ctid, parents_with_sales.ctid"

Inner Unique: true

Hash Cond: (customers.id = parents_with_sales.id)

-> Seq Scan on public.customers (cost=0.00..74.54 rows=254 width=924) (actual time=0.021..0.419 rows=254 loops=1)

-> Hash (cost=27.88..27.88 rows=200 width=10) (actual time=0.086..0.088 rows=42 loops=1)

" Output: parents_with_sales.ctid, parents_with_sales.id"

" Output: customers.id, customers.company_id, customers.sap_id, customers.parent_sap_id, customers.stats, customers.tags, customers.account_manager_id, customers.created_by_id, customers.customer_status_id, customers.created_at, customers.updated_at, customers.ctid"

Buckets: 1024 Batches: 1 Memory Usage: 10kB

-> HashAggregate (cost=25.88..27.88 rows=200 width=10) (actual time=0.059..0.072 rows=42 loops=1)

" Output: parents_with_sales.ctid, parents_with_sales.id"

Group Key: parents_with_sales.id

Batches: 1 Memory Usage: 40kB

-> Seq Scan on pg_temp_7.parents_with_sales (cost=0.00..22.70 rows=1270 width=10) (actual time=0.010..0.019 rows=42 loops=1)

" Output: parents_with_sales.ctid, parents_with_sales.id"